Source – electronicdesign.com

To identify skin cancer, perceive human speech, and run other deep learning tasks, chipmakers are reworking processors to handle lower-precision numbers. These numbers contain fewer bits than higher-precision ones, which demand heavier lifting from computers.

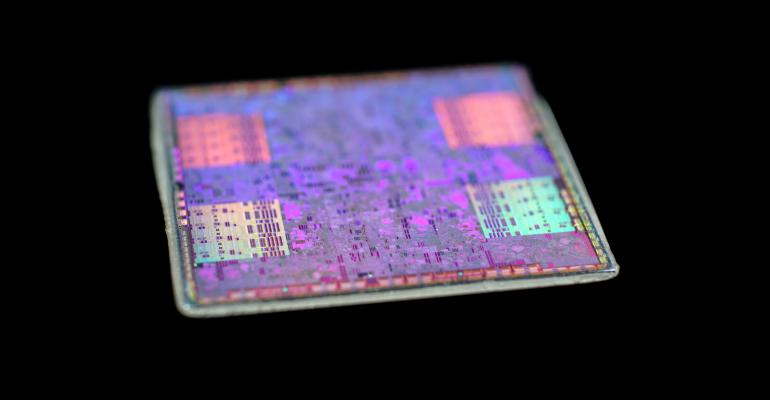

Intel’s Nervana unit plans to release a special processor before the end of the year that trains neural networks faster than other architectures. In addition to improving memory and interconnects, Intel created a new way of formatting numbers for lower-precision math. The numbers take up fewer bits, so the hardware can use less silicon, less computing power, and less electricity.

Intel’s numerology is an example of the dull and yet strangely elegant ways that chip companies are coming to grips with deep learning. It is still unclear whether ASICs, FPGAs, CPUs, GPUs, or other chips will be best at handling calculations like the human brain does. But every chip appears to be using lower precision math to get the job done.

Still, companies pay a surcharge for using numbers with less detail. “You are giving up something, but the question is whether it’s significant or not,” said Paulius Micikevicius, principal engineer in Nvidia’s computer architecture and deep learning research group. “At some point you start losing accuracy, and people start playing games to recover it.”

Shedding precision is nothing new, he said. For over five years, oil and gas companies have stored drilling and geological data in half-precision numbers – 16-bit floating point – and run calculations with single-precision – 32-bit floating point – on Nvidia’s graphics chips, which are the current gold standard for training and running deep learning.

In recent years, Nvidia has reworked its graphics chips to reduce the computing power wasted in training deep learning programs. Its older Pascal architecture performs 16-bit math twice as efficiently as 32-bit operations. Its latest Volta architecture runs 16-bit operations inside custom tensor cores, which speedily move data through the layers of a neural network.

Intel’s new format, FlexPoint, maximizes the precision that can be stored in 16 bits. It can represent a slightly wider range of numbers than traditional fixed-point formats, which can be handled with less computing power and memory. But it seems to provide less flexibility than the floating-point numbers commonly used with neural networks.

Different parts of deep learning need different levels of precision. Training entails going through, for example, thousands of photographs without explicit programming. An algorithm automatically adjusts millions of connections between the layers of the neural network, and over time it creates a model for interpreting new data. This requires high-precision math, typically 32-bit floating point.

The inferencing phase is actually running the algorithm. This can take advantage of lower precision, which means lower power and cooling costs in data centers. In May, Google said that its tensor processing unit (TPU) runs 8-bit integer operations for inferencing. The company claims that it provides six times the efficiency of 16-bit floating point numbers.
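As a rough illustration of what 8-bit integer inferencing involves, the Python sketch below maps floating-point weights and inputs to integers between -127 and 127, does the multiply-and-add in integer arithmetic, and rescales the result once at the end. The symmetric scaling scheme, the sample values, and the `quantize` helper are assumptions chosen for illustration, not a description of how Google's TPU or any other chip handles quantization.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale (hypothetical helper)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    largest = max(abs(v) for v in values)
    scale = largest / qmax if largest else 1.0
    # Round to the nearest integer and clamp to the signed 8-bit range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


# Made-up weights and inputs for one neuron.
weights = [0.5, -0.25, 0.125, 1.0]
inputs = [1.0, 2.0, -1.0, 0.5]

# Reference result in full floating point.
exact = sum(w * x for w, x in zip(weights, inputs))

q_weights, w_scale = quantize(weights)
q_inputs, x_scale = quantize(inputs)

# Integer-only multiply-accumulate, then a single floating-point rescale.
int_sum = sum(qw * qx for qw, qx in zip(q_weights, q_inputs))
approx = int_sum * w_scale * x_scale

print("floating point:", exact)
print("8-bit integers:", approx)
```

The two results differ slightly, which is the accuracy being traded for cheaper arithmetic.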

Fujitsu is working on a proprietary processor that also takes advantage of 8-bit integers for inferencing, while Nvidia’s tensor cores settle for 16-bit floating-point for the same chores. Microsoft invented an 8-bit floating-point format to work with the company’s Brainwave FPGAs installed in its data centers.

Training with anything lower than 32-bit numbers is hard. Some numbers turn into zeros when represented in 16 bits. That can make the model less accurate and potentially prone to misidentify, for example, a skin blemish as cancer. But lower precision could pay big dividends for training, which uses more processing power than inferencing.
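The vanishing-number problem is easy to reproduce. The sketch below uses Python's standard `struct` module to round a value to IEEE 754 half precision and single precision; the helper names `to_half` and `to_single` and the sample value are assumptions made for illustration.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest 16-bit (half-precision) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_single(x):
    """Round a Python float to the nearest 32-bit (single-precision) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


tiny = 1e-8  # a made-up small value, the size a gradient might plausibly have

as_half = to_half(tiny)
as_single = to_single(tiny)

print("16-bit:", as_half)    # the value is too small for half precision
print("32-bit:", as_single)  # single precision still holds it
```

The smallest positive number half precision can hold is about 6e-8, so anything much below that is stored as zero.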

Micikevicius and researchers from Baidu recently published a paper on programming tips to train models with half-precision numbers without losing accuracy. This mixed-precision training means that computers can use half the memory without changing parameters like the number of neural network layers. That can mean faster training.

Micikevicius said that one tactic is to keep a master copy of weights. These weights are updated with tiny numbers called gradients to strengthen the link between neurons in the layers of the network. The master copy stores information in 32-bit floating point and it constantly checks that the 16-bit gradients are not taking the model off course.
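One way to see why a 32-bit master copy helps is to apply the same small update many times to a weight stored in 16 bits and to one stored in 32 bits. The following Python sketch does that in software; the helper names, the starting weight, and the update size are assumptions for illustration, not values from the paper.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest 16-bit (half-precision) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_single(x):
    """Round a Python float to the nearest 32-bit (single-precision) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


update = 1e-4  # hypothetical weight update (learning rate times gradient)
steps = 100

half_weight = 1.0    # weight kept only in 16 bits
master_weight = 1.0  # 32-bit master copy of the same weight

for _ in range(steps):
    # Near 1.0, half precision moves in steps of about 0.001,
    # so adding 0.0001 rounds straight back to the old value.
    half_weight = to_half(half_weight + update)
    master_weight = to_single(master_weight + update)

# The 16-bit copy used for the next pass is derived from the master copy.
half_copy_for_compute = to_half(master_weight)

print("16-bit only:  ", half_weight)
print("32-bit master:", master_weight)
print("16-bit copy:  ", half_copy_for_compute)
```

In this sketch the 16-bit weight never moves, while the master copy accumulates all 100 updates and hands a meaningful 16-bit copy back to the hardware.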

The paper also provides a way to preserve the gradients, which can be so small that they turn into zeros when trimmed down to the half-precision format. To prevent these errant zeros from throwing off the model, Micikevicius said that the computer needs to multiply the gradients so that they are all above the point where they can be safely dumbed down.
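The scaling trick can be sketched in a few lines of Python. Here a single gradient is multiplied directly, which is a simplification, and the scale factor of 1,024, the gradient value, and the `to_half` helper are all assumptions for illustration rather than settings taken from the paper.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest 16-bit (half-precision) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


scale = 1024.0   # hypothetical scaling factor
gradient = 1e-8  # made-up gradient, too small for half precision

# Without scaling, the gradient is lost when stored in 16 bits.
unscaled_half = to_half(gradient)

# With scaling, the gradient lands in the range half precision can hold.
scaled_half = to_half(gradient * scale)

# Dividing by the same factor in higher precision recovers the value.
recovered = scaled_half / scale

print("unscaled in 16 bits:", unscaled_half)
print("scaled in 16 bits:  ", scaled_half)
print("recovered:          ", recovered)
```

The recovered value is not exact, but it stays within about 1 percent of the original instead of collapsing to zero.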

These programming ploys take advantage of Nvidia’s tensor cores. The silicon can multiply 16-bit numbers and accumulate the results into 32 bits, ideal for the matrix multiplications used in training deep learning models. Nvidia claims that its Volta graphics chips can run more than 10 times more training operations per second than those based on Pascal.
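The benefit of accumulating into 32 bits shows up even in a toy dot product. The Python sketch below emulates the arithmetic in software with the standard `struct` module; it is not a model of how tensor cores work internally, and the helper names and the vector of ones are assumptions for illustration.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest 16-bit (half-precision) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_single(x):
    """Round a Python float to the nearest 32-bit (single-precision) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Two made-up vectors of 4,096 ones; the true dot product is 4096.
a = [1.0] * 4096
b = [1.0] * 4096

half_acc = 0.0    # running sum rounded to 16 bits after every addition
single_acc = 0.0  # running sum rounded to 32 bits after every addition

for x, y in zip(a, b):
    product = to_half(x) * to_half(y)  # 16-bit inputs
    half_acc = to_half(half_acc + product)
    single_acc = to_single(single_acc + product)

print("16-bit accumulator:", half_acc)    # 2048.0
print("32-bit accumulator:", single_acc)  # 4096.0
```

Above 2,048, half precision can only represent every other integer, so adding 1 no longer changes the 16-bit running sum. The 32-bit accumulator has no such problem at this size.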

Nvidia’s tensor cores, while unique, are part of the zeitgeist. Wave Computing and Graphcore have both built server chips that accumulate 16-bit multiplications into 32 bits for training operations, while Intel’s Nervana hardware accumulates them into a 48-bit format. Other companies like Groq and Cerebras Systems could take similar tacks.

The industrywide shift could also sow confusion about performance. Last year, Baidu created an open-source benchmark called DeepBench to clock processors for neural network training. It lays out minimum precision requirements of 16-bit floating point for multiplication and 32-bit floating point for addition. It recently extended the benchmark to inferencing.

“Deep learning developers and researchers want to train neural networks as fast as possible. Right now, we are limited by computing performance,” said Greg Diamos, senior researcher at Baidu’s Silicon Valley research lab, in a statement. “The first step in improving performance is to measure it.”