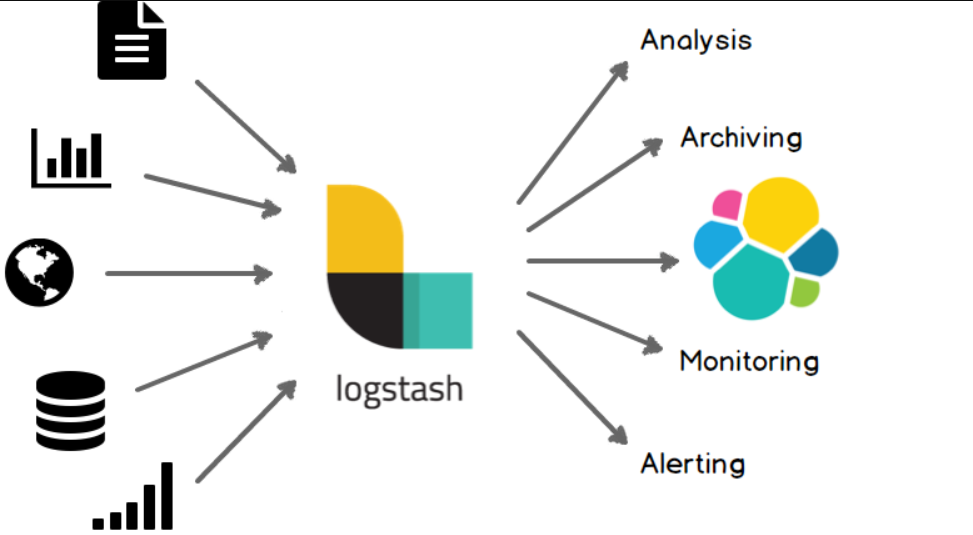

As the volume of machine-generated data continues to grow, organizations require effective tools to collect, process, and analyze this data in real time. Logstash is an open-source data collection and processing tool that serves as a core component of the Elastic Stack. It enables organizations to ingest, parse, and transform data from a variety of sources, making it a vital tool for log management, analytics, and observability.

Logstash plays a crucial role in modern IT operations, security analytics, and business intelligence. By acting as a pipeline that collects, enriches, and routes data, Logstash ensures that organizations can make better use of their data, improving decision-making and operational efficiency.

What is Logstash?

Logstash is an open-source data processing pipeline designed to collect, process, and forward data to various storage and analysis tools, such as Elasticsearch, Amazon S3, or a database. It allows users to ingest data from diverse sources, transform the data into a usable format, and export it to a destination for further analysis or visualization.

Logstash is highly extensible, with a rich library of plugins that enable integration with multiple input sources, data processing filters, and output destinations. Its flexibility makes it a preferred choice for handling logs, metrics, events, and other types of data from servers, applications, network devices, and more.

Top 10 Use Cases of Logstash

- Centralized Log Management: Collect and process logs from multiple systems, applications, and devices into a central repository for easier analysis.
- Application Performance Monitoring (APM): Track application logs and metrics to monitor performance, identify bottlenecks, and optimize user experience.
- Security Information and Event Management (SIEM): Enrich and forward logs to security tools to detect, analyze, and respond to security incidents.
- Infrastructure Monitoring: Gather metrics from servers, network devices, and containers to monitor system health and performance.
- IoT Data Processing: Ingest and process data from IoT devices, enabling real-time analytics and operational insights.
- Data Enrichment: Enhance raw log data with additional context, such as geolocation or user agent parsing, for better insights.
- Event Correlation: Aggregate logs from distributed systems to identify patterns and correlations that point to root causes of issues.
- Cloud Monitoring: Process logs and metrics from cloud platforms like AWS, Azure, and Google Cloud to ensure optimal performance and cost efficiency.
- Compliance Reporting: Collect and normalize logs to meet regulatory compliance requirements, such as GDPR, HIPAA, and PCI DSS.
- Business Analytics: Ingest and transform data from sales, marketing, and customer engagement platforms for actionable business insights.

What Are the Features of Logstash?

- Wide Input Source Support: Logstash supports numerous input sources, including Syslog, Beats, HTTP, TCP, Kafka, and databases.
- Flexible Data Processing: Use filters to parse, enrich, and transform data, such as grok patterns for log parsing or GeoIP for geolocation enrichment.
- Extensive Plugin Ecosystem: Choose from over 200 plugins to customize input, filter, and output stages for specific use cases.
- Real-Time Data Processing: Process and forward data in real time, ensuring up-to-date insights for monitoring and analytics.
- Integration with Elastic Stack: Seamlessly integrate with Elasticsearch and Kibana for storage, search, and visualization.
- Scalability and High Performance: Handle large volumes of data efficiently, scaling horizontally by deploying multiple Logstash instances.
- Rich Event Metadata: Include metadata such as timestamps, source information, and pipeline stages for better event context.
- Error Handling: Handle failed data processing gracefully by using dead letter queues or routing problematic events for further inspection.
- Support for Structured and Unstructured Data: Process JSON, XML, CSV, and unstructured text data, making it versatile for different use cases.
- Open-Source and Extensible: Customize and extend Logstash’s functionality using community plugins or custom code.
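The error-handling feature in the list above can be illustrated with conditional routing. The following is a minimal sketch rather than a recommended production layout. It assumes events were parsed earlier in the pipeline by a grok filter, which by default adds the `_grokparsefailure` tag when no pattern matches, and it writes those events to a local file instead of Elasticsearch. The file path is a hypothetical example.

```conf
output {
  if "_grokparsefailure" in [tags] {
    # Events grok could not parse: set them aside for inspection.
    # The path below is an assumed example location.
    file {
      path => "/var/log/logstash/unparsed-events.log"
    }
  } else {
    # Successfully parsed events continue to Elasticsearch.
    elasticsearch {
      hosts => ["http://localhost:9200"]
    }
  }
}
```

Dead letter queues are a separate mechanism that has to be enabled in the Logstash settings file. As far as the author of this sketch is aware, they capture events that an output rejected rather than events that a filter failed to parse, so the two approaches can complement each other.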

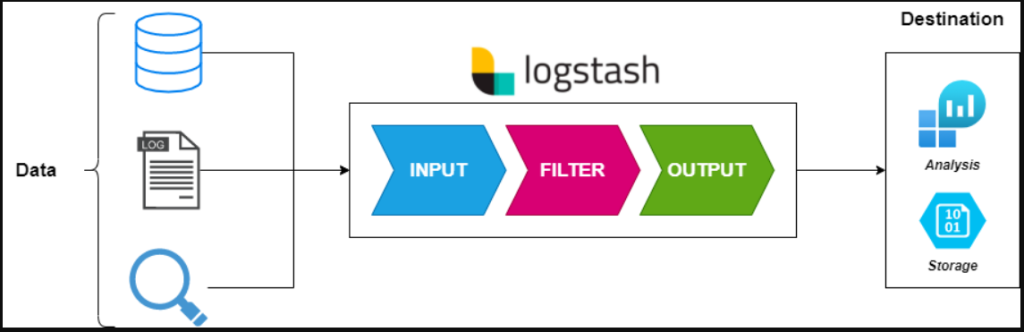

How Logstash Works and Its Architecture

How It Works:

Logstash operates as a pipeline with three main stages: Input, Filter, and Output. Data flows through these stages, where it is collected, processed, and sent to the desired destination.

Architecture Overview:

- Input Stage: Collect data from various sources such as log files, databases, or message queues. Inputs define where the data originates and how it enters Logstash.
- Filter Stage: Transform and enrich data using filters like grok (pattern matching), mutate (data modification), and GeoIP (geolocation enrichment).
- Output Stage: Send processed data to destinations like Elasticsearch, S3, or other storage and analysis systems.
- Plugins: Logstash uses plugins for inputs, filters, and outputs, making it flexible to handle diverse data pipelines.
- Pipeline Management: Define multiple pipelines for different use cases, enabling parallel processing of diverse data streams.
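To make the three stages concrete, here is a small sketch of a complete pipeline that reads lines from standard input, parses them, and prints the result. The sample line format (for example `55.3.244.1 GET /index.html 15824 0.043`) and the field names such as `client_ip` are assumptions chosen for illustration, not anything Logstash requires.

```conf
input {
  # Input stage: read events typed into the terminal or piped in
  stdin { }
}

filter {
  # Filter stage, step 1: split the raw line into named fields
  grok {
    match => { "message" => "%{IP:client_ip} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
  }
  # Filter stage, step 2: store numeric fields as numbers, not strings
  mutate {
    convert => {
      "bytes"    => "integer"
      "duration" => "float"
    }
  }
}

output {
  # Output stage: print each event in a readable form
  stdout { codec => rubydebug }
}
```

Saved to a file (say, a hypothetical `demo.conf`), this can be run with the `-f` flag of the `logstash` binary. Swapping the stdin and stdout plugins for beats and elasticsearch changes where data comes from and where it goes without touching the filter stage.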

How to Install Logstash

Steps to Install Logstash on Linux (Debian/Ubuntu, using apt):

1. Update Your System:

```bash
sudo apt update
sudo apt upgrade
```

2. Install Java:

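Logstash 8.x packages ship with a bundled JDK, so this step is optional. To run Logstash on a system JDK instead (selected through the LS_JAVA_HOME environment variable), install one using:

```bash
sudo apt install openjdk-11-jdk
```

3. Add the Elastic Repository: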

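```bash
# Import the Elastic signing key into a dedicated keyring (apt-key is deprecated)
wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo gpg --dearmor -o /usr/share/keyrings/elastic-keyring.gpg
sudo apt install apt-transport-https
# Register the 8.x repository, signed by that keyring
echo "deb [signed-by=/usr/share/keyrings/elastic-keyring.gpg] https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-8.x.list
sudo apt update
```

4. Install Logstash: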

```bash
sudo apt install logstash
```

5. Configure Logstash:

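- Edit the pipeline configuration file:

```bash
sudo nano /etc/logstash/conf.d/logstash.conf
```

- Example configuration:

```conf
input {
  # Listen for events shipped by Beats agents such as Filebeat
  beats {
    port => 5044
  }
}

filter {
  # Parse Apache combined-format access log lines into structured fields
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  # Send the parsed events to a local Elasticsearch node
  elasticsearch {
    hosts => ["http://localhost:9200"]
  }
}
```

6. Start Logstash: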

```bash
sudo systemctl start logstash
sudo systemctl enable logstash
```

7. Test Logstash:

- Send sample data to the configured input and check Elasticsearch or other output destinations for processed logs.

Basic Tutorials of Logstash: Getting Started

1. Creating a Simple Pipeline:

- Define an input (e.g., reading logs from a file), apply a filter (e.g., parsing logs with grok), and set an output (e.g., sending logs to Elasticsearch).
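A pipeline along those lines might look like the sketch below. It assumes an Apache access log at `/var/log/apache2/access.log` (a typical Debian/Ubuntu location, so adjust it to your system) and an unsecured Elasticsearch node on localhost, matching the example configuration from the installation steps.

```conf
input {
  file {
    # Assumed log location; the user running Logstash needs read access to it
    path => "/var/log/apache2/access.log"
    # Read the file from the top the first time it is seen
    start_position => "beginning"
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
  # Use the request time from the log line as the event timestamp
  date {
    match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
  }
}
```

The date filter is optional. Without it, events are stamped with the time Logstash read them rather than the time of the original request.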

2. Using the Grok Filter:

- Use grok patterns to extract meaningful data from log entries:

```conf
filter {
  grok {
    match => { "message" => "%{COMMONAPACHELOG}" }
  }
}
```

3. Testing Pipelines:

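- Test pipelines locally. The first command below checks the configuration file for syntax errors and exits. The second feeds one test event through a throwaway stdin-to-stdout pipeline, using a separate data directory so it does not clash with a running Logstash service. Piped input is only read by a pipeline that has a stdin input, so piping into the Beats-based example configuration would have no effect:

```bash
sudo /usr/share/logstash/bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/logstash.conf --config.test_and_exit
echo 'Test log entry' | /usr/share/logstash/bin/logstash --path.data /tmp/logstash-test -e 'input { stdin { } } output { stdout { codec => rubydebug } }'
```

4. Handling Multiple Pipelines: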

- Configure multiple pipelines in `/etc/logstash/pipelines.yml` for processing different data streams.

5. Integrating with Beats:

- Use Filebeat to ship logs to Logstash:

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/*.log

output.logstash:
  hosts: ["localhost:5044"]
```

6. Monitoring Logstash:

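- Enable monitoring features to track pipeline performance and troubleshoot bottlenecks. For example, the Logstash monitoring API (bound to localhost:9600 by default) exposes per-pipeline event counts and timings under `/_node/stats/pipelines`.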