Source: techxplore.com

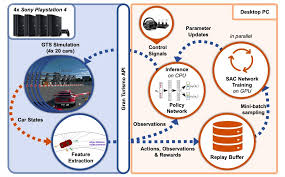

Overview of the system devised by the researchers. Credit: Fuchs et al.

Over the past few decades, research teams worldwide have developed machine learning and deep learning techniques that can achieve human-comparable performance on a variety of tasks. Some of these models were also trained to play well-known board games and video games, such as the ancient Chinese game Go or Atari arcade games, in order to further assess their capabilities and performance.

Researchers at the University of Zurich and SONY AI Zurich have recently tested the performance of a deep reinforcement learning-based approach that was trained to play Gran Turismo Sport, the renowned car racing video game developed by Polyphony Digital and published by Sony Interactive Entertainment. Their findings, presented in a paper pre-published on arXiv, further highlight the potential of deep learning techniques for controlling cars in simulated environments.

“Autonomous driving at high speed is a challenging task that requires generating fast and precise actions even when the vehicle is approaching its physical limits,” Yunlong Song, one of the researchers who carried out the study, told TechXplore. “Autonomous car racing, where the goal is to complete a given course in minimal time, features some of these difficulties of controlling a car close to its physical limitations. To address these challenges and advance the frontier, we consider the task of autonomous car racing in the top-selling car racing game Gran Turismo Sport, which is known for its detailed physics simulation of various cars and tracks.”

The key objective of the recent study carried out by Song and his colleagues was to develop an artificial neural network (ANN)-based controller that can autonomously drive a race car around a simulated track, without requiring any prior knowledge of the car’s dynamics. To perform well at Gran Turismo Sport, the controller should minimize the time it takes to complete a given track.

To achieve their goal, the researchers first defined a reward function that formulated the ‘racing problem’ as a minimum-time problem and outlined a neural network policy that directly mapped input observations to car control commands. Subsequently, they trained the network’s parameters using reinforcement learning, maximizing the reward the model received for performing well.
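The two ingredients described above can be illustrated with a minimal Python sketch. It is not the researchers' implementation: the article does not give their exact reward or network, so the progress-based reward, the wall-contact penalty, the `wall_penalty` value, and the tiny linear policy below are all assumptions chosen for illustration. The idea is that if each time step is rewarded with the distance gained along the track, then collecting as much reward as possible in a fixed number of steps amounts to covering the track in as little time as possible.

```python
import math


def progress_reward(prev_progress_m, curr_progress_m, wall_contact,
                    speed_mps, wall_penalty=0.01):
    """Hypothetical per-step reward for a minimum-time racing task.

    The agent earns the distance (in metres) it advanced along the track
    since the previous step. The penalty term for touching a wall and its
    coefficient are illustrative assumptions, not values from the study.
    """
    reward = curr_progress_m - prev_progress_m
    if wall_contact:
        # Assumed penalty that grows with speed, discouraging wall riding.
        reward -= wall_penalty * speed_mps ** 2
    return reward


def toy_policy(observation, weights):
    """Toy stand-in for a neural network policy.

    Maps an observation vector directly to two control commands,
    (steering, throttle), each squashed into [-1, 1] with tanh.
    A real policy would be a multi-layer network trained with
    reinforcement learning; this single linear layer only shows the
    observation-to-command mapping.
    """
    steering = math.tanh(sum(w * o for w, o in zip(weights[0], observation)))
    throttle = math.tanh(sum(w * o for w, o in zip(weights[1], observation)))
    return steering, throttle


if __name__ == "__main__":
    # Hypothetical observation: [speed, heading error, distance to track edge]
    obs = [0.8, -0.1, 0.5]
    weights = [[0.0, -2.0, 0.3], [1.0, 0.0, 0.5]]
    print(toy_policy(obs, weights))
    print(progress_reward(100.0, 103.5, False, 50.0))
```

In this sketch, a reinforcement learning algorithm would adjust `weights` so that the summed `progress_reward` over a lap is as large as possible.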

The researchers trained their neural network-based controller on trials of Gran Turismo Sport, running the game on four PlayStation 4 game consoles and a desktop PC. Remarkably, after less than 73 hours of training, their model had already achieved super-human performance.

“Different from classical state estimation, trajectory planning and optimal control methods, our approach does not rely on human intervention, human expert data, or explicit path planning,” Song said. “We found that it could generate trajectories that are qualitatively similar to those chosen by the best human players, while outperforming the best known human lap times in all three of our reference settings, including two different cars on two different tracks.”

The study shows that deep learning-based approaches can also achieve super-human performance on games that require continuous control, rather than a sequence of discrete, strategic moves like those required to win at Go, the game mastered by AlphaGo. This further highlights the potential of machine learning techniques, particularly those trained using reinforcement learning, for solving complex problems that are difficult or impossible to solve using classical computational approaches.

To get a better sense of how well their approach performed, Song and his colleagues interviewed an expert human Gran Turismo Sport player who achieved top performance at several national and international video game competitions. They asked this player, who requested anonymity, to compete with their neural network-based model and give his opinion about the model’s driving style.

After he completed a few trials, the player said, “The policy drives very aggressively, but I think this is only possible through its precise actions. I could technically also drive the same trajectory, but in 999 out of 1000 cases, me trying that trajectory results in wall contact, which destroys my whole lap time, and I would have to start a new lap from scratch.”

This player’s opinion confirms the super-human capabilities of the model trained by the researchers. More specifically, it highlights the high level of precision that the model can achieve and its ability to consistently execute optimal actions, even in scenarios where these actions are risky and might lead a human player to push their car off-track.

“We are now planning to develop more general AI agents that can race in all kinds of tracks with different car models,” Song said. “We are also working on solving autonomous car overtaking problems, in which the agent has to learn how to overtake its opponents while driving at high speed, and without collisions.”