Source – channelnewsasia.com

University of Washington professor Pedro Domingos shot to prominence after his book was seen on Chinese President Xi Jinping’s bookshelf during the leader’s annual New Year’s Day greetings this year.

SINGAPORE: Artificial intelligence: two words that have been bandied about everywhere, seemingly to give anything a shimmer of technological stardust.

Looking for a washing machine? Choose the latest AI-enabled machine to better clean your clothes. Seeking help from your service provider? There’s an AI chatbot waiting to answer all your queries. Or so it seems.

Yet, the buzzword also throws up questions for many. Does artificial intelligence mean robots? Is it going to take away my job? Is it going to take over humanity?

This is where Mr Pedro Domingos comes in. A professor in the University of Washington’s Computer Science and Engineering department, he is widely known as a thought leader in the field. His book, The Master Algorithm, was even seen on the bookshelf of Chinese President Xi Jinping, a big proponent of AI, at the start of the year.

On Tuesday (Jul 17), Channel NewsAsia spoke to Mr Domingos, who was in town at the invitation of StarHub, to put some of the more common AI misconceptions to bed and to get his views on whether Skynet is, indeed, coming.

Q: What is AI, and how would you explain it to a five-year-old?

Domingos: AI is getting machines to do things that traditionally need human intelligence.

Things like reasoning, problem solving, common sense, knowledge, understanding what you see, understanding speech and language, and learning. Computers traditionally couldn’t do these, and getting them to do so is what AI is all about.

For a five-year-old, it would be like a toy they can play with, like another child. Remember those Sony Aibo dogs? They didn’t have a lot of AI, but they were entertaining to children. Now imagine an AI-enabled “dog” that was more like a real dog: that’s the kind of thing we want to do with AI.

Q: AI equals robots. Is this correct?

Domingos: They are related, but they are different. A robot is a machine, and the brain of that machine is the computer. AI is to a robot what the brain is to your body.

Q: Will these AI-powered robots snatch away our jobs?

Domingos: I think AI and robots will cause a lot of changes in the job market. Some jobs might disappear, but I think a lot more jobs will appear than disappear. This has always been the case with automation.

What jobs are at risk, you may ask? A truck driver, for example. If there are self-driving trucks, then truck drivers might lose their jobs. I think in the short term we will have self-driving trucks on the freeway, but in the cities there will still be truck drivers. What it will do is alleviate the shortage of truck drivers. But in the long run, truck driving as a job will disappear.

Having said that, when ATMs were introduced, people thought they would put bank tellers out of work. Right? Because they did the same job. But there are actually more bank tellers today than there were before ATMs.

What happened is that bank tellers now do all sorts of things besides handing out cash, and I think the same will be true of AI. People will just be doing very different things from what they do today, because those things have become economically feasible.

Q: AI is powering the use of facial recognition in countries like China and, with it, raising the spectre of Big Brother societies. Will we soon be living in a Minority Report-like world?

Domingos: There is that danger. AI is an amazing tool for an authoritarian regime, and facial recognition is an example. I think if you want to use AI for oppressive purposes like controlling your population, there are definitely a lot of opportunities to do that.

But AI can also be used by the people to give themselves more power. AI is like any technology: it gives power to those who have it. So the question is, who is going to have it? Is it governments, is it large companies, or is it all of us?

I think it should be all of us. But before we, as citizens, as individuals and as professionals, can take advantage of AI and use it for our own ends, we have to understand what it does. Not in fine detail; it’s like knowing how to drive a car. You don’t have to understand how the engine works, but you need to understand how the steering wheel and the pedals work.

One thing people should be aware of is that these days, every time they interact with anything online, chances are there is an algorithm learning what they do, what they like and how they behave. You should realise that you’re teaching the system every time you use it.

For example, if you’re on Netflix and you choose a movie, you should realise that tomorrow it is going to recommend similar movies.

However, people should make demands of tech companies, Amazon for example, and be able to say: “Don’t show me more watches, because I just bought one.” Right now we can’t do that, not because the AI isn’t capable, but because the tech companies don’t provide the option.

Users should demand a higher level of control from all these AI systems than they have right now.

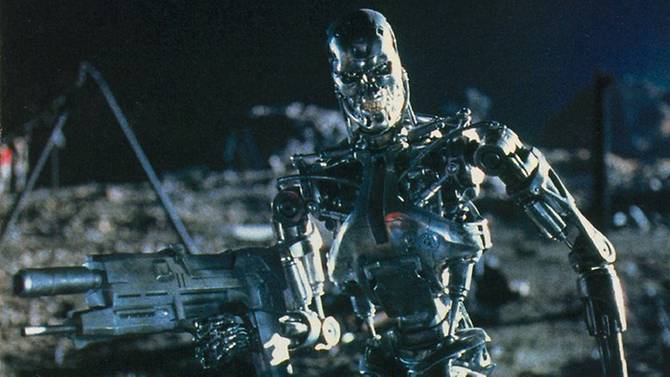

Q: Going a step further, will robots control us, as in the Skynet scenario of the Terminator movies?

Domingos: I think Skynet is not going to happen and we’re not going to be ruled by robots.

I think it is interesting to understand why it’s so prominent in people’s minds, though, beyond the fact that it makes a good story and we’ve seen it in Terminator.

I think it’s very unlikely that machines will spontaneously turn evil and decide to exterminate us, because machines, no matter how intelligent they are, only use their intelligence to achieve the goals we set for them. So as long as we set those goals and constraints, and check the results, it’s very unlikely that the machines will get out of control.

Having said that, there can be bad actors who decide to program machines for bad purposes, and those we do have to worry about. Criminals or authoritarian governments: these could all lead to bad uses of AI.

The other danger we have to worry about is that we increasingly put control of important things in the hands of AI, but they (the machines) are not that smart. They make mistakes. They have no common sense. They take you too literally. They give you what they think you want instead of what you really want.

This goes back to the Skynet scenario: as soon as we see anything that exhibits even a small amount of intelligence, we immediately project onto it human qualities it doesn’t have, like free will and consciousness, because the only intelligence we know is our own. Machines don’t have any of that. They are just problem-solving engines.

So people worry that computers will get too smart and take over the world, but the real problem is that they are too stupid. And they have already taken over the world.