Source: tmc.edu

When the X-ray was discovered at the end of the 19th century, a new medical discipline was born. Radiology became a way to study, diagnose and treat disease. Today, expertise among radiologists, radiation oncologists, nuclear medicine physicians, medical physicists and technicians includes many forms of medical imaging—from diagnostic and cancer imaging to mammography, radiation therapy, ultrasound, computed tomography (CT) and magnetic resonance imaging (MRI).

As we move into the third decade of the 21st century, radiology—perhaps more than any other medical speciality—is poised for transformation. Thanks to artificial intelligence (AI), radiologists foresee a future in which machines enhance patient outcomes and avoid misdiagnosis.

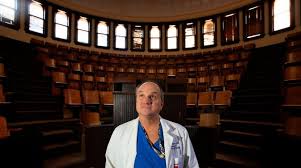

“In the old days, X-rays were very shadowy, very difficult to interpret. They required a lot of expertise. Nowadays, with MRIs, you can see the anatomy really, really well. So now, the next step from that—which is a big jump—is artificial intelligence,” said Eric Walser, M.D., chairman of radiology at The University of Texas Medical Branch (UTMB) at Galveston.

Early machine learning used information from a few cases to teach computers basic tasks, like identifying the human anatomy. Today, AI can distinguish patterns and irregularities in large collections of data, which makes radiology an ideal application. Software can draw from millions of images and make diagnoses with speed and accuracy.

“Ultimately, what you would want out of an AI algorithm is value,” said Eric M. Rohren, M.D., Ph.D., professor and chair of radiology at Baylor College of Medicine and radiology service line chief for Baylor St. Luke’s Medical Center. “It’s pretty clear that the algorithms can do some pretty amazing things. They can detect abnormalities with a high degree of precision. They can see what, perhaps, the human eye cannot see. What’s not known is: What is the value of that in a health care system? Are you truly improving patient outcomes and patient care by introducing a particular algorithm into your practice? Does it have measurable impact in terms of better patient experience, hospital stay and better outcomes following surgery?”

To that end, Baylor College of Medicine has created an internal library of all imaging data from the last decade.

“It’s being put into a research archive so that investigators can come in, pull that data, be able to research that data, develop their own algorithms and link it with the electronic medical records to see pathology and laboratory values and outcomes for those patients,” Rohren said. “Computers, since they don’t have the level of intuition that we have as humans, they really need to be trained in a systematic fashion off a data set that’s been developed specifically for them to learn. That data set can be tens of thousands of examinations in order for the algorithm to be able to determine prospectively and predictively what it’s going to do when it’s faced with a scan it’s never seen before.”

The American College of Radiology joined the AI revolution by creating its Data Science Institute that aims to “develop an AI ecosystem beyond single institutions,” according to the institute’s 2019 annual report.

Houston as a hub

The region dubbed “Silicon Bayou” for its innovation ecosystem is becoming a hot spot for artificial intelligence ventures.

Houston was ranked among the world’s top large cities prepared for artificial intelligence, according to the Global Cities’ AI Readiness Index released in September 2019. The city ranked No. 9 among places with metro area populations of 5 million to 10 million residents.

The report was based on a survey conducted by the Oliver Wyman Forum, part of the Oliver Wyman management consulting firm that tracks how well major cities are prepared to “adapt and thrive in the coming age of AI.”

Researchers at The University of Texas Health Science Center at Houston (UTHealth) have demonstrated that distinction by building an AI platform called DeepSymNet that has been trained to evaluate data from patients who suffered strokes or had similar symptoms.

A team including Sunil Sheth, M.D., an assistant professor of neurology at UTHealth’s McGovern Medical School, and Luca Giancardo, Ph.D., an assistant professor at UTHealth’s School of Biomedical Informatics, created an algorithm to assist doctors outside of major stroke treatment facilities with diagnoses. The work was published online in September in the journal Stroke.

The project started because of difficulties identifying patients who could benefit from an endovascular procedure that opens blocked blood vessels in the brain, a common cause of stroke.

“It’s one of the most effective treatments we can render to patients. It takes them from having severe disability to sometimes almost completely back to normal,” said Sheth, who practices as a vascular neurologist with Memorial Hermann Health System. “The challenge is that we don’t know who will benefit from the treatment.”

Finding out depends on advanced imaging techniques that are not available at most community hospitals, the first stop for the vast majority of stroke patients.

“What we are trying to do, in using Dr. Giancardo’s software, is to see if we could generate the same type of results that we get with advanced imaging techniques but with the type of imaging that we already do routinely in stroke in the less-advanced centers,” Sheth said. “The purpose of this software is that—no matter what hospital you show up at—you can get the same type of advanced evaluation and all of the information you need to make a treatment decision.”

At Memorial Hermann-Texas Medical Center, patients have access to advanced technology and undergo standard imaging called a CT angiogram along with a CT perfusion, which is used to decide if someone would benefit from an endovascular procedure to remove a blood clot.

“We took patients who had both—the CT angiogram, which can be done at any hospital, and the CT perfusion imaging—and then we sent that into Dr. Giancardo’s software. What that essentially did is trained the algorithm to take the CT images and to generate the type of output that the CT perfusion was telling us,” Sheth said. “Then we tested it. Here are a bunch of patients that you’ve never seen before. How good are you at predicting what the CT perfusion is going to say? And that’s what we did in our paper and showed that it did a very good job.”
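The train-then-test procedure Sheth describes follows a common pattern: fit a model on patients with paired data, then score it only on patients it has never seen. The sketch below illustrates that pattern, not DeepSymNet itself. The single numeric feature, the synthetic labels and the threshold "model" are all made-up stand-ins, and the function names are hypothetical.

```python
import random

def split_patients(patient_ids, test_fraction=0.25, seed=0):
    """Shuffle patient IDs and hold out a fraction the model never trains on."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    n_test = max(1, int(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]  # (train, test)

def fit_threshold(features, labels):
    """Toy stand-in for training: midpoint between the two class means."""
    pos = [x for x, y in zip(features, labels) if y == 1]
    neg = [x for x, y in zip(features, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, features, labels):
    """Fraction of cases where the thresholded feature matches the label."""
    preds = [1 if x >= threshold else 0 for x in features]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

# Synthetic data, purely illustrative: 40 hypothetical patients.
# label stands in for the perfusion-derived reference result,
# feature stands in for something computed from routine imaging.
rng = random.Random(42)
label = {pid: pid % 2 for pid in range(40)}
feature = {pid: label[pid] + rng.gauss(0, 0.3) for pid in range(40)}

train_ids, test_ids = split_patients(range(40))
threshold = fit_threshold([feature[p] for p in train_ids],
                          [label[p] for p in train_ids])
held_out_accuracy = accuracy(threshold,
                             [feature[p] for p in test_ids],
                             [label[p] for p in test_ids])
print(f"held-out accuracy: {held_out_accuracy:.2f}")
```

Splitting by patient rather than by image keeps any one person's scans from appearing on both sides of the split, which would otherwise make the held-out score look better than it is.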

The study included more than 200 images from a single hospital. The technology hasn’t been implemented clinically.

“The main benefit to a patient is that a lot of hospitals from other nations have the basic imaging, but not more advanced capabilities. So, the approach is that they could get the same information with the infrastructure that is already there,” Giancardo said.

Replacing the radiologist?

The AI disruption in radiology may be predictive of what’s to come in other areas of medicine. But does AI mean that the demand for radiologists will decline?

Walser, UTMB’s radiology chairman, thinks so.

“There will be fewer of us probably needed,” he said. “Radiologists are going to become more the managers of the data rather than the creators of the diagnoses.”

Sheth, the UTHealth neurologist, views AI and radiology as “decision support” for the specialists.

“I don’t think we will ever be at the point where we can say ‘Do x, y and z to this patient because Dr. Giancardo’s software told us to.’ This is going to be something that will help all of the physicians taking care of the patients—the ER doc, the neurologist, the radiologist—make treatment decisions with better data,” he said.

Rohren, the Baylor radiology chair, agrees that AI will improve decision-making without replacing the physicians who interpret imaging.

“It will make our jobs and roles a little different than they are now, but it will absolutely not put radiologists out of business,” he said. “Machines and machine learning are very good at information handling, but they are very poor at making judgments based on that information. … The radiologist will continue to be critical.”

Yet Rohren anticipates a transformed discipline.

“I think radiology in the future will be a different profession than it is today,” he said. “The radiologist has the potential to be the curator and the purveyor of a large amount of data and information that, given the limitations of human brain power and information systems, we just don’t have access to today. But with AI working alongside the radiologist, I think the radiologist is in the position to be at the hub of a lot of health care.”

Innovation in the TMC

Optellum, a United Kingdom-based company, brought its AI software for lung cancer diagnosis and treatment to the TMC Innovation Institute this year and spent several months in the TMCx accelerator. The product identifies patients at risk for lung cancer, expedites optimal therapy for those with cancer and reduces intervention for millions who do not need treatment.

Company founder and CEO Vaclav Potesil, Ph.D., said he decided to focus on lung cancer after losing an aunt within a year of her Stage 4 diagnosis. She never smoked.

“I’ve seen firsthand how very healthy people can be killed and it’s still the most common and deadliest cancer worldwide,” he said. “We are really focused on enabling cancer patients to be diagnosed at the earliest possible stage and be cured. It’s not just the modeled data on the computer. It’s addressing the right clinical problems to add value to doctors.”

Potesil noted that two cancerous growths on the left lung of U.S. Supreme Court Associate Justice Ruth Bader Ginsburg were discovered early because of tests on her broken ribs, which were examined after she fell in 2018.

UTMB will soon start a new AI radiology project that also aids in early detection, Walser said.

“The computer scans every X-ray from the ICU and if they see something that looks like it might be a problem, they pop it up to the top of my list, so I look at that one first,” he said. “In other words, if there are 200 chest X-rays from Jennie [Sealy Hospital] and there’s one that’s a pneumothorax [collapsed lung] that could kill a patient and it’s all the way down on the bottom of my stack because the last name is Zimmerman, the computer will push it up to the top for me. That’s our first foray into AI.”
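The reordering Walser describes amounts to sorting a reading list by urgency instead of by name. The sketch below shows one way that could work. It is not UTMB's system: the `Study` record, the `suspicion_score` field and the 0.5 threshold are hypothetical, standing in for whatever a detection model would actually report.

```python
from dataclasses import dataclass

@dataclass
class Study:
    patient_last_name: str
    suspicion_score: float  # hypothetical model output between 0 and 1

def prioritize(worklist, threshold=0.5):
    """Move flagged studies to the top, most suspicious first.

    Studies below the threshold keep their original relative order.
    """
    flagged = [s for s in worklist if s.suspicion_score >= threshold]
    routine = [s for s in worklist if s.suspicion_score < threshold]
    flagged.sort(key=lambda s: s.suspicion_score, reverse=True)
    return flagged + routine

# An alphabetical stack in which the urgent case sits at the bottom.
worklist = [
    Study("Adams", 0.02),
    Study("Baker", 0.10),
    Study("Zimmerman", 0.97),
]
reordered = prioritize(worklist)
print([s.patient_last_name for s in reordered])
# prints ['Zimmerman', 'Adams', 'Baker']
```

Nothing is removed from the list, so the radiologist still reads every study. Only the order changes.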

Baylor College of Medicine and Baylor St. Luke’s Medical Center researchers have explored using algorithms to interpret breast lesions on mammograms, improve cancer detection with breast MRIs and predict which sinusitis patients might benefit most from surgery, Rohren said.

He hopes for an AI collaboration among institutions in Houston’s medical city.

“I personally believe the Texas Medical Center could become the international hub of AI—not only for radiology, but for any aspect of medicine,” Rohren said. “We have so many elite health care institutions sitting right here right next door to each other. We have outstanding undergraduate universities right here in the city with data scientists and engineers. We have all the components to be able to develop a program. What it will require is for us all to work together.”