Source – wired.com

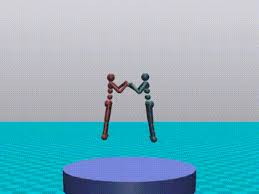

The graphics are not dazzling, but a simple sumo-wrestling videogame released Wednesday might help make artificial-intelligence software much smarter.

Robots that battle inside the virtual world of RoboSumo are controlled by machine-learning software, not humans. Unlike computer characters in typical videogames, they weren’t pre-programmed to wrestle; instead they had to “learn” the sport by trial and error. The game was created by nonprofit research lab OpenAI, cofounded by Elon Musk, to show how forcing AI systems to compete can spur them to become more intelligent.

Igor Mordatch, a researcher at OpenAI, says such competitions create a kind of intelligence arms race, as AI agents confront complex, changing conditions posed by their opponents. That might help learning software pick up tricky skills valuable for controlling robots, and other real-world tasks.

In OpenAI’s experiments, simple humanoid robots entered the arena without knowing even how to walk. They were equipped with the ability to learn through trial and error and given two goals: learning to move around and beating their opponent. After about a billion rounds of experimentation, the robots developed strategies such as squatting to make themselves more stable, and tricking an opponent into falling out of the ring. The researchers developed new learning algorithms to enable players to adapt their strategies during a bout, and even anticipate when an opponent may change tactics.

OpenAI’s project is an example of how AI researchers are trying to escape the limitations of the most heavily used variety of machine-learning software, which gains new skills by processing a vast quantity of labeled example data. That approach has fueled recent progress in areas such as translation, and voice and face recognition. But it’s not practical for more complex skills that would allow AI to be more widely applied, for example by controlling domestic robots.

One possible route to more skillful AI is reinforcement learning, where software uses trial and error to work toward a particular goal. That’s how DeepMind, the London-based AI startup acquired by Google, got software to master Atari games. The technique is now being used to have software take on more complex problems, such as having robots pick up objects.
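
For readers who want to see what "trial and error" means in practice, here is a minimal sketch of the idea, not the method DeepMind or OpenAI used. It assumes a made-up toy task: three slot-machine levers that pay out with different hidden probabilities. The function name `run_bandit`, the payout probabilities, and the `epsilon` exploration rate are all illustrative assumptions. The software mostly pulls the lever it currently believes is best, occasionally tries another at random, and updates its estimates from the rewards it sees.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error on a toy slot-machine task (hypothetical)."""
    rng = random.Random(seed)
    n = len(reward_probs)
    counts = [0] * n      # how many times each lever has been pulled
    values = [0.0] * n    # running estimate of each lever's average reward
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                        # explore: try a random lever
        else:
            action = max(range(n), key=lambda a: values[a])  # exploit: best estimate so far
        # The environment pays out 1 with the lever's hidden probability, else 0.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values

estimates = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print("lever the software settled on:", best_action)
print("estimated payout rates:", [round(v, 2) for v in estimates])
```

Nothing tells the software which lever is best; it works that out from rewards alone. Systems that play Atari games or control simulated robots face far larger sets of situations and actions, but the loop of acting, observing a reward, and adjusting is the same in spirit.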

OpenAI’s researchers built RoboSumo because they think the extra complexity generated by competition could allow faster progress than just giving reinforcement learning software more complex problems to solve alone. “When you interact with other agents you have to adapt; if you don’t you’ll lose,” says Maruan Al-Shedivat, a grad student at Carnegie Mellon University, who worked on RoboSumo during an internship at OpenAI.

OpenAI’s researchers have also tested that idea with spider-like robots, and in other games, such as a simple soccer penalty shootout. The nonprofit has released two research papers on its work with competing AI agents, along with code for RoboSumo, some other games, and several expert players.

Sumo wrestling might not be the most vital thing smarter machines could do for us. But some of OpenAI’s experiments suggest skills learned in one virtual arena transfer to other situations. When a humanoid was transported from the sumo ring to a virtual world with strong winds, the robot braced to remain upright. That suggests it had learned to control its body and balance in a generalized way.

Transferring skills from a virtual world into the real one is a whole different challenge. Peter Stone, a professor at the University of Texas at Austin, says control systems that work in a virtual environment typically don’t work when put into a physical robot—an unsolved problem dubbed the “reality gap.”

OpenAI has researchers working on that problem, although it hasn’t announced any breakthroughs. Meantime, Mordatch would like to give his virtual humanoids the drive to do more than just compete. He’s thinking about a full soccer game, where agents would have to collaborate, too.