Source: forbes.com

Machine learning is one of the cornerstones of artificial intelligence. If systems can’t learn, they can’t adapt or apply knowledge from one domain to another. And yet, machine learning is only one component of the overall goals of AI. Beyond machine learning, we still need ways to interact with the external environment, reason about what has been learned, and set goals that drive higher-order aspects of intelligence. Whatever machine learning’s place in the larger context of AI, researchers and enterprises are still grappling with how to make ML work across the broad range of scenarios and applications where they want to use it.

There are three main types of machine learning approaches: supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, machines learn by example: they are fed many good-quality labeled examples to learn from (“training”) and build a model that can generalize to similar tasks. In unsupervised learning, machines automatically find meaningful patterns and groupings in data and build a model from the patterns they discover. Reinforcement learning takes a different approach. Rather than being given good examples or discovering patterns on their own, reinforcement learning (RL) systems are given a final goal and learn through trial and error, discovering the optimal solution or best path to that goal.
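To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, one common RL algorithm. It assumes a hypothetical toy environment: a six-cell corridor where the agent is told nothing except that reaching the last cell earns a reward of 1. The names `step`, `choose_action`, `train`, and `greedy_policy`, and all parameter values, are illustrative choices for this sketch, not taken from any particular system.

```python
import random

# Hypothetical toy environment: a corridor of N_STATES cells.
# The agent starts in cell 0; the only reward (+1) comes from reaching the last cell.
N_STATES = 6
GOAL = N_STATES - 1
ACTIONS = (-1, 1)  # move left, move right


def step(state, action):
    """Apply an action; walls clamp movement. Returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: explore at random sometimes, otherwise exploit (ties broken randomly)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning: learn action values purely from trial and error."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted value of the best next action.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action in each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]


print(greedy_policy(train()))
```

The agent is never shown a correct path; the single goal reward propagates backward through the table over repeated episodes until "move right" scores highest in every cell. Random tie-breaking matters here: without it, the all-zero starting table would always pick the same action and the agent could take a very long time to stumble onto the goal.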

Reinforcement learning has generally been applied to games and puzzles. From early AI applications in checkers and chess to more recent RL-based systems that have learned to play some of the most difficult games, such as Go, DOTA, and other multiplayer games, RL has shown significant strength on some of the hardest challenges posed to AI. Despite this promise, RL approaches are not as widely implemented in the enterprise as supervised or unsupervised learning. Companies find task-oriented supervised learning approaches well suited to the recognition, conversation, and predictive analytics patterns of AI, and data-oriented unsupervised learning approaches well suited to the pattern and anomaly discovery and hyperpersonalization patterns. That leaves goal-oriented RL approaches for the autonomous systems and goal-driven solutions patterns.

Despite RL’s lower enterprise profile, it has a high profile in news and media. DeepMind, acquired by Google in 2014, has been making waves with its approach to Q-learning, using RL to beat top players at many competitive games. DeepMind sees RL as a gateway to solving many general problems, and possibly as the algorithmic route to Artificial General Intelligence (AGI): a single, generally applicable learning method. Whether that proves true remains to be seen, but it has certainly given people much to think about, with personalities like Elon Musk, Max Tegmark, and others warning about the imminent possibility of superintelligence.
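For readers unfamiliar with the term, Q-learning maintains an estimate $Q(s, a)$ of the long-term reward of taking action $a$ in state $s$. After each trial it nudges that estimate toward the reward $r$ just received plus the discounted value of the best action available in the next state $s'$. The standard tabular update is shown below; the learning rate $\alpha$ and discount factor $\gamma$ are conventional symbols, not defined in the original article. Deep Q-learning variants replace the table with a neural network that approximates $Q$.

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$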

While fears of an imminent machine takeover are most likely overwrought, RL is in reality being applied to much more mundane real-world activities such as resource optimization, planning, navigation, and scenario simulation.

Recently, Amazon has been making significant waves of its own in the RL space. At the AWS re:Invent 2018 conference in Las Vegas last year, Amazon unveiled the DeepRacer RL platform, along with a league in which individuals compete to develop RL algorithms that optimize an autonomous vehicle’s path around a controlled course. While this might seem a trivial application, Amazon has been at the forefront of applying RL to its own real-world scenarios.
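In DeepRacer, the developer’s main lever is the reward function: a Python function, `reward_function(params)`, that the training service calls at each step with the car’s current state and that returns a score. The sketch below is modeled on the “follow the center line” example in the AWS DeepRacer documentation. The `params` keys `track_width` and `distance_from_center` come from that service’s input set; the band widths and reward values are illustrative choices, not recommended settings.

```python
def reward_function(params):
    """Reward the car for staying close to the center line of the track."""
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Three bands around the center line, as fractions of the track width.
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    if distance_from_center <= marker_1:
        reward = 1.0
    elif distance_from_center <= marker_2:
        reward = 0.5
    elif distance_from_center <= marker_3:
        reward = 0.1
    else:
        reward = 1e-3  # likely off track: near-zero reward

    return float(reward)
```

The RL algorithm never sees a “correct” driving line; it only sees these scores and learns, by trial and error, which steering and speed choices tend to earn higher cumulative reward.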

The company applies RL in combination with other ML methods to optimize its warehouse and logistics operations and to assist with automation in its various fulfillment facilities. It has also applied RL to supply chain optimization problems and to discovering optimal delivery paths.

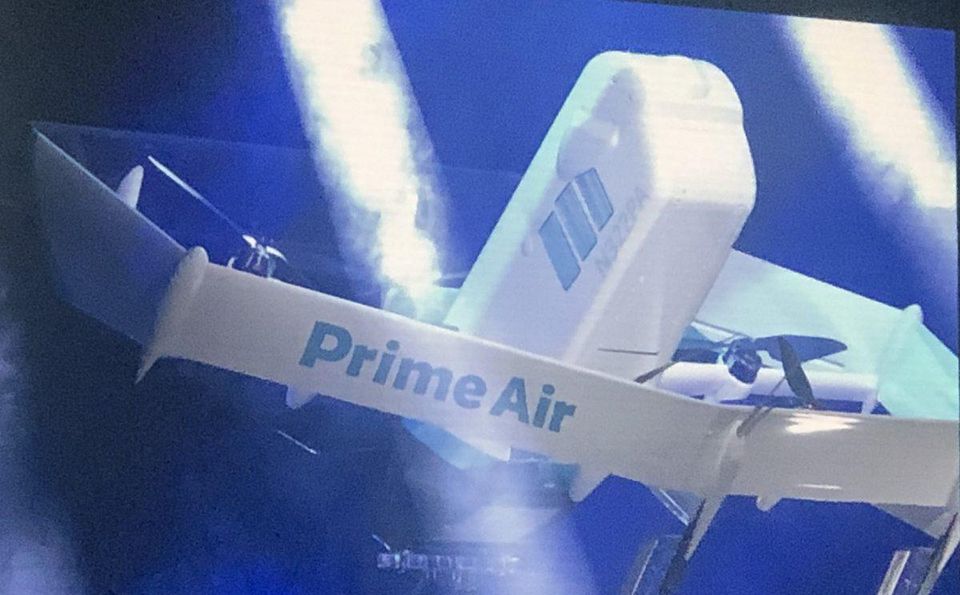

More interestingly, the company has applied RL and other ML approaches to help create the latest iteration of its autonomous delivery drone. The drone was unveiled at Amazon’s re:MARS 2019 conference, and Amazon will soon be shipping packages by drone to homes around the world, pending regulatory approvals and technological advancements. While RL might be used to control flight operations and discover paths, what is more compelling is that the company used these methods to design the drone itself. According to company sources, Amazon used machine learning to iterate on and simulate over 50,000 drone design configurations before choosing the optimal approach.

The newly designed drone can fly vertically and hover like a quadcopter, but can also fly forward much like an airplane. The system is outfitted with multiple redundant sensors and cameras to observe its surroundings and navigate around obstructions. The company showed a video in which the drone detected a clothesline stretched across the landing zone, something that has been particularly challenging for other autonomous drone and computer vision applications.

Amazon is making it clear that it believes RL should be a first-class participant in the ML portfolio that enterprises consider. And there’s no reason RL shouldn’t be implemented as widely as supervised and unsupervised forms of machine learning. Data scientists, machine learning developers, and even casual AI developers simply need more experience using and applying RL to the range of problems they are seeing. Once they gain confidence in those applications, there’s no doubt that we’ll start to see RL implemented more widely in enterprise and other environments. RL is not just for games anymore.