Source – religionandpolitics.org

The concept of artificial intelligence has been fuel for science fiction since at least 1920, when the Czech writer Karel Čapek published R.U.R., his play about a mutiny led by a throng of robots. Speculation about the future of intelligent machines has run rampant in the intervening decades but recently has taken a more critical turn. Artificial intelligence (AI) is no longer imaginary, and the implications of its future development are far-reaching. As computer scientists confirm their intent to push the limits of AI capabilities, religious communities and thinkers are also debating how far AI should go—and what should happen as it becomes part of the fabric of everyday life.

“Scientists want to be at the cutting edge of research, and they want the contribution to knowledge,” says Brendan Sweetman, chair of philosophy at Rockhurst University. “But at the same time, a lot of what they do raises moral questions.”

Artificial intelligence is already pervasive. It’s embedded in Apple’s Siri and Amazon’s Alexa, which are apps designed to answer questions (albeit in a limited way). It powers the code that translates Facebook posts into multiple languages. It’s part of the algorithm that allows Amazon to suggest products to specific users. The AI that is enmeshed in current technology is task-based, or “weak AI.” It is code written to help humans do specific jobs, using a machine as an intermediary; it’s intelligent because it can improve how it performs tasks by collecting data on its interactions. This often imperceptible process, known as machine learning, is what affords existing technologies the AI moniker.

The sensationalizing of AI is not a product of weak AI. It stems, instead, from a fear of “strong AI,” or what AI could someday become: artificial intelligence that is not task-based, but rather replicates human intelligence in a machine.

This strong AI, also known as artificial general intelligence (AGI), has not yet been achieved, but would, upon its arrival, require a rethinking of most qualities we associate with uniquely human life: consciousness, purpose, intelligence, the soul—in short, personhood. If a machine were to possess the ability to think like a human, or if a machine were able to make decisions autonomously, should it be considered a person?

Religious communities have a significant stake in this conversation. Various faiths hold strong opinions regarding creation and the soul. As artificial intelligence moves forward, some researchers are engaging in thought experiments to prepare for the future, and to consider how current technology should be utilized by religious groups in the meantime.

“The worst-case scenario is that we have two worlds: the technological world and the religious world.” So says Stephen Garner, author of an article on religion and technology, “Image-Bearing Cyborgs?” and head of the school of theology at Laidlaw College in New Zealand. Discouraging discourse between the two communities, he says, would prevent religion from contributing a necessary perspective to technological development—one that, if included, would augment human life and ultimately benefit religion. “If we created artificial intelligence and in doing so we somehow diminished personhood or community or our essential humanity in doing it, then I would say that’s a bad thing.” But, he says, if we can create artificial intelligence in such a way that allows people to live life more fully, it could bring them closer to God.

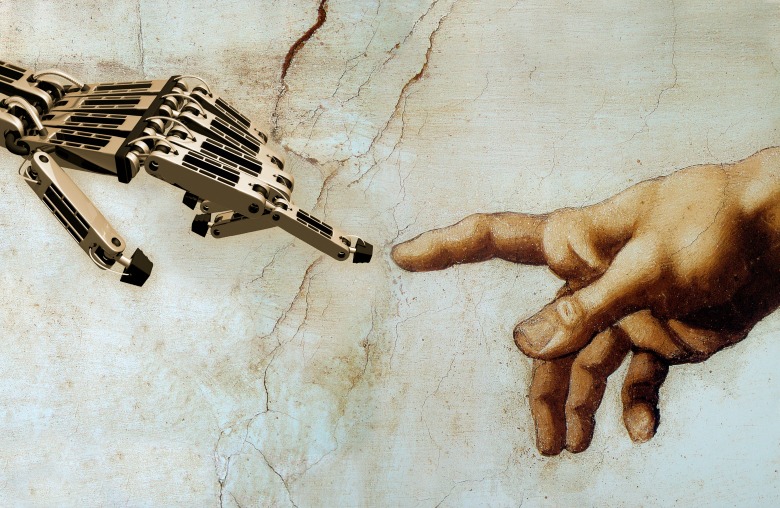

The personhood debate, for Christianity and Judaism in particular, originates with the theological term imago Dei, Latin for “image of God,” which connotes humans’ relationship to their divine creator. The biblical book of Genesis reads, “God created mankind in his own image.” From this theological point of view, being made in the divine image affords uniqueness to humans. Were people to create a machine imbued with human-like qualities, or personhood, some thinkers argue, these machines would also be made in the image of God—an understanding of imago Dei that could, in theory, challenge the claim that humans are the only beings on earth with a God-given purpose.

This technological development could also infringe on acts of creation that, according to many religious traditions, should only belong to a god. “We are not God,” Garner says. “We have, potentially, inherently within us, a vocation to create”—including, he says, by utilizing technology. Human creation, however, is necessarily limited. It’s the difference between a higher power creating out of nothing, and humans creating with the resources that are on earth.

Russell Bjork, a professor of computer science at Gordon College and graduate of Gordon-Conwell Theological Seminary and MIT, worries that creating a machine with strong artificial intelligence could quickly allow it to become an outlet for idolatry. It would be idolatrous, he says, to utilize strong AI as a method to defeat death and save the human race—predictions he feels scientists and futurists are wont to make. “To place one’s trust in anything other than God the creator is idolatry,” he says. “At that point it moves way beyond the realm of mirroring God’s creativity.”

Noreen Herzfeld, professor of theology and computer science at St. John’s University, further explained these issues in her paper, “Creating in Our Own Image: Artificial Intelligence and the Image of God.” She writes, “If we hope to find in AI that other with whom we can share our being and our responsibilities, then we will have created a stand-in for God in our own image.”

At this point, artificial intelligence is simply a tool for improving human experience. It can help us build cars, diagnose illnesses, and make financial decisions. It is easy to imagine a world in which our technology slowly becomes more and more intelligent, more and more self-aware. As weak AI evolves into strong AI, says James F. McGrath, author of “Robots, Rights and Religion” and a New Testament professor at Butler University, humanity will have grown accustomed to treating it like an object. Strong AI, though, is by definition human-like in intelligence and ability. Its development, he says, would force humans to reconsider how to appropriately interact with this technology: what rights the machines should be granted, for instance, if their intelligence earns them a designation beyond that of mere tools. “Are we going to be generous in our granting of rights in the absence of clear answers to these questions, or are we going to be stingy?” McGrath says. “Do we risk enslaving a sentient, self-aware entity, or do we say, ‘We’re going to do whatever it takes to make sure that that doesn’t happen even by accident’?”

The debate around this issue has produced significant anxiety in both pop culture and the scientific community. The concept of the singularity, popularized by Ray Kurzweil in his book The Singularity Is Near, illustrates the worry that the evolution of AI could go awry: It is, at its most basic, a fear that if we enlist machines to perform all of our tasks, transferring our knowledge to them, they will eventually become much more intelligent than humans and possibly decide to dominate us. Those in the world of computer science who believe this fear has legitimacy have created an organization, the Machine Intelligence Research Institute, bent on preventing it.

But beyond speculation, there are ethical questions that need answering now, says J. Nathan Matias, a visiting scholar at the MIT Media Lab. Matias is co-author of a forthcoming paper on the intersection of AI and religion. “AI systems are already being used today to determine who police are going to investigate,” he says. “They’re used today to do sting operations of people who are imagined as potential future domestic abusers or sexual predators. They’re being used to decide who is going to get [financial] credit or not, based upon anticipated future solvency.” Religious communities should participate in conversations regarding these dilemmas, he says, and should involve themselves in the application of the AI that exists today.

Matias also points to Facebook’s algorithms that recommend content to users, a form of weak AI. In this way, AI can help make a post go viral. When a heartbreaking story is popular online, it directly influences the flow of prayer and charity. “We already have these attention algorithms as a clear example of what are shaping the contours of things like prayer or charitable donations or the theological priorities of a community,” he says. Such algorithms, like those employed by Facebook, dictate the political news, true or not, that people see. Religious groups, then, have a keen interest in the development of artificial intelligence and its ethical implications.

The ties between religious thinkers and artificial intelligence developers should be made stronger, according to Lydia Manikonda, a PhD student at Arizona State University who is working, along with several other researchers, on the paper with Matias about religion and artificial intelligence. She says, “These systems are made by us. How are we going to teach these systems what is right and what is wrong?” Addressing the morality of machines in advance of the development of strong artificial intelligence would help prevent the kind of cataclysmic robot-dictatorship that abounds in science fiction. And because artificial intelligence, by definition, builds on itself, embedding ethical principles in code now is imperative to developing an ethical machine in the future.

Machines in possession of strong artificial intelligence would likely rattle the basic understanding of the role of religion. “Just as the printing press had a significant impact on religious scholarship, both positive and negative, so the internet and then finally AI—those too will have a significant impact on both the institutions of religion and the way religions understand themselves,” says Daniel Araya, a technological innovation researcher and policy analyst. “AI is now speculated about in the way people used to speculate on the nature of God. Could it replace religion on a whole?”

Randall Reed, an associate professor of religion at Appalachian State University, says imbuing a machine with the ability to be omniscient, omnipotent, and omnipresent would automatically create parallels to, and tensions with, monotheistic religions. “In the Christian tradition, we ascribe the big ‘omnis,’” he says, to God. “At least two, and maybe even three of those, become definitional of a superintelligent artificial intelligence.” With this type of AI, he says, “you have something that, through the internet, can be everywhere, can know everything, and has the ability to solve all the problems and understand them in ways that humans have never been able to do.”

Araya speculates that machines with strong artificial intelligence could become objects of worship in and of themselves: “There’d be religious movements that worship AI.” If a machine possessed cures for long-existing fatal diseases, knew how to improve education, and brought order to society, would humans idolize it?

The machines could, according to McGrath, develop their own sects or entirely new religions. A machine, he says, could think religion “doesn’t compute.” Or, if programmed to prioritize ethical concerns, it “might have a very different perspective. I can certainly imagine there being denominations that the AI develops.”

As a thought experiment, Reed raises the possibility that some branches of Christianity will try to convert machines with strong artificial intelligence to follow their God. And some scientists who are Christian, in developing this new technology, may try to go beyond imbuing it with general rules of ethics, instead coding it to work within a clearly defined set of Christian values.

Regardless, the overwhelming reaction to the development of a machine with strong AI is likely to be fear. “If you’re in a religious community that is incredibly suspicious of existing technologies or past technologies and their implications, then a technology like artificial intelligence would be incredibly disturbing—to some extent, seeing it as another Tower of Babel, another [instance of] humanity attempting to usurp God’s position in the world,” says Garner. According to Heidi A. Campbell, an associate professor of communication at Texas A&M University and Garner’s co-author for Networked Theology: Negotiating Faith in Digital Culture, Christian churches have typically been slow to adapt to new technologies, having held vigorous debate regarding the televising of sermons and the use of the internet in the recent past.

The resistance should give religious communities pause. As Christopher J. Benek, a pastor and Christian transhumanist, puts it, “We are quick to assume that AI will be so different from us. My experience with technology is that it usually is heightening who we are currently.” What communities should do now, in preparation for the eventual creation of a machine with strong AI, he says, is think about how the technology created reflects their values. For Benek, those values stem from emulating Jesus. How can technology advance those principles? Artificial intelligence may change rapidly, but religious and scientific communities already have tools to explore its ethical and moral limits. “Part of this is working on ourselves formationally,” Benek says, “to work on the technology we have right now.”