Source: dailymaverick.co.za

South Africa’s overall investment in artificial intelligence (AI) over the last decade has been significant, at around $1.6-billion to date. This investment has seen businesses experiment with a range of technologies, including chatbots, robotic process automation and advanced analytics.

Investment on this scale is a welcome reminder that fears about AI, automation and the impact of the Fourth Industrial Revolution (4IR) on the job market are sometimes overstated and alarmist. Microsoft, for example, is investing in a pair of data centres in South Africa that are expected to create 100,000 jobs, equip local workers with new skills, and provide essential infrastructure for the economic challenges and opportunities to come.

But there are dangers.

With scrutiny and hindsight, a failed AI project can often be traced to a gap between what was expected and what was actually delivered. This gap between expectation and reality comes from biases, and these biases take numerous forms. They can emerge at various stages of a project: they may appear to be absent at the outset, but surface as solutions age or are applied to projects other than those for which they were originally intended.

One of the most common biases is business-strategy bias: the belief that AI will solve a problem it is ill-suited to. Closely related is problem-statement bias, a misunderstanding of the kinds of use cases AI can address.

In both instances, missteps upfront have a cascading effect. Employ AI unnecessarily or misdirect its efforts, and other aspects of the business invariably suffer. But turning to AI can also have unexpected consequences, even when it’s arguably the right tool for the job. Look at Google, which found itself on the receiving end of internal dissent and protest over its work on Project Maven, a Pentagon initiative to harness AI for better-targeted drone strikes.

Or consider, more worryingly, the recent debacle Boeing has faced with its now-grounded 737 Max aircraft. The evidence is mounting that, rather than being a failure of AI, Boeing’s problems stem from a failure of leadership: the company’s executives rushed design and production and failed to institute appropriate checks, for fear that a competitor’s product would threaten the company’s revenue base.

With hindsight, of course, many of AI’s failings, especially those attributable to one sort of bias or another, seem obvious. Predicting the next big failure is far harder. If decision-makers want to anticipate problems rather than only respond to them after the fact, they need to understand the risks of AI and the causes that underpin them.

Data-based biases

Even when AI is suited to the task, other potential pitfalls exist. AI models are based on two things: sets of data, and how those sets are processed. If the datasets are incomplete or inaccurate, the algorithm is working from flawed raw materials and will inevitably produce inaccurate, false or otherwise problematic outcomes. This is called information bias.

Consider, for example, Russian interference in the 2016 US presidential election. Facebook’s business model relies on its ability to offer advertisers hyper-targeted advertising. The algorithms the social network uses to profile users and sell space to advertisers are constantly adjusted, but Facebook didn’t consider it necessary to look for links between Russian ad buyers, political advertisements and politically undecided voters in swing states.

Similarly, training chatbots on datasets such as Wikipedia or Google News content is unlikely to produce results as good as those one would expect if the source material were gleaned from actual human conversations. The way news articles and encyclopaedia entries are structured simply doesn’t account for the nuances and peculiarities of contemporary, text-based human conversation.

Closely linked to information bias is procedural bias and its sub-biases, which concern how an algorithm determines which data matters and how that data is chosen. If sampling, filtering or truncation of the data takes place, further room for error is introduced.

When analysing data, it’s essential that the correct methodology is used; getting this wrong is known as methodology bias. Applying the wrong algorithm leads to incorrect outcomes, and the choice of algorithm can itself be the result of personal preference or of other biases in the more conventional sense.

It’s also essential to ensure that datasets, and the way they’re handled, don’t put companies in other compromising positions. Global companies, for example, need to take care not to fall foul of new privacy regulations such as the European Union’s General Data Protection Regulation (GDPR) or California’s Consumer Privacy Act (CCPA). A dataset that is meant to consist exclusively of anonymised data, for instance, must not inadvertently include personally identifiable records.

A variant of methodology bias is cherry-picking, in which a person selects the data, the algorithm or a combination of the two precisely because they are the most likely to support a preconception or a pre-decided outcome. Accurate and useful outcomes depend on neutrality, and neutrality depends on people.

“We used to talk about garbage in, garbage out,” says Wendy Hall, a professor of computer science at the University of Southampton and co-author of a review into artificial intelligence commissioned by the UK government. “Now, with AI, we talk about bias in, bias out.” Hall says the way AI is designed is at least as important for curbing bias as monitoring the data it depends on.

Diversity and bias

The technology sector has an undeniable and well-documented problem with representation and diversity. Women and minorities are starkly underrepresented, and the resulting homogeneity of the industry means not only that the biases that stem from a lack of diversity are likely to find their way into AI, but also that spotting them once they are there, before they do any harm, will be difficult.

In a rapidly shifting cultural and legal landscape, diversity bias also risks causing companies reputational damage or leaving them open to costly litigation. Reputational damage might be harder to quantify than legal challenges, but its effects can reach much further, tarnishing a company for years to come.

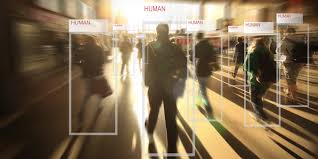

Examples of AI-based services for which a lack of diversity has proved problematic include facial recognition tools that have been shown to be grossly inaccurate for people with dark skin, crime-detection services that erroneously flag minorities more often than other subjects, and self-learning chatbots whose conversations with end-users rapidly descended into hate speech.

Broadly speaking, customers tend to be heterogeneous. Thus, AI systems built to address their needs must be similarly heterogeneous both in their design and in their responses.

How does one avoid introducing bias into AI through a lack of diversity? Quite simply, by ensuring the teams behind the AI are diverse. It’s also key that this diversity encompasses not only ethnicity but also background, class, lived experience and other differences.

Diversity matters all the way along the value chain, too, because any biases that escape detection early on are then far more likely to be caught before they damage the business, whether internally or externally.

Greater representation internally also tends to foster trust externally. In a data-driven economy, customers who feel represented by a business, and who trust it, are more willing to share personal data with it, because they are more likely to believe that the products built from that data will benefit them. As data is the fuel that powers AI, this in turn leads to better AI systems.

Varied and inclusive representation also guards against cultural bias, in which one’s own cultural norms shape one’s decision-making. Consider, for instance, that some scripts are read from right to left rather than left to right, as is standard in the West; the differences between Spanish speakers in South America and those in Spain; or the enormous differences in dietary preferences that can exist between an immigrant neighbourhood and its neighbours in the very same city.

Oversight and implementation

Oversight is another link in the chain where bias can creep in. If a committee or other overarching body in an organisation signs off on something it shouldn’t, whether through ignorance, ineptitude or external pressure, the organisation may embark on an AI project it ought not to pursue. Alternatively, incorrect methodologies may be approved, or the body may be seen to endorse potentially harmful outcomes, whether tacitly or explicitly.

Meanwhile, once approval has been given, actual implementation may reveal that the real-world implications haven’t been considered. It’s here, too, that ethical considerations come to the fore. The output from an algorithm may suggest an action that doesn’t correspond with an organisation’s ethical positions or the culture in which it operates.

By way of example, an algorithm created five years ago, and free of bias when it was coded, may no longer be appropriate for today’s users, and using it may produce biased outputs. This is where human intervention can be essential, and where transparency helps: being open about how a system works widens the pool of people both able and willing to flag problems.

The nuance of human intelligence is invaluable in shaping AI, and it is human intelligence that is ultimately accountable for anything that results from placing faith in an artificial version of it.

The biases outlined above exist in people long before they exist in machines, so while it’s imperative to weed them out of machines, doing so is also an excellent opportunity for reflection. If we examine our own weaknesses and failings and try to address them, we’re far less likely to introduce them into the AI systems we create, whether now or in the future.