Source: engadget.com

Google’s latest flagship phone, the Pixel 4, is renowned for its exceptional camera software. If you’re curious about how that was achieved, Google has revealed more about how Portrait Mode works, describing how its dual-pixel auto-focus system functions.

Portrait Mode takes images with a shallow depth of field, focusing on the primary subject and blurring out the background for a professional look. It does this by using machine learning to estimate how far away objects are from the camera, so the primary subject can be kept sharp and everything else can be blurred.

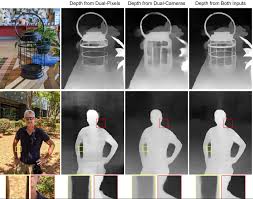

To estimate depth, the Pixel 4 captures an image using two cameras, the wide and telephoto cameras, which are 13 mm apart. This produces two slightly different views of the same scene, which, like the views from a pair of human eyes, can be used to estimate depth. The cameras also use a dual-pixel technique in which every pixel is split in half, with each half seeing the scene through a different half of the lens, to give even more depth information.
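To see why two views 13 mm apart carry depth information, here is a minimal sketch of the textbook stereo triangulation relation, depth = focal length × baseline / disparity. This is not Google's method, which relies on machine learning. The function name, the focal length of 3000 pixels, and the assumption of two identical, perfectly aligned cameras are all hypothetical and chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_mm=13.0):
    """Estimate distance (in mm) to a point seen by two side-by-side cameras.

    disparity_px:    how many pixels the point shifts between the two views
    focal_length_px: focal length expressed in pixels (hypothetical value below)
    baseline_mm:     distance between the cameras; 13 mm per the article
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_mm / disparity_px


# Nearby objects shift more between the two views than distant ones.
near_mm = depth_from_disparity(disparity_px=39, focal_length_px=3000)
far_mm = depth_from_disparity(disparity_px=13, focal_length_px=3000)
print(near_mm, far_mm)
```

With such a small baseline, distant objects shift by only a few pixels, which is one reason extra cues such as dual pixels are useful.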

Using both dual cameras and dual pixels allows a more accurate estimation of the distance of objects from the camera, which leads to a crisper image. Machine learning is used to determine how to weight the different outputs for the best photo.

Google has also improved the bokeh, or blurred background, effect. Previously, bokeh blurring was performed after tone mapping, a step that makes shadows brighter relative to highlights. Tone mapping lowers the overall contrast of the image, however. So for Portrait Mode, the software now blurs the raw image first and then applies tone mapping, which makes the background nicely blurred but also as saturated and rich as the foreground.
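The effect of blurring the raw image before tone mapping can be illustrated with a toy one-dimensional example. This is only a sketch under stated assumptions: the `tone_map` curve (a simple x / (1 + x) compression) and the `box_blur` helper are stand-ins chosen for brevity, not the operators the Pixel 4 actually uses, and the pixel values are made up.

```python
def tone_map(x):
    # Hypothetical compressive curve: lifts shadows relative to highlights.
    return x / (1.0 + x)


def box_blur(row, radius):
    # Simple stand-in for a bokeh blur: average each pixel with its neighbors.
    out = []
    for i in range(len(row)):
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Linear (raw) brightness: a dark background with one bright point of light.
raw = [0.05] * 4 + [20.0] + [0.05] * 4

# Tone map first, then blur: the highlight is already compressed when blurred.
tone_then_blur = box_blur([tone_map(v) for v in raw], radius=2)

# Blur the raw values first, then tone map: the highlight keeps its true energy.
blur_then_tone = [tone_map(v) for v in box_blur(raw, radius=2)]

print(round(tone_then_blur[4], 3), round(blur_then_tone[4], 3))
```

In this toy case the blurred highlight comes out far brighter when the raw values are blurred first, because the blur spreads the light's real brightness rather than its already-compressed value.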