Source: analyticsindiamag.com

State-of-the-art reinforcement learning (RL) techniques require many iterations over many samples from the environment to learn a target task. For instance, OpenAI Five, the system that plays Dota 2, learns from batches of 2 million frames every 2 seconds. Infrastructure that handles RL at this scale must not only collect a large number of samples but also iterate over them quickly during training. Doing this efficiently means overcoming a few common challenges:

The learner must service a large number of read requests from actors for model retrieval, and this load grows as the number of actors increases.

The processor performance is often restricted by the efficiency of the input pipeline in feeding the training data to the compute cores.

As the number of computing cores increases, the performance of the input pipeline becomes even more critical for the overall training runtime.

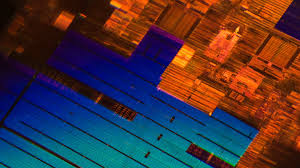

So, Google has now introduced Menger, a large-scale distributed reinforcement learning infrastructure with localised inference. It can scale up to several thousand actors across multiple processing clusters, reducing the overall training time in the task of chip placement. Chip placement, or chip floorplanning, is time-consuming and manual. Earlier this year, Google demonstrated how chip placement could be approached as a deep reinforcement learning problem to bring down the time needed to design a chip.

With Menger, Google tested scalability and efficiency using TPU accelerators on chip placement tasks.

How It Works

The above illustration is an overview of a distributed RL system with multiple actors placed in different Borg cells. Borg, which Google described publicly in a 2015 paper, is a cluster manager that runs hundreds of thousands of jobs, from many thousands of different applications, across clusters of up to tens of thousands of machines each. As more actors send frequent requests for the model, communication between the learner and the actors is throttled, which increases convergence time.

To address this, Menger places caching components between the learner and the actors. The main responsibility of these components, wrote the researchers, is to strike a balance between the large number of requests from actors and the learner job. They also state that adding caching components not only reduces the pressure on the learner to service read requests but also lets the actors be distributed further across multiple Borg cells, at the cost of only a marginal communication overhead.
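The effect of such a caching layer can be illustrated with a minimal sketch. This is not Menger's code: the class names `Learner` and `ModelCache`, the `simulate` helper and the round-robin assignment of actors to caches are all assumptions made for illustration. It only counts how many reads reach the learner when actors fetch the model through a cache instead of directly.

```python
from dataclasses import dataclass


@dataclass
class Learner:
    """Hypothetical learner: holds the latest model and counts direct reads."""
    version: int = 0
    weights: bytes = b"model-v0"
    reads_served: int = 0

    def publish(self, weights: bytes) -> None:
        # A new model version becomes available after a training step.
        self.version += 1
        self.weights = weights

    def read_model(self) -> tuple[int, bytes]:
        # Every call here is load on the learner job.
        self.reads_served += 1
        return self.version, self.weights


@dataclass
class ModelCache:
    """Hypothetical cache placed between a group of actors and the learner."""
    learner: Learner
    cached_version: int = -1
    cached_weights: bytes = b""

    def refresh(self) -> None:
        # One read against the learner, however many actors sit behind the cache.
        self.cached_version, self.cached_weights = self.learner.read_model()

    def read_model(self) -> tuple[int, bytes]:
        # Actor reads are served from the local copy and never touch the learner.
        return self.cached_version, self.cached_weights


def simulate(num_actors: int, num_caches: int) -> int:
    """Return how many reads the learner served for one model update."""
    learner = Learner()
    learner.publish(b"model-v1")
    caches = [ModelCache(learner) for _ in range(num_caches)]
    for cache in caches:
        cache.refresh()
    for actor_id in range(num_actors):
        # Assumed policy: actors are spread round-robin over the caches.
        version, _ = caches[actor_id % num_caches].read_model()
        assert version == learner.version
    return learner.reads_served
```

In this toy setup, `simulate(512, 4)` makes the learner serve 4 reads per model update rather than 512, which is the kind of pressure reduction the researchers describe. A real system would also have to decide when each cache refreshes and how stale a model the actors may use.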

Menger uses Reverb, an open-source data storage system designed for machine learning applications, which provides an efficient and flexible platform for implementing experience replay in a variety of on-policy and off-policy algorithms. However, the researchers state that a single Reverb replay buffer service does not cut it: it scales poorly in a distributed RL setting and becomes inefficient with multiple actors. Reverb's sharding capability addresses this by balancing the load from a large number of actors across multiple replay buffer servers, instead of throttling a single server, while keeping the latency of each server low.
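The idea behind sharding a replay buffer can be sketched in plain Python. The sketch below does not use the Reverb API; `ReplayShard`, `ShardedReplayBuffer` and the modulo-based routing of actors to shards are hypothetical names and choices made only to show how writes from many actors can be spread over several servers.

```python
import random
from collections import deque


class ReplayShard:
    """Hypothetical stand-in for one replay buffer server."""

    def __init__(self, capacity: int) -> None:
        # Oldest items are evicted first once the shard is full.
        self.items = deque(maxlen=capacity)

    def insert(self, item) -> None:
        self.items.append(item)

    def sample(self, rng: random.Random):
        return rng.choice(list(self.items))


class ShardedReplayBuffer:
    """Routes each actor's writes to one of several shards."""

    def __init__(self, num_shards: int, capacity_per_shard: int) -> None:
        self.shards = [ReplayShard(capacity_per_shard) for _ in range(num_shards)]

    def insert(self, actor_id: int, trajectory) -> None:
        # Assumed routing rule: a fixed shard per actor, chosen by modulo.
        self.shards[actor_id % len(self.shards)].insert(trajectory)

    def sample(self, batch_size: int, rng: random.Random) -> list:
        # Pick a non-empty shard uniformly, then an item from it. This is
        # uniform over items only when the shards hold equal amounts of data.
        non_empty = [shard for shard in self.shards if shard.items]
        return [rng.choice(non_empty).sample(rng) for _ in range(batch_size)]

    def load(self) -> list:
        # Number of items currently held by each shard.
        return [len(shard.items) for shard in self.shards]


buffer = ShardedReplayBuffer(num_shards=4, capacity_per_shard=1000)
for actor_id in range(512):
    buffer.insert(actor_id, ("trajectory", actor_id))
batch = buffer.sample(batch_size=8, rng=random.Random(0))
```

With 512 actors and 4 shards, each shard receives the writes of 128 actors instead of one server absorbing all 512, so no single replay buffer server becomes the bottleneck.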

The researchers claim that they have successfully used Menger infrastructure to drastically reduce the training time.

Key Takeaways

Reinforcement learning applications have slowly found their way into unexpected domains. But implementing RL techniques is tricky, and the trade-off between performance and accuracy looms large in research. With Menger, the researchers have tried to address the shortcomings of RL infrastructure. Its promising results in the intricate task of chip placement have the potential to shorten the chip design cycle and to carry over to other challenging real-world tasks as well.

Menger reduces the average read latency by ~4.0x, leading to faster training iterations, especially for on-policy algorithms.

Efficient scaling of Menger is due to the sharding capability of Reverb.

Training time was reduced from ~8.6 hours to merely one hour, a speed-up of about 8.6x over the state of the art.