Source – home.bt.com

Google’s artificial intelligence company, DeepMind, has created a program capable of teaching itself how to walk and jump without prior input.

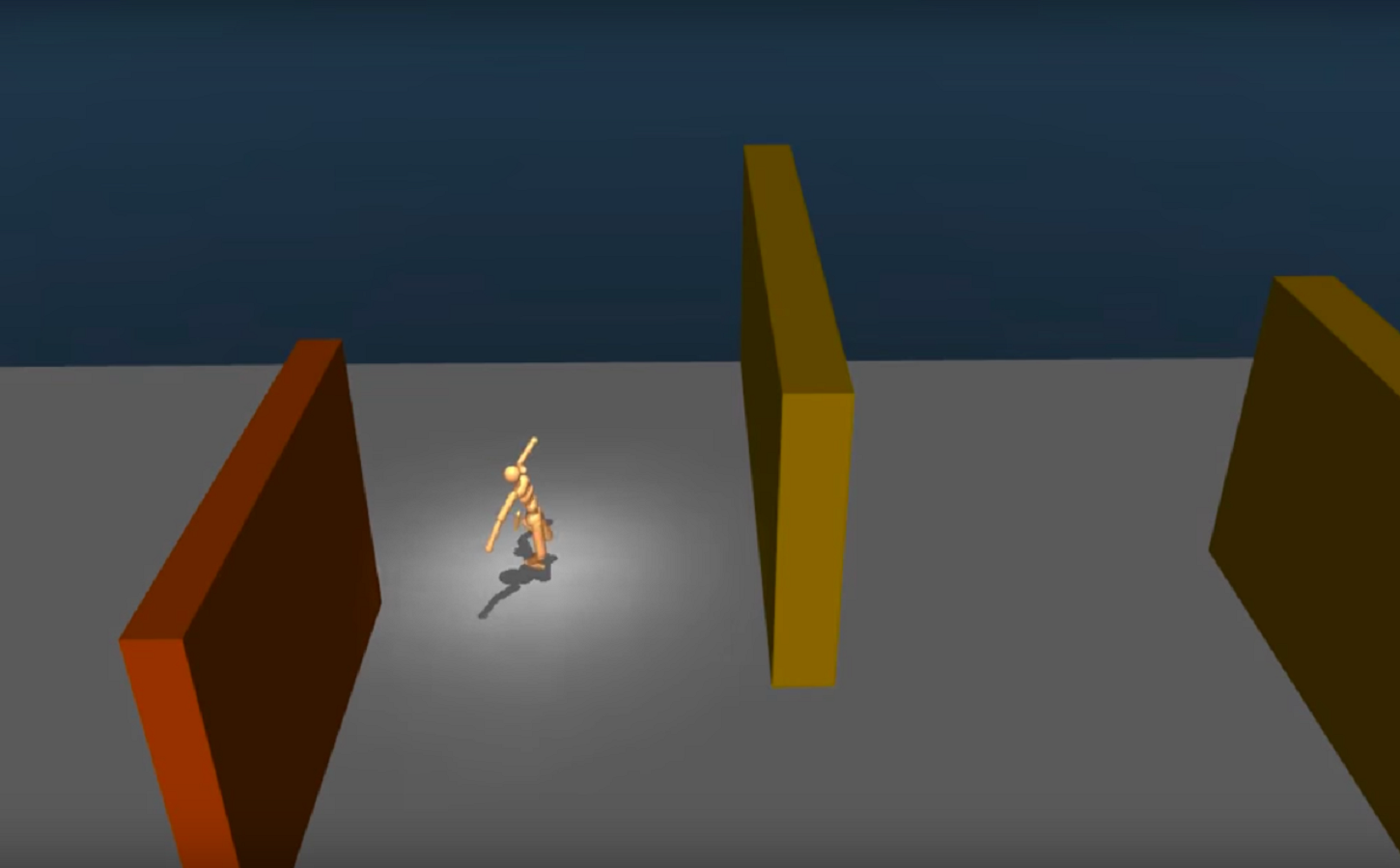

DeepMind’s website says it is on a “scientific mission” to push boundaries in AI. Its latest creation was able to make an avatar overcome a series of obstacles simply by being given an incentive to get from one point to another.

The AI was given no prior information on how it should walk, and the gaits it came up with are rather unusual.

A paper on the work, published on arXiv (the preprint server hosted by Cornell University Library), states that the technology uses the reinforcement learning paradigm, which allows “complex behaviours to be learned directly from simple reward signals”.
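To give a rough sense of what learning from a simple reward signal can look like, here is a toy sketch in Python. It is not DeepMind’s method or code: the track, the gap positions, the two actions and every name and parameter below are invented for illustration, and a basic tabular Q-learning rule stands in for the far more sophisticated techniques used in the research. The agent is rewarded only for forward progress and is never told that gaps need to be jumped.

```python
import random

# Hypothetical toy world, invented for illustration (not from the paper).
GOAL = 9           # track positions run from 0 to 9
GAPS = {3, 6}      # landing on one of these ends the episode
MOVES = (1, 2)     # action 0 = step one place, action 1 = jump two places


def act(pos, action):
    """Apply an action and return (new_pos, reward, done).

    The only reward is the distance moved forward; falling into a gap
    earns nothing and ends the episode.
    """
    new_pos = min(pos + MOVES[action], GOAL)
    if new_pos in GAPS:
        return new_pos, 0.0, True
    return new_pos, float(new_pos - pos), new_pos == GOAL


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # q[position][action]
    for _ in range(episodes):
        pos, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(2)            # explore
            else:
                action = q[pos].index(max(q[pos]))   # exploit
            new_pos, reward, done = act(pos, action)
            # Standard Q-learning target: reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[new_pos])
            q[pos][action] += alpha * (target - q[pos][action])
            pos = new_pos
    return q


def greedy_path(q):
    """Follow the learned values from the start and record each position."""
    pos, path, done = 0, [0], False
    while not done:
        action = q[pos].index(max(q[pos]))
        pos, _, done = act(pos, action)
        path.append(pos)
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

After training, the path the agent follows should clear both gaps and end at the goal, even though jumping over gaps was never spelled out as a rule. That is the same idea as in the research, on a vastly smaller scale.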

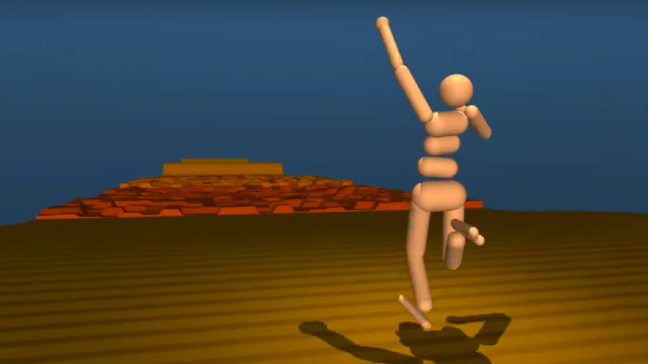

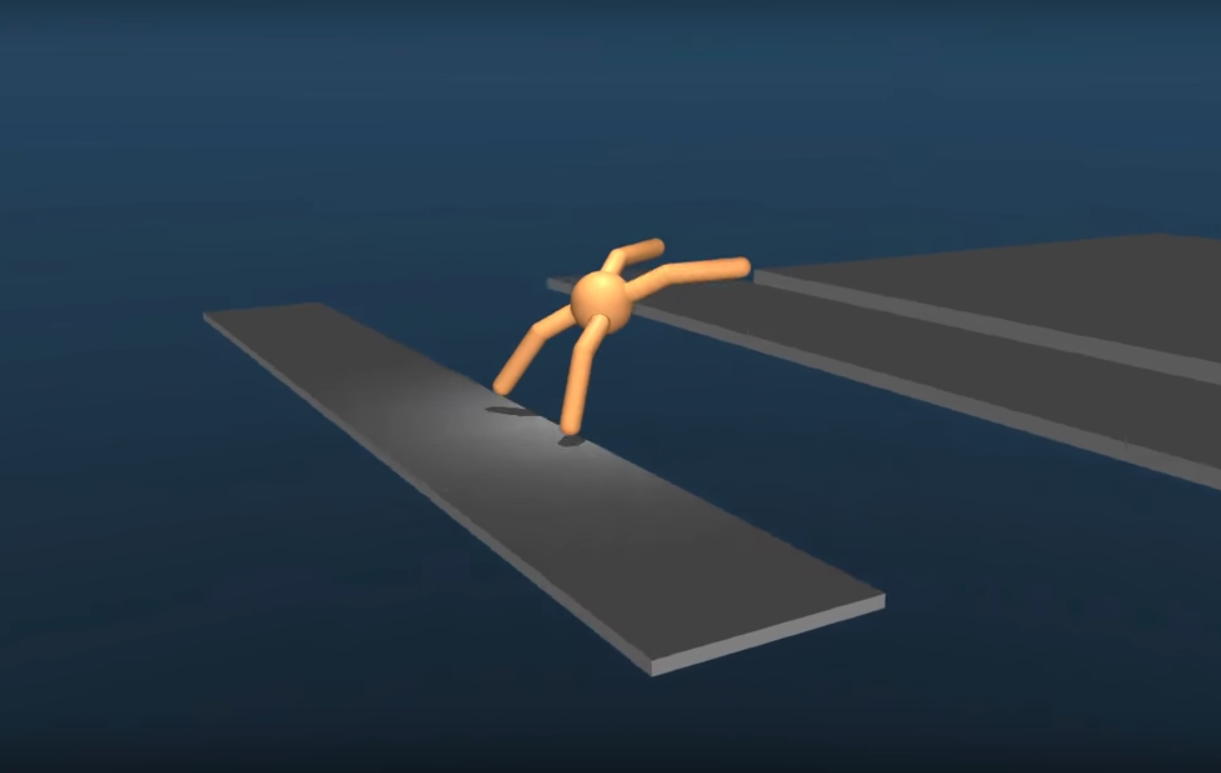

The avatars traversing the habitats included a humanoid, a pair of legs and a spider-like four-legged creation tasked with leaping across gaps.

The researchers set out to investigate whether the use of complex terrain and obstacles, as shown in the experiment, can aid the learning of complex behaviour.

“Our experiments suggest that training on diverse terrain can indeed lead to the development of non-trivial locomotion skills such as jumping, crouching, and turning for which designing a sensible reward is not easy,” they wrote.

“We believe that training agents in richer environments and on a broader spectrum of tasks than is commonly done today is likely to improve the quality and robustness of the learned behaviours – and also the ease with which they can be learned,” added the researchers.

“In that sense, choosing a seemingly more complex environment may actually make learning easier.”