Source: itworld.com

A service mesh brings security, resiliency, and visibility to service communications, so developers don’t have to

One of the shifts occurring in IT under the banner of digital transformation is the breaking down of large, monolithic applications into microservices: small, discrete units of functionality that run in containers. A container is a software package that includes all of a service’s code and dependencies, can be isolated, and can be easily moved from one server to another.

Containerized architectures like these are easy to scale up and run in the cloud, and individual microservices can be quickly rolled out and iterated. However, communication among these microservices becomes increasingly complex as applications get bigger and multiple instances of the same service run simultaneously. A service mesh is an emerging architectural form that aims to dynamically connect these microservices in a way that reduces administrative and programming overhead.

What is a service mesh?

In the broadest sense, a service mesh is, as Red Hat describes it, “a way to control how different parts of an application share data with one another.” This description could encompass a lot of different things, though. In fact, it sounds an awful lot like the middleware that most developers are familiar with from client-server applications.

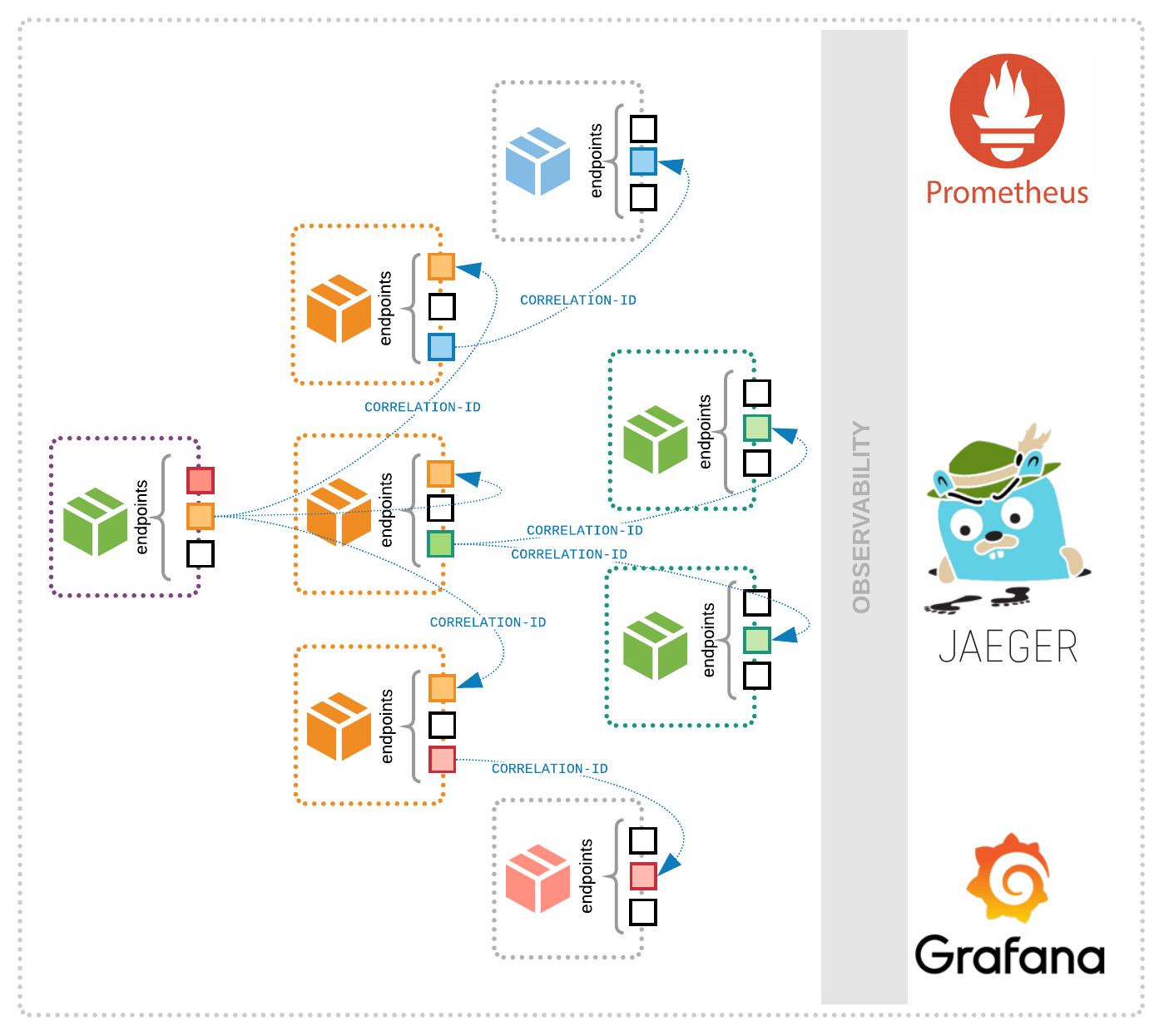

What sets a service mesh apart is that it is built to accommodate the particular nature of distributed microservice environments. In a large-scale application built from microservices, there might be multiple instances of any given service, running across various local or cloud servers. All of these moving parts make it difficult for individual microservices to find the other services they need to communicate with. A service mesh automatically takes care of discovering and connecting services on a moment-to-moment basis, so that neither human developers nor individual microservices have to.
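
To make the discovery idea concrete, here is a minimal sketch of a registry that tracks which instances of a service are currently alive. The `ServiceRegistry` class, its method names, and the addresses are all hypothetical and chosen for illustration; real meshes use far more elaborate, distributed mechanisms.

```python
import random
from collections import defaultdict


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its live instances."""

    def __init__(self):
        self._instances = defaultdict(set)

    def register(self, service, address):
        # Called when a new instance starts up.
        self._instances[service].add(address)

    def deregister(self, service, address):
        # Called when an instance shuts down or is declared dead.
        self._instances[service].discard(address)

    def resolve(self, service):
        # Return one currently registered address for the service.
        candidates = sorted(self._instances[service])
        if not candidates:
            raise LookupError(f"no live instances of {service!r}")
        return random.choice(candidates)


registry = ServiceRegistry()
registry.register("orders", "10.0.0.5:8080")
registry.register("orders", "10.0.1.9:8080")
registry.deregister("orders", "10.0.0.5:8080")
print(registry.resolve("orders"))  # only 10.0.1.9:8080 remains registered
```

The caller asks for a service by name and never hard-codes an address, which is the property that lets instances come and go without anyone updating configuration by hand.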

Think of a service mesh as the equivalent of software-defined networking (SDN) for Layer 7 of the OSI networking model. Just as SDN creates an abstraction layer so network admins don’t have to deal with physical network connections, a service mesh decouples the underlying infrastructure of the application from the abstract architecture that you interact with.

The idea of a service mesh arose organically as developers began grappling with the problems of truly enormous distributed architectures. Linkerd, the first project in this area, was born as an offshoot of an internal project at Twitter. Istio, another popular service mesh with major corporate backing, grew out of a collaboration among Google, IBM, and Lyft, and builds on the Envoy proxy that originated at Lyft. (We’ll look in more detail at both of these projects in a moment.)

Service mesh load balancing

One of the key features a service mesh provides is load balancing. We usually think of load balancing as a network function—you want to prevent any one server or network link from getting overwhelmed with traffic, so you route your packets accordingly. Service meshes do something similar at the application level, as Twain Taylor describes, and understanding that gives you a good sense of what we mean when we say a service mesh is like software-defined networking for the application layer.

In essence, one of the jobs of the service mesh is to keep track of which instances of various microservices distributed across the infrastructure are “healthiest.” It might poll them to see how they’re doing, or keep track of which instances are responding slowly to service requests and send subsequent requests to other instances. The service mesh can do similar work for network routes, noticing when messages take too long to get to their destination and taking other routes to compensate. These slowdowns might be due to problems with the underlying hardware, or simply to the services being overloaded with requests or working at their processing capacity. The important thing is that the service mesh can find another instance of the same service and route to it instead, thus making the most efficient use of the overall application’s capacity.
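
One way to picture "healthiest instance" routing is a balancer that keeps a smoothed latency figure per instance and sends the next request to whichever looks fastest. The sketch below is an illustration under stated assumptions, not how any particular mesh works: the `LatencyAwareBalancer` name, the smoothing factor `alpha`, and the sample latencies are all made up.

```python
class LatencyAwareBalancer:
    """Routes each request to the instance with the lowest smoothed latency."""

    def __init__(self, instances, alpha=0.3):
        self.alpha = alpha  # weight given to the newest observation
        # Instances start at 0.0, so unobserved ones are tried first.
        self.latency = {addr: 0.0 for addr in instances}

    def record(self, addr, seconds):
        # Exponentially weighted moving average of observed response times.
        old = self.latency[addr]
        self.latency[addr] = (1 - self.alpha) * old + self.alpha * seconds

    def pick(self):
        # Choose the instance that currently looks healthiest.
        return min(self.latency, key=self.latency.get)


balancer = LatencyAwareBalancer(["a:8080", "b:8080", "c:8080"])
for addr, seconds in [("a:8080", 0.05), ("b:8080", 0.90), ("c:8080", 0.02)]:
    balancer.record(addr, seconds)
print(balancer.pick())  # c:8080 has the lowest smoothed latency
```

If `c:8080` later starts responding slowly, its average rises and traffic shifts to another instance without the calling service being aware of it.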

Service mesh vs. Kubernetes

If you’re somewhat familiar with container-based architectures, you may be wondering where Kubernetes, the popular open source container orchestration platform, fits into this picture. After all, isn’t the whole point of Kubernetes that it manages how your containers communicate with one another? As the Kublr team points out on their corporate blog, you could think of Kubernetes’ “service” resource as a very basic kind of service mesh, as it provides service discovery and round-robin balancing of requests. But fully featured service meshes provide much more functionality, such as managing security policies and encryption, “circuit breaking” to suspend requests to slow-responding instances, and the health-aware load balancing described above.
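
Circuit breaking is easier to grasp with a small example. The following is a minimal sketch, assuming a simple failure counter and a fixed cool-down period; the `CircuitBreaker` class, its thresholds, and the injectable `clock` parameter are illustrative choices, not the behavior of any specific mesh.

```python
import time


class CircuitBreaker:
    """Stops sending requests to an instance after repeated failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before retrying
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def allow_request(self):
        if self.opened_at is None:
            return True  # circuit closed: traffic flows normally
        if self.clock() - self.opened_at >= self.reset_after:
            return True  # cool-down elapsed: let a trial request through
        return False  # circuit open: skip this instance

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()


now = [0.0]  # fake clock so the example runs instantly
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])
breaker.record_failure()
breaker.record_failure()
print(breaker.allow_request())  # False: the circuit is open
now[0] = 10.0
print(breaker.allow_request())  # True: the cool-down has elapsed
```

A mesh would keep one such breaker per instance and consult it before routing, so a struggling instance gets time to recover instead of being buried under retries.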

Keep in mind that most service meshes actually do require an orchestration system like Kubernetes to be in place. Service meshes offer extended functionality, not a replacement.

Service mesh vs. API gateways

Each microservice will provide an application programming interface (API) that serves as the means by which other services communicate with it. This raises the question of the differences between a service mesh and other more traditional forms of API management, like API gateways. As IBM explains, an API gateway stands between a group of microservices and the “outside” world, routing service requests as necessary so that the requester doesn’t need to know that it’s dealing with a microservices-based application. A service mesh, on the other hand, mediates requests “inside” the microservices app, with the various components being fully aware of their environment.

Another way to think about it, as Justin Warren writes in Forbes, is that a service mesh is for east-west traffic within a cluster and an API gateway is for north-south traffic going into and out of the cluster. But the whole idea of a service mesh is still early and in flux. Many service meshes—including Linkerd and Istio—now offer north-south functionality as well.

Service mesh architecture

The idea of a service mesh has emerged only in the last couple of years, and there are a number of different approaches to solving the “service mesh” problem, i.e., managing communications for microservices. Andrew Jenkins of Aspen Mesh identifies three possible choices regarding where the communication layer created by the service mesh might live:

- In a library that each of your microservices imports

- In a node agent that provides services to all the containers on a particular node

- In a sidecar container that runs alongside your application container

The sidecar-based pattern is one of the most popular service mesh patterns out there—so much so that it has in some ways become synonymous with service meshes generally. While that’s not strictly speaking true, the sidecar approach has gotten so much traction that this is the architecture we’re going to look at in more detail.

Sidecars in a service mesh

What does it mean to say a sidecar container “runs alongside” your application container? Red Hat has a pretty good explanation. Every microservices container in a service mesh of this type has another proxy container corresponding to it. All of the logic required for service-to-service communication is abstracted out of the microservice and put into the sidecar.

This may seem complicated—after all, you’re effectively doubling the number of containers in your application! But you’re also using a design pattern that is key to simplifying distributed apps. By putting all that networking and communications code into a separate container, you have made it part of the infrastructure and freed developers from implementing it as part of the application.

In essence, what you have left is a microservice that can be laser-focused on its business logic. The microservice doesn’t need to know how to communicate with all of the other services in the wild and crazy environment where they operate. It only needs to know how to communicate with the sidecar, which takes care of the rest.
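
The division of labor can be sketched in a few lines. In this illustration the sidecar is modeled as an ordinary object rather than a separate container, and every name here (`Sidecar`, `fake_transport`, `place_order`, the addresses) is hypothetical. The point is only that the business function contains no addresses, retries, or failover logic.

```python
class Sidecar:
    """Toy stand-in for a sidecar proxy: owns routing and retries."""

    def __init__(self, routes, transport, max_attempts=3):
        self.routes = routes          # service name -> list of addresses
        self.transport = transport    # callable(address, payload) -> response
        self.max_attempts = max_attempts

    def call(self, service, payload):
        addresses = self.routes[service]
        last_error = None
        for attempt in range(self.max_attempts):
            # Cycle through the known instances on each attempt.
            address = addresses[attempt % len(addresses)]
            try:
                return self.transport(address, payload)
            except ConnectionError as exc:
                last_error = exc  # try the next instance
        raise last_error


def fake_transport(address, payload):
    # Hypothetical network layer in which one instance is down.
    if address == "10.0.0.5:8080":
        raise ConnectionError(f"{address} unreachable")
    return f"{address} handled {payload}"


def place_order(sidecar, item):
    # Business logic only: it names the service and nothing else.
    return sidecar.call("inventory", item)


sidecar = Sidecar({"inventory": ["10.0.0.5:8080", "10.0.1.9:8080"]}, fake_transport)
print(place_order(sidecar, "widget"))  # 10.0.1.9:8080 handled widget
```

The first instance fails, the sidecar quietly retries against the second, and `place_order` never learns that anything went wrong.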

Service meshes: Linkerd, Envoy, Istio, Consul

So what are the service meshes available for use? Well, there aren’t exactly off-the-shelf commercial products out there. Most service meshes are open source projects that take some finagling to implement. The big names are:

- Linkerd (pronounced “linker-dee”)—Released in 2016, and thus the oldest of these offerings, Linkerd was spun off from a library developed at Twitter. Another heavy hitter in this space, Conduit, was rolled into the Linkerd project and forms the basis for Linkerd 2.0.

- Envoy—Created at Lyft, Envoy occupies the “data plane” portion of a service mesh. To provide a full service mesh, it needs to be paired with a “control plane,” like…

- Istio—Developed in collaboration by Lyft, IBM, and Google, Istio is a control plane for service proxies such as Envoy. While Istio and Envoy are a default pair, each can be paired with other platforms.

- HashiCorp Consul—Introduced with Consul 1.2, a feature called Connect added service encryption and identity-based authorization to HashiCorp’s distributed system for service discovery and configuration, turning it into a full service mesh.
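
The data plane and control plane split mentioned in the list above can be illustrated with a toy model. This is a conceptual sketch only and does not reflect the actual APIs of Envoy, Istio, or any other project; the `Proxy` and `ControlPlane` classes and their methods are invented for the example.

```python
class Proxy:
    """Data plane: handles traffic using whatever config it was last given."""

    def __init__(self, name):
        self.name = name
        self.routes = {}

    def apply_config(self, routes):
        # Keep a private copy so later changes arrive only via a push.
        self.routes = dict(routes)

    def route(self, service):
        return self.routes[service]


class ControlPlane:
    """Control plane: holds the desired config and pushes it to every proxy."""

    def __init__(self):
        self.proxies = []
        self.routes = {}

    def attach(self, proxy):
        self.proxies.append(proxy)
        proxy.apply_config(self.routes)

    def set_route(self, service, address):
        self.routes[service] = address
        for proxy in self.proxies:
            proxy.apply_config(self.routes)


control = ControlPlane()
proxies = [Proxy("orders-sidecar"), Proxy("billing-sidecar")]
for p in proxies:
    control.attach(p)
control.set_route("inventory", "10.0.1.9:8080")
print([p.route("inventory") for p in proxies])
```

The proxies do the per-request work, while a single change made at the control plane reaches all of them, which is why the two halves can be developed and swapped independently.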

Which service mesh is right for you? A comparison is beyond the scope of this article, but it’s worth noting that all of the products above have been proven in large and demanding environments. Linkerd and Istio have the most extensive feature sets, but all are evolving rapidly. You might want to check out George Miranda’s breakdown of the features of Linkerd, Envoy, and Istio, though keep in mind that his article was written before Conduit and Linkerd joined forces.