Source – cio.in

The idea of artificial intelligence (AI) has always seemed futuristic, but today there is no shortage of companies offering AI solutions. However, does AI deliver tangible business benefits, especially in datacenters? Take, for example, monitoring energy usage in a datacenter. In a typical datacenter, the manager has to keep track of hundreds of computer room air conditioners (CRACs) and adjust their thermostats manually. If an AI system were in place, it could learn to adjust the cooling automatically based on the time of day and the utilization rate. Essentially, the AI system would help the datacenter self-optimize for energy efficiency.
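To make the "learn from time of day and utilization" idea concrete, here is a deliberately simple sketch. It assumes a hypothetical log of past observations (hour, IT utilization, cooling demand in kW) and "learns" by averaging demand within 4-hour blocks and 10-percent utilization bands. All names and numbers are illustrative assumptions; commercial systems use far richer models.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical samples: (hour_of_day, it_utilization_0_to_1, cooling_kw_needed)
HISTORY = [
    (2, 0.30, 120.0),
    (2, 0.35, 126.0),
    (14, 0.80, 310.0),
    (14, 0.85, 322.0),
    (20, 0.60, 240.0),
]


def bucket(hour: int, utilization: float) -> tuple[int, int]:
    """Group a sample into a 4-hour time block and a 10-percent utilization band."""
    return hour // 4, min(int(utilization * 10), 9)


def learn_cooling_profile(samples):
    """Average the observed cooling demand for each (time block, utilization band)."""
    grouped = defaultdict(list)
    for hour, util, kw in samples:
        grouped[bucket(hour, util)].append(kw)
    return {key: mean(values) for key, values in grouped.items()}


def recommend_cooling_kw(profile, hour, utilization, fallback_kw):
    """Return the learned demand for this situation, or a conservative fallback."""
    return profile.get(bucket(hour, utilization), fallback_kw)
```

For example, `recommend_cooling_kw(learn_cooling_profile(HISTORY), 1, 0.32, 999.0)` falls in the same bucket as the two early-morning samples and returns their average. The fallback keeps cooling at a safe level whenever the model has never seen a situation.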

Hence, organizations today are racing to adopt innovations like AI that will help their datacenters scale without compromising capacity or future expansion. The global AI market is currently valued at more than USD 179 billion and is expected to reach USD 968 billion by 2021, a compound annual growth rate (CAGR) of more than 50 percent, according to Technavio’s research report Global Artificial Intelligence Market 2017-2021.
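As a quick consistency check on these figures, assume the current value corresponds to 2017, so the forecast spans four annual steps to 2021. The CAGR implied by the two endpoints is then:

```latex
\text{CAGR} = \left(\frac{V_{2021}}{V_{2017}}\right)^{1/4} - 1
            = \left(\frac{968}{179}\right)^{1/4} - 1
            \approx 5.408^{1/4} - 1
            \approx 0.525
```

That works out to roughly 52.5 percent per year, in line with the report's "above 50 percent."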

Similarly, International Data Corporation (IDC) predicts that the market for AI/cognitive solutions will experience a CAGR of 55.1 percent over the 2016-2020 forecast period, primarily driven by technology companies like IBM, Intel and Microsoft. By 2021, workloads such as deep learning and AI will become important factors for datacenter designs and architectures, according to Gartner research.
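For a sense of scale, assume the 2016-2020 forecast period is counted as four annual compounding steps. A 55.1 percent CAGR sustained over that period multiplies the market by roughly:

```latex
(1 + 0.551)^{4} = 1.551^{4} \approx 5.79
```

In other words, the 2020 market would be nearly six times its 2016 size.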

Deloitte defines AI as the theory and development of computer systems able to perform tasks that normally require human intelligence. Businesses are shifting their focus away from embedding technology in products and services and automating operations. Instead, they are using machine learning to uncover insights that inform operational and strategic decisions across the organization. As that happens, datacenters will become far more crucial.

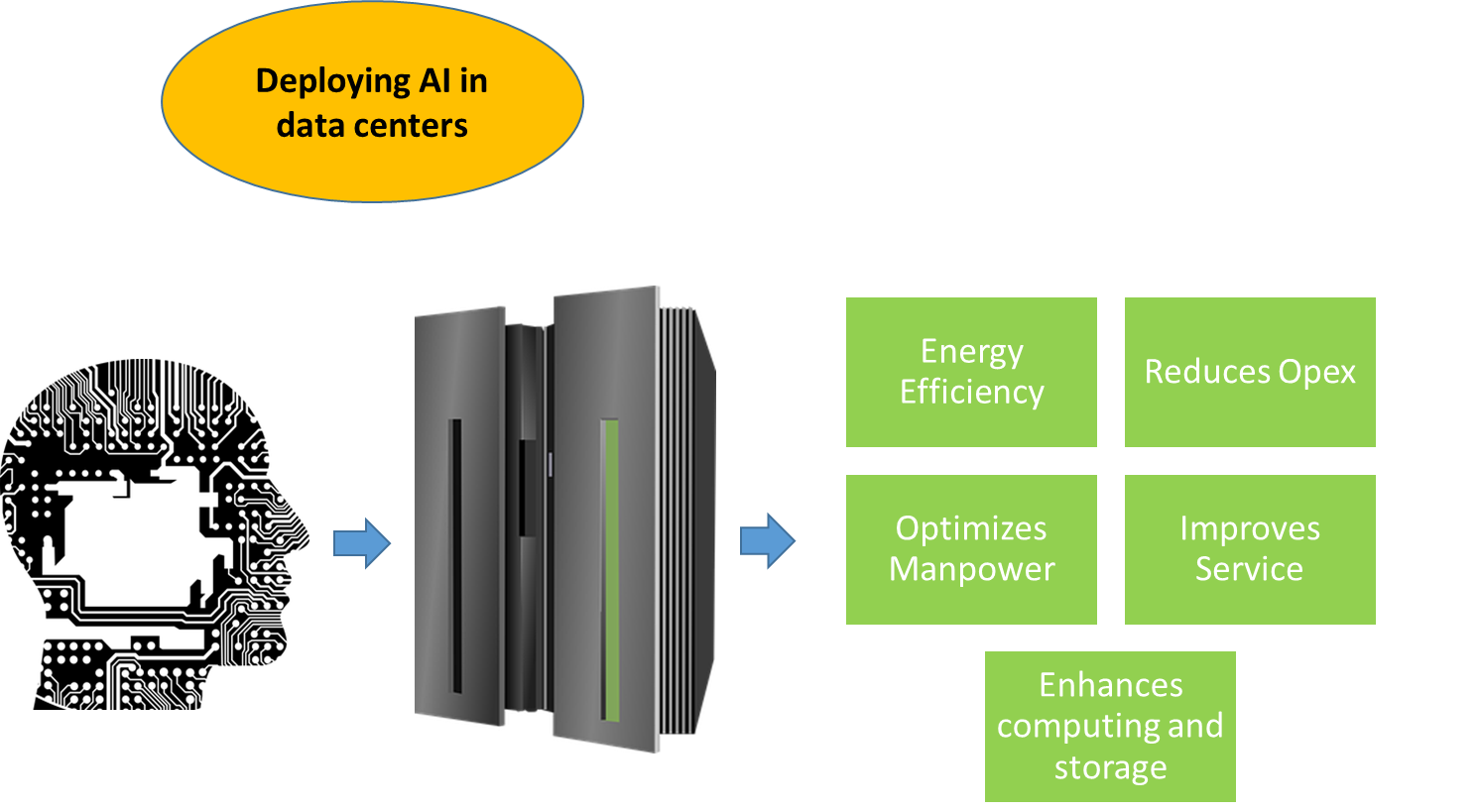

The use of AI in datacenters has increased considerably because it is proving effective at reducing energy consumption and operating costs, improving uptime, and supporting long-term planning. Combined with smart management and monitoring solutions, AI can analyze the power, cooling, capacity, security, and overall health and status of critical backend systems. The top applications of AI in datacenters are as follows:

Energy efficiency: Constant uptime demands energy efficiency. According to 2016 research by the Ponemon Institute, the average cost of a single datacenter outage is approximately USD 740,357. AI algorithms can help a datacenter “self-optimize” its energy usage. Vigilent, a company that applies IoT, machine learning and prescriptive analytics in mission-critical environments, reduces excess datacenter cooling by using real-time monitoring and machine learning software to match cooling capacity to actual cooling needs.
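Matching cooling capacity to actual need can be illustrated with a small greedy heuristic. It is not Vigilent's method, which the article does not describe. The sketch assumes each CRAC unit has a known capacity and a hypothetical efficiency rating. It switches on the most efficient units first until their combined capacity covers the measured heat load plus a safety margin.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CracUnit:
    name: str
    capacity_kw: float  # rated cooling capacity
    efficiency: float   # hypothetical: kW of cooling delivered per kW of electricity


def select_units(units, heat_load_kw, margin=0.10):
    """Greedily pick the most efficient units until capacity covers load plus margin.

    Returns the chosen units; raises ValueError if the whole fleet is not enough.
    """
    target = heat_load_kw * (1 + margin)
    chosen, total = [], 0.0
    for unit in sorted(units, key=lambda u: u.efficiency, reverse=True):
        if total >= target:
            break
        chosen.append(unit)
        total += unit.capacity_kw
    if total < target:
        raise ValueError("insufficient cooling capacity for the measured load")
    return chosen
```

Units that are not selected can be idled or run at minimum, which is where the savings come from. A real controller would also account for airflow zones and redundancy requirements, which this sketch ignores.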

Reduces Opex: Off-the-shelf smart management and monitoring solutions can be embedded with AI to reduce and control datacenter operating expenses. Businesses can use AI-derived insights to optimize the performance of data-based operations, adjust workflows, and extend their physical infrastructure. Google reduced the overhead in its datacenters’ power usage effectiveness (PUE) by 15 percent with a custom AI smart management and monitoring solution that uses machine learning to control about 120 datacenter variables, from fan speeds to windows.
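Datacenter energy savings of this kind are often expressed through power usage effectiveness (PUE). PUE is the ratio of total facility power to IT equipment power, and the overhead spent on cooling and other non-IT loads is PUE minus 1. The minimal sketch below uses hypothetical load figures to show how a fractional cut in that overhead changes PUE.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw


def pue_after_overhead_cut(current_pue: float, overhead_reduction: float) -> float:
    """Apply a fractional cut to the overhead (PUE - 1), leaving IT power unchanged."""
    return 1 + (current_pue - 1) * (1 - overhead_reduction)


# Hypothetical facility: 1,200 kW total draw for 1,000 kW of IT load.
baseline = pue(1200.0, 1000.0)                   # about 1.2
improved = pue_after_overhead_cut(baseline, 0.15)  # about 1.17
```

Note that a 15 percent cut in overhead is much smaller than a 15 percent cut in total power. Only the non-IT share of the facility's consumption shrinks.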

Improves uptime, troubleshooting and service: AI reduces datacenters’ energy consumption, improves uptime and cuts costs without compromising performance. Datacenter managers can deploy AI alongside robotic technology to connect or disconnect networks quickly and easily while adding security and manageability. With AI layered on robotic technology, network adjustments can be made automatically as soon as a threat is identified. This significantly limits the spread of ransomware, malware or viruses while giving IT managers time to investigate. Facebook’s Big Sur system uses NVIDIA’s Tesla Accelerated Computing Platform, and the resulting gains in performance and latency let Facebook process more data and shorten the time needed to train its neural networks.
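The "identify, then adjust the network automatically" step can be sketched as a simple outlier rule. The sketch assumes a hypothetical feed of new outbound connections per host over the last minute. It flags hosts far above the fleet median as candidates for automatic isolation. The isolation action itself, such as moving a host to a quarantine VLAN, is assumed and not shown.

```python
from statistics import median


def hosts_to_quarantine(conn_counts: dict[str, int], threshold: float = 5.0) -> list[str]:
    """Flag hosts whose new-connection count is far above the fleet median.

    Uses the median absolute deviation (MAD) so that a few infected hosts do
    not distort the baseline the way they would distort a mean.
    """
    counts = list(conn_counts.values())
    med = median(counts)
    mad = median(abs(c - med) for c in counts) or 1.0  # avoid dividing by zero
    return sorted(host for host, c in conn_counts.items() if (c - med) / mad > threshold)
```

A host that suddenly opens hundreds of connections, as self-propagating malware often does, stands out sharply against this baseline. Ordinary fluctuations between healthy hosts stay below the threshold.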

Optimizes allocation of technical personnel: AI can help datacenter managers monitor networks and other infrastructure. Used together with robotic automation, AI can significantly reduce human intervention in datacenters. AI software can help robots respond to requests, analyze them and make adjustments as needed. For example, the Dynatrace platform offers AI-based full-stack monitoring that gives IT professionals smart monitoring capabilities, making their jobs easier.
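Automated monitoring of this sort usually starts with a learned baseline for each metric. The sketch below is a generic exponentially weighted moving average (EWMA) detector, not Dynatrace's actual engine. It assumes a hypothetical series of metric samples, such as response times, and reports the positions where a sample exceeds the running baseline by more than a chosen fraction.

```python
def ewma_alerts(samples, alpha=0.3, tolerance=0.5):
    """Return indices where a sample exceeds the running EWMA baseline by > `tolerance`.

    `alpha` controls how quickly the baseline follows new data; `tolerance`
    is a fractional allowance (0.5 means 50 percent above baseline).
    """
    alerts = []
    baseline = None
    for i, value in enumerate(samples):
        if baseline is not None and value > baseline * (1 + tolerance):
            alerts.append(i)
        # Update the baseline after checking, so a spike is judged against the past.
        baseline = value if baseline is None else alpha * value + (1 - alpha) * baseline
    return alerts
```

A higher `alpha` adapts faster to legitimate shifts in load but also absorbs genuine problems sooner. Tuning that trade-off is the kind of judgment these monitoring products try to automate.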

As AI and machine learning improve, the scope and complexity of the tasks that can be automated will increase, freeing DevOps personnel from mundane tasks so they can focus on more innovative and creative solutions to the problems at hand. In a recent blog post, California-based Litbit said that it has developed the first AI-powered datacenter operator, called Dac, which uses machine learning to find potential problems in a datacenter, such as loose electrical hook-ups and leaking water. Another company, Wave2Wave, has developed a rack-mounted robot called ROME (Robotic Optical Switch for Datacenters) that makes physical optical connections in a few seconds. The robot manages the cable connections by mechanically moving components, much like an old-school telephone switchboard, where cords plugged into a panel established voice connections.
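The switchboard analogy suggests the bookkeeping a robotic cross-connect must keep: each port is patched to at most one peer. The class below is a hypothetical model of that state. It does not represent ROME's software, which the article does not describe. A robot would carry out each `connect` or `disconnect` physically. The model rejects requests that would double-book a port.

```python
class CrossConnectPanel:
    """Tracks which ports are patched together; each port joins at most one peer."""

    def __init__(self, num_ports: int):
        self.num_ports = num_ports
        self._peer: dict[int, int] = {}

    def _check(self, port: int) -> None:
        if not 0 <= port < self.num_ports:
            raise ValueError(f"port {port} out of range")

    def connect(self, a: int, b: int) -> None:
        """Record a patch between ports a and b, refusing ports already in use."""
        self._check(a)
        self._check(b)
        if a == b:
            raise ValueError("cannot patch a port to itself")
        if a in self._peer or b in self._peer:
            raise ValueError("port already in use; disconnect it first")
        self._peer[a] = b
        self._peer[b] = a

    def disconnect(self, port: int) -> None:
        """Remove the patch on `port` (and its peer); no-op if the port is free."""
        self._check(port)
        peer = self._peer.pop(port, None)
        if peer is not None:
            del self._peer[peer]

    def peer_of(self, port: int):
        """Return the port patched to `port`, or None if it is free."""
        return self._peer.get(port)
```

Storing the mapping in both directions keeps lookups constant-time from either end of a cable. Every mutation updates both entries together to keep them consistent.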

Optimizes server compute and storage systems: Automation is becoming widely accepted in datacenters, and the market is now seeking automated datacenter infrastructure management technologies. With the advent of AI, datacenter architects have to build high-bandwidth, low-latency networks on innovative architectures such as InfiniBand or Omni-Path.

There is an increasing trend toward embedding machine learning algorithms for key optimization, categorization, search and pattern-detection tasks. Until now, companies have handled the vast majority of machine learning and AI workloads in CPU-based environments. But that compute capacity may not be enough. This creates a clear need for hybrid, or heterogeneous, architectures in which core processors are complemented by special-purpose accelerators that deliver greater compute density and throughput for different applications. Today, architects must integrate technologies such as NVIDIA GPUs, Advanced Micro Devices’ GPUs and Intel’s Xeon Phi into the datacenter. Cloud file syncing and sharing service Dropbox uses deep-learning-based optical character recognition (OCR) to recognize words in documents scanned with its mobile apps.
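In a heterogeneous datacenter, a scheduler decides which device class runs each job. The sketch below uses a common greedy approach: sort jobs longest-first and place each one on the device that would finish it earliest. The per-workload speedup figures are hypothetical stand-ins for measured accelerator performance, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    speedup: dict[str, float]  # hypothetical throughput vs. a baseline CPU, per workload type
    busy_until: float = 0.0    # time at which the device becomes free


@dataclass
class Job:
    name: str
    workload: str              # e.g. "training", "inference", "etl"
    cpu_seconds: float         # estimated runtime on a baseline CPU


def finish_time(device: Device, job: Job) -> float:
    """When `job` would complete on `device`, given the device's current queue."""
    return device.busy_until + job.cpu_seconds / device.speedup.get(job.workload, 1.0)


def schedule(jobs, devices):
    """Assign each job (longest first) to the device that would finish it earliest."""
    plan = []
    for job in sorted(jobs, key=lambda j: j.cpu_seconds, reverse=True):
        best = min(devices, key=lambda d: finish_time(d, job))
        best.busy_until = finish_time(best, job)
        plan.append((job.name, best.name))
    return plan
```

With a CPU that runs everything at baseline speed and a GPU rated 10x for training and 5x for inference, large training and inference jobs land on the GPU. A general-purpose ETL job stays on the CPU, which is the division of labor the heterogeneous model aims for.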

In conclusion, the resurgence of AI will not only affect how datacenters operate but also change the makeup of the workforce employed in them. According to the Gartner research note “Predicts 2017: Artificial Intelligence”, more than 10 percent of IT hires in customer service will write scripts for bot interactions within two years, and by 2020, 20 percent of companies will dedicate workers to monitoring and guiding neural networks. It has also been predicted that organizations that use AI will achieve long-term success four times more often than others. AI gives enterprises the opportunity to offer customers an improved experience at every point of interaction, and datacenters are the key to unlocking that experience.