Source – infoworld.com

With every innovation comes new complications. Containers made it possible to package and run applications in a convenient, portable form factor, but managing containers at scale is challenging to say the least.

Kubernetes, the product of work done internally at Google to solve that problem, provides a single framework for managing how containers are run across a whole cluster. The services it provides are generally lumped together under the catch-all term “orchestration,” but that covers a lot of territory: scheduling containers, service discovery between containers, load balancing across systems, rolling updates/rollbacks, high availability, and more.

In this guide we’ll walk through the basics of setting up Kubernetes and populating it with container-based applications. This isn’t intended to be an introduction to Kubernetes’s concepts, but rather a way to show how those concepts come together in simple examples of running Kubernetes.

Choose a Kubernetes host

Kubernetes was born to manage Linux containers. However, as of Kubernetes 1.5, Kubernetes also supports Windows Server Containers, though the Kubernetes control plane must continue to run on Linux. Of course, with the aid of virtualization, you can get started with Kubernetes on any platform.
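In a mixed cluster (a Linux control plane plus Windows worker nodes), pods that need Windows Server Containers have to be steered onto Windows nodes. The Python sketch below builds such a Pod manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f -` accepts on stdin. It assumes the well-known node label `kubernetes.io/os`, which is available in Kubernetes 1.14 and later (older clusters used `beta.kubernetes.io/os`). The pod name and image are illustrative placeholders.

```python
import json


def windows_pod(name: str, image: str) -> dict:
    """Build a minimal Pod manifest that only schedules onto Windows nodes."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The scheduler only considers nodes whose labels contain every
            # key/value pair in nodeSelector; Windows nodes carry the label
            # kubernetes.io/os=windows.
            "nodeSelector": {"kubernetes.io/os": "windows"},
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Placeholder name and image; substitute your own Windows container image.
    manifest = windows_pod("iis-demo", "mcr.microsoft.com/windows/servercore/iis")
    print(json.dumps(manifest, indent=2))
```

Piping the output into `kubectl apply -f -` submits the pod. Linux-only workloads can pin themselves the other way with `kubernetes.io/os: linux`.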

If you’re opting to run Kubernetes on your own hardware or VMs, one common way to do this is to obtain a Linux distribution that bundles Kubernetes. This does away with the need for setting up Kubernetes on a given distribution—not only the download-and-install process, but even some of the configure-and-manage process.

CoreOS Tectonic, to name one such distro, focuses on containers and Kubernetes to the near-total exclusion of anything else. RancherOS takes a similar approach, and likewise automates much of the setup. Both can be installed in a variety of environments: bare metal, Amazon AWS VMs, Google Compute Engine, OpenStack, and so on.

Another approach is to run Kubernetes atop a conventional Linux distribution, although that typically comes with more management overhead and manual fiddling. Red Hat Enterprise Linux has Kubernetes in its package repository, for instance, but even Red Hat recommends its use only for testing and experimentation. Rather than cobbling something together by hand, Red Hat recommends that users of its stack run Kubernetes by way of the OpenShift PaaS, as OpenShift now uses Kubernetes as its own native orchestrator.

Many conventional Linux distributions provide special tooling for setting up Kubernetes and other large software stacks. Ubuntu, for instance, provides a tool called conjure-up that can be used to deploy the upstream version of Kubernetes on both cloud and bare-metal instances.

Choose a Kubernetes cloud

Kubernetes is available as a standard-issue item in many clouds, though it appears most prominently as a native feature in Google Cloud Platform (GCP). GCP offers two main ways to run Kubernetes. The most convenient and tightly integrated is Google Container Engine, which lets you use Kubernetes’s own command-line tools to manage the clusters it creates.
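Container Engine has since been renamed Google Kubernetes Engine, but the `gcloud container clusters` command group still drives it. The Python sketch below only assembles and prints the usual three-step workflow: create a cluster, fetch credentials so `kubectl` can reach it, then list the nodes. The cluster name, zone, and node count are placeholders, and it assumes the Google Cloud SDK and `kubectl` are already installed.

```python
import shlex
from typing import List


def gke_commands(cluster: str, zone: str, nodes: int = 3) -> List[List[str]]:
    """Return the gcloud/kubectl command lines for a basic hosted cluster."""
    return [
        # Provision the cluster; Google manages the control plane.
        ["gcloud", "container", "clusters", "create", cluster,
         "--zone", zone, "--num-nodes", str(nodes)],
        # Store the cluster endpoint and credentials in your kubeconfig.
        ["gcloud", "container", "clusters", "get-credentials", cluster,
         "--zone", zone],
        # Confirm kubectl can talk to the new cluster.
        ["kubectl", "get", "nodes"],
    ]


if __name__ == "__main__":
    # Placeholder cluster name and zone.
    for cmd in gke_commands("demo-cluster", "us-central1-a"):
        print(shlex.join(cmd))
```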

Alternatively, you could use Google Compute Engine to set up a compute cluster and deploy Kubernetes manually. This method requires more heavy lifting, but allows for customizations that aren’t possible with Container Engine. Stick with Container Engine if you’re just starting out with containers. Later on, after you get your sea legs and want to try something more advanced, like a custom version of Kubernetes or your own modifications, you can deploy VMs running a Kubernetes distro.

Amazon EC2 has native support for containers, but no native support for Kubernetes as a container orchestration system. Running Kubernetes on AWS is akin to using Google Compute Engine: You configure a compute cluster, then deploy Kubernetes manually.

Many Kubernetes distributions come with detailed instructions for getting set up on AWS. CoreOS Tectonic, for instance, includes a graphical installer but also supports the Terraform infrastructure provisioning tool. Alternatively, the Kubernetes kops tool can be used to provision a cluster of generic VMs on AWS (typically using Debian Linux, but other Linux flavors are partly supported).
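With kops, the workflow is roughly: record a cluster spec in a state store (an S3 bucket on AWS), apply it, then validate the result. The Python sketch below just builds those command lines. The cluster name, zone, and bucket are placeholders; a name ending in `.k8s.local` asks kops to use gossip-based discovery instead of a Route 53 DNS zone. Treat it as an outline and check `kops --help` for the flags your version supports.

```python
import shlex
from typing import List


def kops_commands(name: str, zones: str, state: str) -> List[List[str]]:
    """Return kops command lines to create, apply, and validate a cluster."""
    common = [f"--name={name}", f"--state={state}"]
    return [
        # Write a cluster spec to the state store; no AWS resources yet.
        ["kops", "create", "cluster", *common, f"--zones={zones}"],
        # Provision the VMs, networking, etc.; --yes applies the changes.
        ["kops", "update", "cluster", *common, "--yes"],
        # Check that the control plane and nodes came up healthy.
        ["kops", "validate", "cluster", *common],
    ]


if __name__ == "__main__":
    # Placeholder values; the S3 bucket must already exist.
    for cmd in kops_commands("demo.k8s.local", "us-east-1a", "s3://example-kops-state"):
        print(shlex.join(cmd))
```

Setting the `KOPS_STATE_STORE` environment variable is an alternative to passing `--state` on every command.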

Microsoft Azure has support for Kubernetes by way of the Azure Container Service. However, it’s not quite “native” support in the sense of Kubernetes being a hosted service on Azure. Instead, Kubernetes is deployed by way of an Azure Resource Manager template. Azure’s support for other container orchestration frameworks, like Docker Swarm and Mesosphere DC/OS, works the same way. If you want total control, as with any of the other clouds described here, you can always install a Kubernetes-centric distro on an Azure virtual machine.

One quick way to provision a basic Kubernetes cluster in a variety of environments, cloud or otherwise, is to use a project called Kubernetes Anywhere. This script works on Google Compute Engine, Microsoft Azure, and VMware vSphere (vCenter is required). In each case, it provides some degree of automation for the setup.

Your own little Kubernetes node

If you’re only running Kubernetes in a local environment like a development machine, and you don’t need the entire Kubernetes enchilada, there are a few ways to set up “just enough” Kubernetes for such use.

One option, provided by the Kubernetes development team itself, is Minikube. Run it and you’ll get a single-node Kubernetes cluster deployed in a virtualization host of your choice. Minikube has a few prerequisites, like the kubectl command-line interface and a virtualization environment such as VirtualBox, but those are readily available as binaries for MacOS, Linux, and Windows.
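A first Minikube session usually amounts to starting the VM and checking that `kubectl` points at it. The Python sketch below prints those commands. It assumes VirtualBox as the driver; recent Minikube releases spell the flag `--driver`, while older ones used `--vm-driver`.

```python
import shlex
from typing import List


def minikube_commands(driver: str = "virtualbox") -> List[List[str]]:
    """Return command lines for starting and checking a local Minikube cluster."""
    return [
        # Boot a single-node cluster inside the chosen hypervisor.
        ["minikube", "start", f"--driver={driver}"],
        # minikube start switches kubectl's current context to "minikube".
        ["kubectl", "config", "current-context"],
        # The single node should report Ready.
        ["kubectl", "get", "nodes"],
    ]


if __name__ == "__main__":
    for cmd in minikube_commands():
        print(shlex.join(cmd))
```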

For CoreOS users on MacOS, there is Kubernetes Solo, which runs on a CoreOS VM and provides a Status Bar app for quick management. Solo also includes the Kubernetes package manager Helm (more on Helm below), so that applications packaged for Kubernetes are easy to obtain and set up.

Spinning up your container cluster

Once you have Kubernetes running, you’re ready to begin deploying and managing containers. You can ease into container ops by drawing on one of the many container-based app demos available.

Take an existing container-based app demo, assemble it yourself to see how it is composed, deploy it, and then modify it incrementally until it approaches something useful to you. If you have chosen to find your footing by way of Minikube, you can use the Hello Minikube tutorial to create a Docker container holding a simple Node.js app in a single-node Kubernetes demo installation. Once you get the idea, you can swap in your own containers and practice deploying those as well.
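The Hello Minikube flow boils down to two objects: a Deployment that keeps the Node.js container running and a Service that exposes it. Here is a Python sketch that emits both as a JSON `List`, suitable for `kubectl apply -f -`. The name `hello-node`, the image tag `hello-node:v1`, and port 8080 are assumptions for illustration. `IfNotPresent` lets Minikube use an image already built inside its own Docker daemon instead of pulling it.

```python
import json


def hello_node(name: str = "hello-node", image: str = "hello-node:v1",
               port: int = 8080) -> dict:
    """Return a kubectl-applicable List holding a Deployment and a Service."""
    labels = {"app": name}  # ties the Deployment's pods to the Service
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    # Use a locally built image if present rather than pulling.
                    "imagePullPolicy": "IfNotPresent",
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # On Minikube, `minikube service <name>` opens this in a browser.
            "type": "LoadBalancer",
            "selector": labels,
            "ports": [{"port": port, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(hello_node(), indent=2))
```

Swapping in your own container is then a matter of changing the `image` argument and port.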

The next step up is to deploy an example application that resembles one you might be using in production, and to become familiar with more advanced Kubernetes concepts such as pods (one or more containers that comprise an application), services (logical sets of pods), replica sets (to provide self-healing on machine failure), and deployments (application versioning). Lift the hood of the WordPress/MySQL sample application, for instance, and you’ll see more than just instructions on how to deploy the pieces into Kubernetes and get them running. You will also see implementation details for many concepts used by production-level Kubernetes applications. You’ll learn how to set up persistent volumes to preserve the state of an application, how to expose pods to each other and to the outside world by way of services, how to store application passwords and API keys as secrets, and so on.
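To see how secrets and persistent volumes plug into a pod, here is a Python sketch in the spirit of the WordPress/MySQL sample (not its actual manifests). It builds a Secret holding a database password, a PersistentVolumeClaim, and a Deployment whose MySQL container reads the password from the Secret and mounts the claim at `/var/lib/mysql`. The object names, the `mysql:5.6` image, and the 20Gi size are assumptions. Note that values under a Secret's `data` field are only base64-encoded, not encrypted.

```python
import base64
import json


def mysql_stack(password: str, size: str = "20Gi") -> dict:
    """Return a kubectl-applicable List: Secret, PVC, and a MySQL Deployment."""
    # Hypothetical object names used throughout this sketch.
    secret_name, claim_name, labels = "mysql-pass", "mysql-pv-claim", {"app": "mysql"}
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": secret_name},
        "type": "Opaque",
        # Secret "data" values must be base64-encoded strings.
        "data": {"password": base64.b64encode(password.encode()).decode("ascii")},
    }
    claim = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": claim_name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mountable read-write by one node
            "resources": {"requests": {"storage": size}},
        },
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "mysql"},
        "spec": {
            "replicas": 1,
            # Recreate avoids two pods contending for the same ReadWriteOnce volume.
            "strategy": {"type": "Recreate"},
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "mysql",
                        "image": "mysql:5.6",
                        "env": [{
                            "name": "MYSQL_ROOT_PASSWORD",
                            # Injected from the Secret when the container starts.
                            "valueFrom": {"secretKeyRef": {"name": secret_name, "key": "password"}},
                        }],
                        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/mysql"}],
                    }],
                    "volumes": [{"name": "data",
                                 "persistentVolumeClaim": {"claimName": claim_name}}],
                },
            },
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [secret, claim, deployment]}


if __name__ == "__main__":
    # Placeholder password; in practice, create the Secret out of band.
    print(json.dumps(mysql_stack("change-me"), indent=2))
```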

Weaveworks has an example app, the Sock Shop, that shows how a microservices pattern can be used to compose an application in Kubernetes. The Sock Shop will be most useful to people familiar with the underlying technologies—Node.js, Go kit, and Spring Boot—but the core principles are meant to transcend particular frameworks and illustrate cloud-native technologies.
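Microservices such as those in the Sock Shop find one another through Services and the cluster's DNS: each Service gets a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain defaults to `cluster.local`. The Python helper below is a small sketch of that naming scheme; the `catalogue` service and `sock-shop` namespace are used only as illustrative values.

```python
def service_url(service: str, namespace: str = "default", port: int = 80,
                cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster URL a pod would use to reach a Service by DNS."""
    # Fully qualified form; pods in the same namespace can also use the
    # bare service name, thanks to DNS search domains.
    return f"http://{service}.{namespace}.svc.{cluster_domain}:{port}"


if __name__ == "__main__":
    print(service_url("catalogue", "sock-shop"))
    # http://catalogue.sock-shop.svc.cluster.local:80
```

Because callers address a stable Service name rather than individual pod IPs, pods behind a Service can be replaced or scaled without the callers noticing.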

If you glanced at the WordPress/MySQL application and imagined there might be a pre-baked Kubernetes app that meets your needs, you’re probably right. Kubernetes has an application definition system called Helm, which provides a way to package, version, and share Kubernetes applications. A number of popular apps (GitLab, WordPress) and app building blocks (MySQL, Nginx) have Helm “charts” readily available by way of the Kubeapps portal.
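A Helm chart is simply a directory containing a `Chart.yaml` that describes the package, a `values.yaml` of defaults, and a `templates/` folder of manifests with Go template expressions. The Python sketch below writes a minimal chart of that shape. It assumes Helm 3 (`apiVersion: v2` in `Chart.yaml`), and the chart name, image, and replica count are placeholders. `helm create` generates a fuller scaffold if you would rather not write one by hand.

```python
import tempfile
from pathlib import Path

CHART_YAML = """\
apiVersion: v2
name: {name}
description: A minimal example chart
type: application
version: 0.1.0
appVersion: "1.0"
"""

VALUES_YAML = """\
replicaCount: 1
image: nginx:1.25
"""

# Helm renders the {{ ... }} expressions; .Release and .Values are
# supplied at install time. This string is never passed to str.format.
DEPLOYMENT_TEMPLATE = """\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-web
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}-web
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}-web
    spec:
      containers:
        - name: web
          image: {{ .Values.image | quote }}
"""


def write_chart(root: Path, name: str) -> Path:
    """Write a minimal chart directory under root and return its path."""
    chart = root / name
    (chart / "templates").mkdir(parents=True, exist_ok=True)
    (chart / "Chart.yaml").write_text(CHART_YAML.format(name=name))
    (chart / "values.yaml").write_text(VALUES_YAML)
    (chart / "templates" / "deployment.yaml").write_text(DEPLOYMENT_TEMPLATE)
    return chart


if __name__ == "__main__":
    out = write_chart(Path(tempfile.mkdtemp()), "demo-web")
    print(f"Chart written to {out}; install with: helm install my-release {out}")
```

Overriding a default at install time (for example `--set replicaCount=3`) changes the rendered manifest without editing the template.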

Navigating Kubernetes

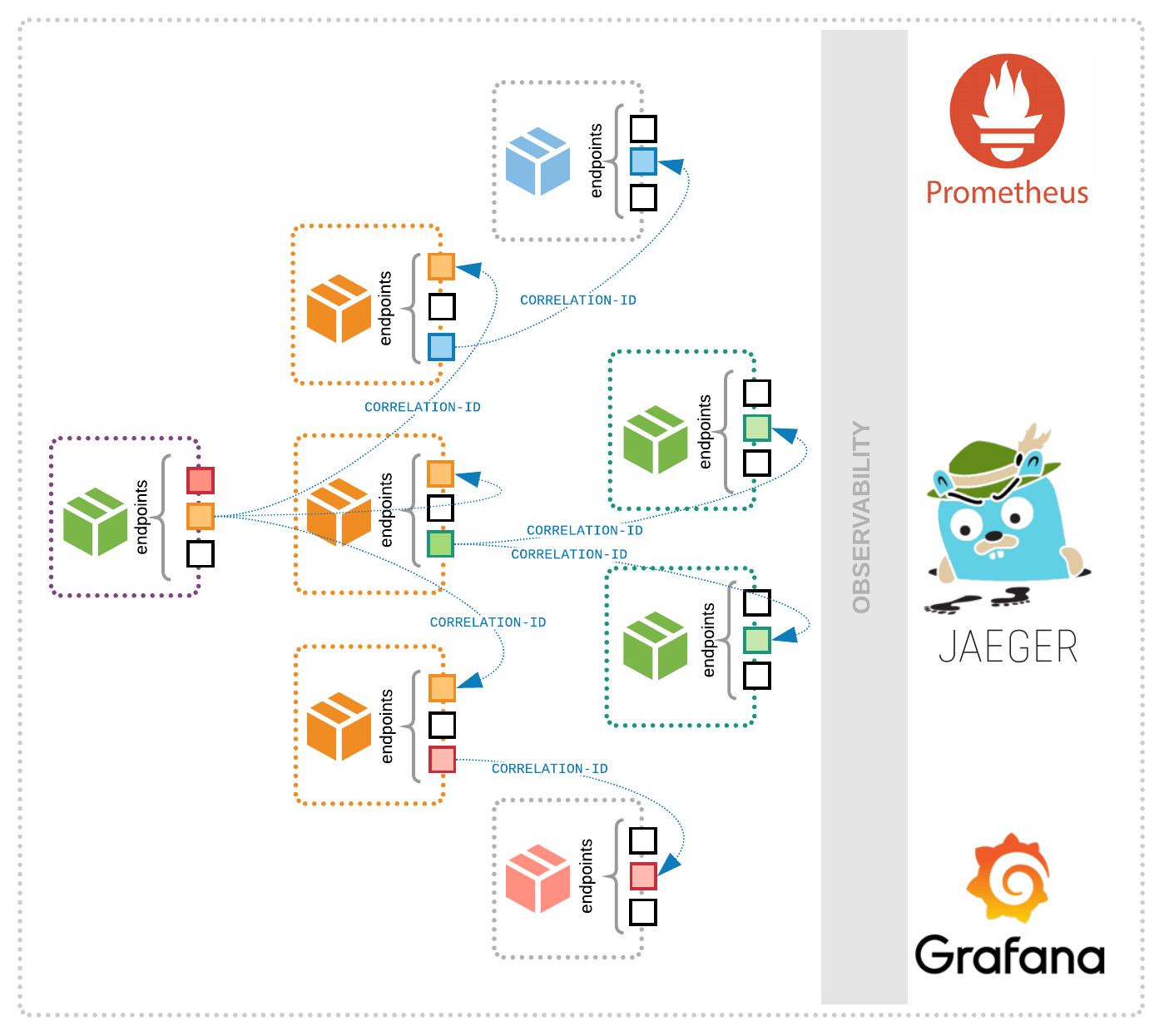

Kubernetes simplifies container management through powerful abstractions like pods and services, while providing a great deal of flexibility through mechanisms like labels and namespaces, which can be used to segregate pods, services, and deployments (such as development, staging, and production workloads).
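Labels are arbitrary key/value pairs, and an equality-based selector (the style of a Service's `selector` or a Deployment's `matchLabels`) picks out the objects whose labels contain every pair in the selector. The Python sketch below mimics that matching rule over a few hypothetical pods. It covers only equality, not set-based operators such as `In` or `NotIn`. On a real cluster, the equivalent query would be `kubectl get pods -l app=web,env=prod`.

```python
from typing import Dict, List

Pod = Dict[str, object]  # hypothetical minimal pod record: name plus labels


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selection: every selector pair must appear in labels."""
    # Extra labels on the object are fine; a missing or different value is not.
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods: List[Pod], selector: Dict[str, str]) -> List[str]:
    """Return the names of pods whose labels satisfy the selector."""
    return [p["name"] for p in pods if matches(selector, p["labels"])]


if __name__ == "__main__":
    pods = [
        {"name": "web-dev", "labels": {"app": "web", "env": "dev"}},
        {"name": "web-prod", "labels": {"app": "web", "env": "prod", "tier": "frontend"}},
        {"name": "db-prod", "labels": {"app": "mysql", "env": "prod"}},
    ]
    print(select(pods, {"app": "web", "env": "prod"}))  # ['web-prod']
    print(select(pods, {"env": "prod"}))                # ['web-prod', 'db-prod']
```

An `env` label like this is one way to separate development, staging, and production workloads; namespaces give a harder boundary, since names and many policies are scoped per namespace.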

If you take one of the above examples and set up different instances in multiple namespaces, you can then practice making changes to components in each namespace independently of the others. Deployments then let you roll those updates out incrementally across the pods in a given namespace.
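How incremental a rollout is depends on the Deployment's `RollingUpdate` settings, `maxSurge` and `maxUnavailable`, both of which default to 25%. When these are given as percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down, and if both resolve to zero it allows one pod to be unavailable so the rollout can still progress. The Python sketch below applies those rules to compute how many pods may exist at once and how many must stay available during an update. It accepts only integers or whole-percent strings such as `"25%"`.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]  # e.g. 2 or "25%"


def resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage of replicas into a pod count."""
    if isinstance(value, int):
        return value
    scaled = replicas * int(value.rstrip("%"))
    # Integer arithmetic avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas: int, max_surge: IntOrPercent = "25%",
                   max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (max pods at once, min pods available) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Both rounded to zero: permit one unavailable pod so progress is possible.
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rollout_bounds(10))  # (13, 8): surge ceil(2.5)=3, unavailable floor(2.5)=2
    print(rollout_bounds(4))   # (5, 3)
```

On a live cluster, `kubectl rollout status deployment/<name> --namespace staging` reports a rollout's progress in a single namespace.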