Source: devops.com

Compared with a traditional monolith, a microservices architecture can, in theory, bring many benefits. Microservices decouple software elements, enable reusable components and allow independent development cycles. In practice, however, microservices are prone to many issues.

For example, teams accustomed to a traditional monolithic build process may find it difficult to reshape their established practices. Furthermore, as the number of microservices increases into the hundreds, managing separate CI/CD pipelines for each service becomes untenable, especially as DevOps components aren’t always optimized to fit every pipeline precisely.

These are some of the real-world problems that Dan Garfield, chief technology evangelist at Codefresh, brought up in episode five of the DevNetwork Dev Professional Series. In this article, we follow his talk to examine the benefits of microservices, their hangups in a real-world CI/CD case study and solutions for scaling delivery as complexity and numbers increase.

Monoliths in Space

To get a better idea of why we need microservices, Garfield uses an analogy from space—the Apollo 13 mission. Apollo 13 was made of two ships: the lunar module and the command module. In software terms, these were essentially two giant monoliths.

When an oxygen tank failed, the crew took shelter in the lunar module and needed to reuse the command module's air filters there. However, the two modules' filters had been designed differently, so retrofitting the parts became a major headache.

This analogy shows how monolithic-style development can create tunnel vision. When teams adopt guidelines that work only for their own monolith, the resulting components are usually incompatible with anything outside it. This rules out reusable components, which lowers the value of the code and increases overall development effort.

“Being able to reuse components and scale independently is the benefit of microservices,” said Garfield.

Expedia’s Microservices Journey

To see real-world microservices architecture in action, take Expedia. Expedia's services previously lived inside monoliths, which meant a lot of duplication. The services were also tightly coupled, so if one service broke, the entire monolith had to be fixed.

Garfield describes how Expedia isolated its architecture into individual microservices to represent abilities such as car search, car sorting, bookings, airline search and others. While this aided elasticity and usability, the microservices journey came with issues.

Problems in Microservice Heaven

Expedia ran into multiple problems on its microservices journey. Though Expedia had valid business reasons to adopt microservices, “they didn’t have a plan for how to scale their CI/CD that was realistic,” said Garfield.

Foremost, as the number of microservices expanded into the hundreds and thousands, standardizing CI/CD became quite difficult. The organization was using one CI/CD pipeline per service, meaning they now had to support thousands of pipelines, each connected to an individual Git repository. A microservice may also have different pipelines for production, internal use or testing environments, leading to even more duplication.

When you scale microservices, you also encounter a new set of roadblocks related to network and storage. This became evident when Expedia realized how much network bandwidth, and associated cost, went into running validation and integration testing on thousands of separate CI/CD pipelines.

“The scale of automation itself is where most people have the biggest bottlenecks,” said Garfield.

Consolidating codebases with plugins and shared libraries also became a recurring problem. Expedia developers experienced issues with Jenkins and were forced to edit each pipeline programmatically or manually, copying and pasting code from one to the next. In short, the flow was not easy, and differing approaches between teams led to shadow IT.

Solutions For Scaling Microservices

To help ease large-scale microservice environments and CI/CD pipelines, Garfield recommended taking a few approaches:

- Use a container-based pipeline: The first principle Garfield suggested is using a container-based pipeline. If a pipeline is containerized, you can run it independently with different language versions. According to Garfield, “shared libraries are not the solution,” as they create version-specific conflicts. Using a Docker image is better than shared libraries because users can self-serve images with whatever version they want.

There are many images on Docker Hub that ease common CI/CD steps such as building a Docker image, cloning a Git repository, running unit tests, linting projects and security scanning. Using these images can help avoid wasted development effort.
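As a rough illustration of the container-based idea, the sketch below builds the `docker run` command line for each pipeline step, with every step pinned to its own image. The `Step` class, the `docker_argv` helper, the step names and the `/workspace` mount path are all hypothetical choices for this example, not part of any tool Garfield described; the code only constructs commands and does not execute them.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    name: str
    image: str          # container image the step runs in
    command: List[str]  # command executed inside the container


def docker_argv(step: Step, workspace: str) -> List[str]:
    """Build the `docker run` argument list for one pipeline step."""
    return [
        "docker", "run", "--rm",
        "-v", f"{workspace}:/workspace",  # mount the checked-out code
        "-w", "/workspace",               # run from the mounted directory
        step.image,
        *step.command,
    ]


# Hypothetical steps: each one pins its own image, so the language
# version used by one step cannot conflict with another step's.
STEPS = [
    Step("unit-tests", "python:3.11", ["python", "-m", "unittest"]),
    Step("lint", "node:20", ["npx", "eslint", "."]),
]

if __name__ == "__main__":
    for step in STEPS:
        print(" ".join(docker_argv(step, "/tmp/checkout")))
```

Because the image tag is part of each step, a team that needs a different language version changes one string instead of waiting on a shared library upgrade.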

- Consolidate to a single pipeline that operates with context: Instead of managing thousands of pipelines for all your microservices, Garfield suggested you use a single malleable pipeline for all integrations and deployments. His method involves Triggers, which carry information to direct actions.

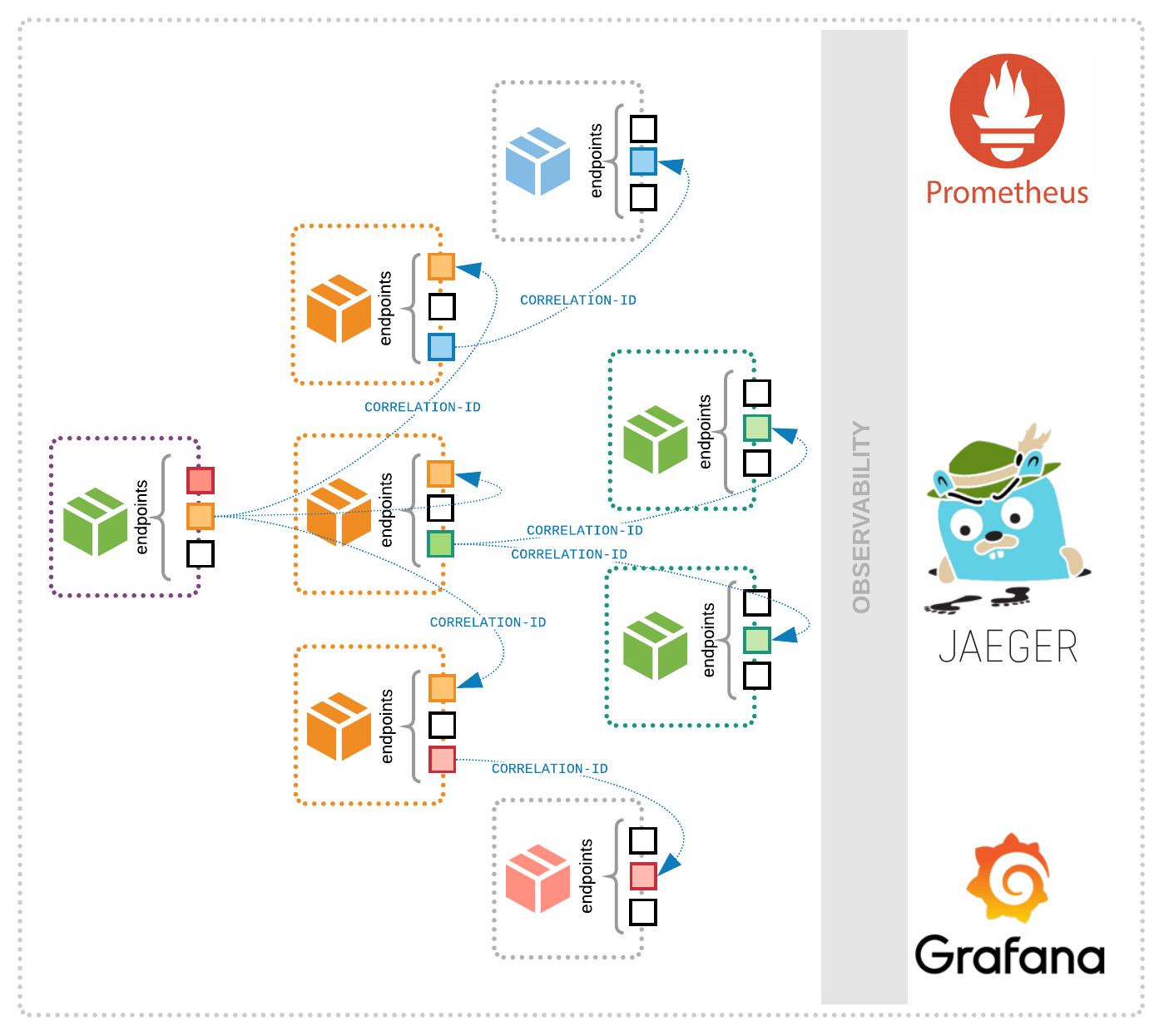

In this setup, Triggers carry context, or metadata, allowing a pipeline to change its behavior accordingly. These Triggers could be time-based or tied to actions such as a Git commit or the push of a new image. When a Trigger fires, it brings in the relevant dependencies to perform an action, such as cloning a repository. Later steps can then consume this context.

Garfield demonstrated how this is accomplished in practice within the Codefresh CI platform with native Kubernetes integrations. The platform also lets you filter and view builds by certain criteria, providing a window into commits and changes.

Garfield acknowledged this overall strategy does mean making microservices a bit more uniform, but perhaps the ease of deployment could outweigh the development constraints.
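To make the single-pipeline idea more concrete, here is a minimal sketch of one planning function that changes its behavior based on the context a trigger carries. It is not Codefresh's API: the `Trigger` class, the `plan` function, the trigger kinds and the context keys (`repo`, `revision`, `service`, `branch`, `image`) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Trigger:
    kind: str  # assumed kinds: "git_commit", "image_push", "schedule"
    context: Dict[str, str] = field(default_factory=dict)


def plan(trigger: Trigger) -> List[str]:
    """Return the steps one shared pipeline would run for this trigger."""
    ctx = trigger.context
    steps: List[str] = []
    if trigger.kind == "git_commit":
        steps.append(f"clone {ctx['repo']}@{ctx['revision']}")
        steps.append(f"build image {ctx['service']}:{ctx['revision']}")
        steps.append("run unit tests")
        # Only commits on the main branch move on to deployment.
        if ctx.get("branch") == "main":
            steps.append(f"deploy {ctx['service']} to staging")
    elif trigger.kind == "image_push":
        steps.append(f"scan image {ctx['image']}")
        steps.append(f"deploy {ctx['image']} to staging")
    elif trigger.kind == "schedule":
        steps.append("run integration tests")
    else:
        raise ValueError(f"unknown trigger kind: {trigger.kind}")
    return steps


if __name__ == "__main__":
    commit = Trigger("git_commit", {
        "repo": "example/car-search",  # hypothetical repository
        "revision": "abc123",
        "service": "car-search",
        "branch": "main",
    })
    for step in plan(commit):
        print(step)
```

Every service reuses the same `plan` logic; only the metadata on the trigger differs, which is what keeps the number of pipeline definitions from growing with the number of services.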

- Adopt canary release testing: If an organization has hundreds or thousands of microservices, spinning up each microservice to run independent tests can quickly become cost-prohibitive. As Garfield noted, “testing early becomes less useful as infrastructure complexity arises.”

To solve this issue, teams can adopt a canary release strategy. Canary release is a practice in which new software is first released and tested among a small subgroup of users. It’s a good way to “limit the blast radius of a change that goes wrong,” said Garfield.
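One common way to pick the "small subgroup" is to hash each user ID into a fixed bucket and send only the lowest buckets to the new release. The sketch below assumes that approach; the `bucket` and `route` functions and the percentage parameter are hypothetical, and real canary setups often do this at the load balancer or service mesh rather than in application code.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def route(user_id: str, canary_percent: int) -> str:
    """Return "canary" for roughly canary_percent% of users, else "stable"."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "canary" if bucket(user_id) < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    on_canary = sum(route(u, 5) == "canary" for u in users)
    print(f"{on_canary} of {len(users)} users routed to the canary")
```

Hashing keeps each user on the same version across requests, and raising `canary_percent` step by step widens the rollout while keeping the blast radius of a bad change small.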

Lasting Image: Pipeline Reusability

Adopting a single container-based CI pipeline could help resolve the expanding set of issues with large-scale microservices testing and deployment. By templatizing pipelines, many microservices could abstract away complexity and reuse the same modular components. In essence, the goal is to make CI/CD reusable in the same way microservices are.