Source: computerweekly.com

Unlike digital-first organisations, traditional businesses have a wealth of enterprise applications built up over decades, many of which continue to run core business processes.

In this series of articles we investigate how organisations are approaching the modernisation, replatforming and migration of legacy applications and related data services.

We look at the tools and technologies available, encompassing aspects of change management and the use of APIs and containerisation (and more), to make legacy functionality and data available to cloud-native applications.

This post is written by Ben Stopford, lead technologist, office of the CTO at Confluent, a company known for its event streaming platform built on Apache Kafka.

Stopford writes as follows…

Both mainframes and databases have an important place in today’s world, but one that is no longer as exclusive as it once was. When customers interact with companies today, they connect to a host of different backend systems spanning mobile, desktop, call centres and stores. Throughout this, they expect a single joined-up experience, whether they are viewing payments, browsing catalogues, being guided by machine learning routines or interacting with sensors in the world around them.

This is a job that is bigger than a mainframe, application or database.

It’s a job that necessitates a whole estate of different applications that work together.

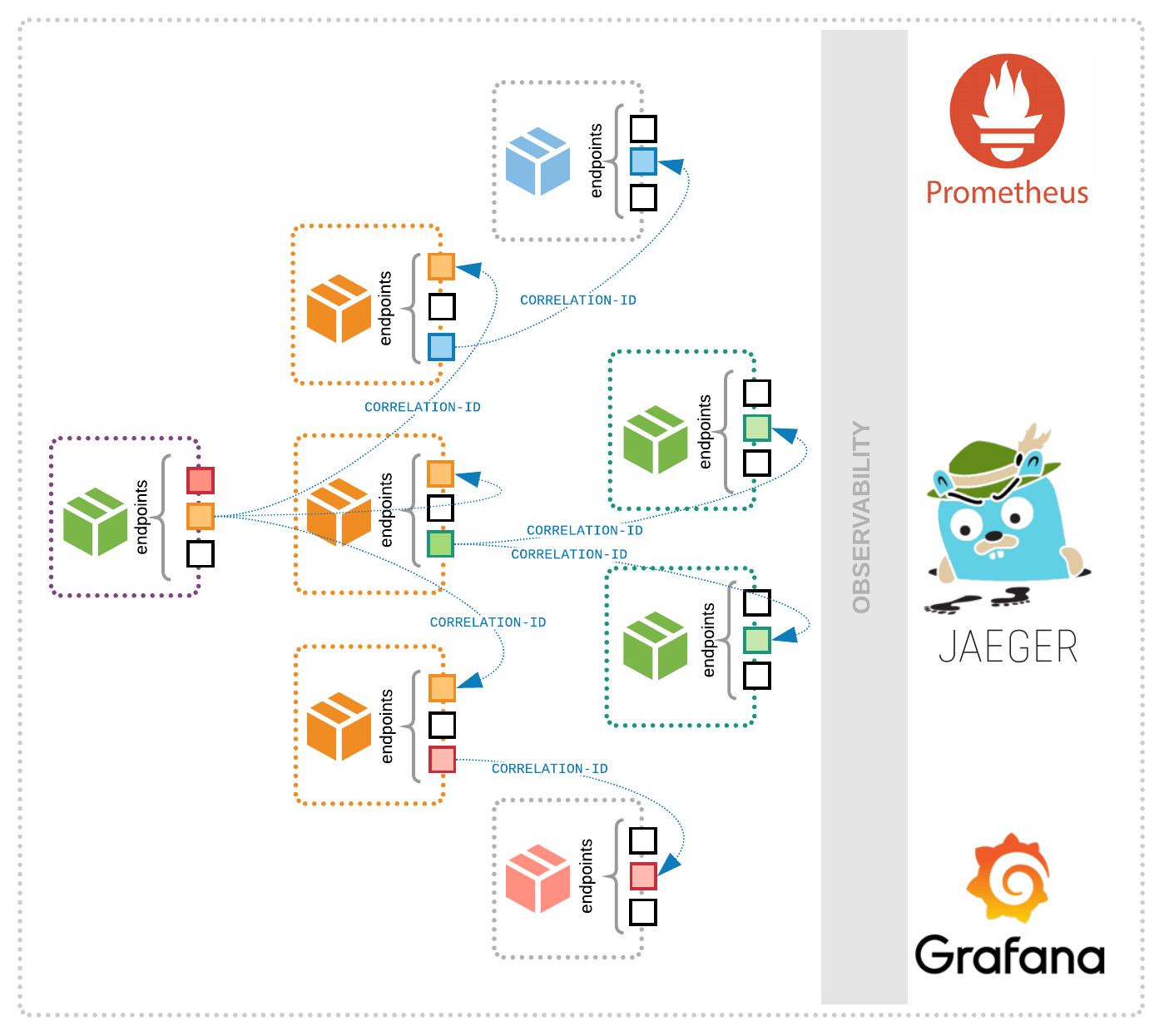

Microservices are an example of this. Cloud is a facilitator. But whatever the incarnation, data always needs to flow from application to application, microservice to microservice or data centre to data centre. In fact, any company that blends software, data and people together in some non-trivial way has to face this problem head-on.

Data in flight

Event streaming systems help with this by providing first-class data infrastructure that connects and processes data in flight: a real-time conduit that connects microservices, clouds and on-premise data centres together. The architectures that emerge, whether they are at Internet giants like Apple and Netflix or at the stalwarts of the FTSE 500, all have the same aim: to make the many disparate (but critical) parts of a software estate appear, from the outside, to be one.

Of course, mainframes and databases will always be a part of these estates, but increasingly they are individual pieces in an ever-growing and ever-evolving puzzle.

From monoliths to microservices

Think for a moment about why you chose microservices.

If the answer is simply to handle higher throughputs or provide more predictable latencies, there are other, less intrusive solutions available that will scale monolithic applications.

A better rationale is the understanding that microservices let you scale in ‘people terms’: teams working autonomously, releasing independently of one another and not being held up by the teams they depend on, or by those that depend on them. Event streaming plays an important role here because, without it, a microservice architecture inherits many of the issues of the monolith you’re trying to replace.

These issues can be manageable in some cases, for example if the whole company is one big web application. But where business processes run independently of one another, it’s desirable to keep the software, and the teams that run those services, as decoupled as possible.

For example, using event streams to connect your on-premise website to a fraud detection system running in the cloud lets the fraud detection scale elastically on cloud resources while still being fed real-time data. More importantly, should the fraud system go down, the main website remains unaffected.

Event streams provide the physical separation needed to do this. They act as a kind of central nervous system, connecting applications at the data layer while keeping them decoupled at the software layer. Whether you’re building something greenfield, evolving a monolith or going cloud-native, the benefits are the same.
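To make the decoupling argument concrete, here is a minimal Python sketch of the fraud detection example, assuming a toy in-memory append-only log. `EventLog`, `FraudChecker` and the amount-over-1,000 rule are hypothetical illustrations, not Kafka’s or Confluent’s API. What it shows is that the producer (the website) only ever appends, while the consumer tracks its own offset, so a consumer outage delays processing without blocking publication.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Hypothetical in-memory, append-only log standing in for an event stream."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> int:
        # Producers only ever append; they never wait on consumers.
        self.events.append(event)
        return len(self.events) - 1  # offset of the new event

    def read_from(self, offset: int) -> list:
        # Consumers pull everything at or after their own offset.
        return self.events[offset:]


@dataclass
class FraudChecker:
    """Hypothetical consumer that tracks its own position in the log."""
    log: EventLog
    offset: int = 0
    running: bool = True
    flagged: list = field(default_factory=list)

    def poll(self) -> int:
        if not self.running:
            return 0  # an outage here has no effect on the producer
        batch = self.log.read_from(self.offset)
        for event in batch:
            if event["amount"] > 1000:  # hypothetical fraud rule
                self.flagged.append(event["order_id"])
        self.offset += len(batch)  # record progress ("commit" the offset)
        return len(batch)


log = EventLog()
fraud = FraudChecker(log)

# The website publishes orders as events.
log.append({"order_id": 1, "amount": 50})
fraud.poll()

# The fraud service goes down; the website keeps accepting orders.
fraud.running = False
log.append({"order_id": 2, "amount": 5000})
log.append({"order_id": 3, "amount": 20})
fraud.poll()

# On restart, it resumes from its last offset and works through the backlog.
fraud.running = True
processed = fraud.poll()
print(processed, fraud.flagged, fraud.offset)  # 2 [2] 3
```

Because progress lives in the consumer’s offset rather than in the producer, the fraud service can be restarted, scaled or replaced independently. A real platform would also persist events and offsets durably, which this toy does not.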

Take events to heart

[So my core technology proposition here is that] event streaming needs to be at the heart of any IT infrastructure… [but why is this so?]

A good way to consider a question like this is to start with the end [goal] in mind. Most companies would like their data to be secure, organised and available in a self-service manner so that teams get access to real-time data of high quality and with minimal fuss. That’s the nirvana for most large organisations, whether it’s the data science team trying to project forward revenues or an application team trying to improve sales conversion rates.

But building a platform that provides secure, self-service data involves solving a host of subtle underlying concerns, many of which overlap with the data management techniques applied in databases. Data in flight isn’t that different from data at rest, after all. So, while the business benefits of connecting a company’s data in an organised way should be relatively self-explanatory, it’s the devil in the details that sends such efforts awry.
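The point that data in flight isn’t so different from data at rest can be illustrated with the stream-table duality: folding a stream of keyed change events, in order, produces a table of current values. The sketch below assumes a simple `(key, value)` event shape and treats a `None` value as a delete; both conventions and the `materialise` helper are assumptions for illustration.

```python
from typing import Iterable, Optional, Tuple

# Each event is (key, value); a value of None is treated as a delete.
Event = Tuple[str, Optional[float]]


def materialise(events: Iterable[Event]) -> dict:
    """Fold a stream of keyed change events into a table of latest values."""
    table: dict = {}
    for key, value in events:
        if value is None:
            table.pop(key, None)  # delete the row if present
        else:
            table[key] = value  # insert or update: last write wins
    return table


changes = [
    ("acct-1", 100.0),
    ("acct-2", 250.0),
    ("acct-1", 80.0),   # update
    ("acct-2", None),   # delete
    ("acct-3", 40.0),
]

print(materialise(changes))  # {'acct-1': 80.0, 'acct-3': 40.0}
```

Replaying only a prefix of the stream, for example `materialise(changes[:3])`, yields the table as it stood at that point, which is one reason a retained event stream can double as a source of historical state.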

What makes event streaming systems interesting for organisation-level initiatives like these is that, like databases, they are first-class data infrastructure with processing, transactions, SQL, schema validation and more built in. These tools are as important when managing the streams of data that flow between applications as they are when managing the data in the databases each application uses.
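As one example of a database-like safeguard applied to data in flight, the following sketch rejects events that don’t match a schema before they are appended, so downstream consumers never see malformed records. The dictionary-of-types schema, `ValidatedStream` and `SchemaError` are hypothetical; production systems typically describe schemas in a format such as Avro, Protobuf or JSON Schema and manage them in a registry, which this does not model.

```python
from typing import Any, Dict

# Hypothetical schema: required field names mapped to expected Python types.
ORDER_SCHEMA: Dict[str, type] = {"order_id": int, "customer": str, "amount": float}


class SchemaError(ValueError):
    pass


def validate(event: Dict[str, Any], schema: Dict[str, type]) -> None:
    """Raise SchemaError if fields are missing, unexpected or of the wrong type."""
    missing = schema.keys() - event.keys()
    extra = event.keys() - schema.keys()
    if missing or extra:
        raise SchemaError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for name, expected in schema.items():
        if not isinstance(event[name], expected):
            raise SchemaError(
                f"{name}: expected {expected.__name__}, got {type(event[name]).__name__}"
            )


class ValidatedStream:
    """Append-only stream that only accepts events matching its schema."""

    def __init__(self, schema: Dict[str, type]) -> None:
        self.schema = schema
        self.events: list = []

    def publish(self, event: Dict[str, Any]) -> None:
        validate(event, self.schema)  # reject bad data before any consumer sees it
        self.events.append(event)


orders = ValidatedStream(ORDER_SCHEMA)
orders.publish({"order_id": 1, "customer": "alice", "amount": 9.99})

try:
    orders.publish({"order_id": "2", "customer": "bob", "amount": 5.0})
except SchemaError as err:
    print("rejected:", err)  # rejected: order_id: expected int, got str

print(len(orders.events))  # 1
```

Validating at the point of publication, rather than in each consumer, means every application reading the stream can rely on the same contract.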

The road to evolution

But this analogy only goes so far: event streaming systems differ from databases in many significant ways.

Their home ground lies in high-throughput connectivity, interfacing effortlessly with the different components of an IT architecture while also providing the processing primitives of a first-class data product. It’s the combination of these database-like tools applied to at-scale, real-time datasets that makes all the difference. After all, real-world architectures aren’t static entities drawn on a whiteboard.
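A typical processing primitive applied to real-time data is windowed aggregation. This minimal sketch sums sale amounts per 60-second tumbling window, updating totals one event at a time; the event shape, window size and `tumbling_sum` name are assumptions. It never closes a window or emits results early, so questions real stream processors must answer, such as how long to wait for late events, are out of scope.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each event: (timestamp in seconds, amount). The window size is an assumption.
WINDOW_SECONDS = 60


def tumbling_sum(
    events: Iterable[Tuple[int, float]], size: int = WINDOW_SECONDS
) -> Dict[int, float]:
    """Sum amounts per fixed, non-overlapping window, keyed by window start time."""
    totals: Dict[int, float] = defaultdict(float)
    for ts, amount in events:
        window_start = ts - (ts % size)  # align the event to its window
        totals[window_start] += amount
    return dict(totals)


sales = [(5, 10.0), (42, 15.0), (61, 7.5), (119, 2.5), (130, 20.0)]
print(tumbling_sum(sales))  # {0: 25.0, 60: 10.0, 120: 20.0}
```

Tumbling windows are the simplest choice because each event belongs to exactly one window; hopping or session windows trade that simplicity for overlapping or activity-based grouping.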