Source: marktechpost.com

NVIDIA’s open-source toolkit, NVIDIA NeMo (Neural Modules), is a major step forward for Conversational AI. Built on PyTorch, it lets developers quickly build, train, and fine-tune conversational AI models.

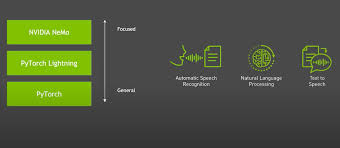

As the world becomes more digital, Conversational AI offers a way for humans and computers to communicate. It is the set of technologies behind applications such as automated messaging, speech recognition, voice chatbots, and text-to-speech. It broadly comprises three areas of AI research: automatic speech recognition (ASR), natural language processing (NLP), and speech synthesis (or text-to-speech, TTS).

Conversational AI has shaped the path of human-computer interaction, making it more accessible and exciting. The latest advancements in Conversational AI like NVIDIA NeMo help bridge the gap between machines and humans.

NVIDIA NeMo consists of two parts: NeMo Core and NeMo Collections. NeMo Core provides the functionality common to all models, whereas NeMo Collections group models and building blocks by domain. In NeMo’s Speech collection (nemo_asr), you’ll find models and various building blocks for speech recognition, command recognition, speaker identification, speaker verification, and voice activity detection. NeMo’s NLP collection (nemo_nlp) contains models for tasks such as question answering, punctuation, named entity recognition, and many others. Finally, in NeMo’s Speech Synthesis collection (nemo_tts), you’ll find several spectrogram generators and vocoders, which let you generate synthetic speech.

There are three main concepts in NeMo: model, neural module, and neural type.

- Models contain all the information needed for training and fine-tuning: the neural network implementation, tokenization, data augmentation, the optimization algorithm, and infrastructure details such as the number of GPU nodes.

- Neural modules are the conceptual building blocks of a model, such as encoders and decoders, each responsible for a different task. A neural module represents a logical part of a neural network and forms the basis for describing the model and its training process. Collections contain many neural modules that can be reused whenever required.

- Inputs and outputs to Neural Modules are typed with Neural Types. A Neural Type is a pair that contains the information about the tensor’s axes layout and semantics of its elements. Every Neural Module has input_types and output_types properties that describe what kinds of inputs this module accepts and what types of outputs it returns.
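The idea of typed inputs and outputs can be illustrated with a small, self-contained sketch. This is not NeMo's API: `ToyNeuralType`, `ToyModule`, and `check_connection` are hypothetical names invented here, and the axis labels and port names are assumptions chosen only for illustration. The sketch shows how pairing an axes layout with element semantics lets a mismatch between two modules be caught before any tensor is passed.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ToyNeuralType:
    """Hypothetical stand-in for a neural type: axes layout plus element semantics."""
    axes: Tuple[str, ...]  # e.g. ("B", "D", "T") for batch, feature dimension, time
    elements: str          # what the values mean, e.g. "spectrogram"

    def compatible_with(self, other: "ToyNeuralType") -> bool:
        # In this sketch, types match only if both layout and semantics agree.
        return self.axes == other.axes and self.elements == other.elements


class ToyModule:
    """Hypothetical module that declares input_types and output_types."""

    def __init__(self, name: str,
                 input_types: Dict[str, ToyNeuralType],
                 output_types: Dict[str, ToyNeuralType]):
        self.name = name
        self.input_types = input_types
        self.output_types = output_types


def check_connection(producer: ToyModule, out_port: str,
                     consumer: ToyModule, in_port: str) -> bool:
    """Raise TypeError if the producer's output does not fit the consumer's input."""
    out_type = producer.output_types[out_port]
    in_type = consumer.input_types[in_port]
    if not out_type.compatible_with(in_type):
        raise TypeError(
            f"{producer.name}.{out_port} {out_type} does not match "
            f"{consumer.name}.{in_port} {in_type}"
        )
    return True


# Illustrative types and modules (all names are assumptions).
spectrogram = ToyNeuralType(("B", "D", "T"), "spectrogram")
encoded = ToyNeuralType(("B", "D", "T"), "encoded")
log_probs = ToyNeuralType(("B", "T", "D"), "log_probs")

preprocessor = ToyModule("preprocessor", {}, {"features": spectrogram})
encoder = ToyModule("encoder", {"audio_signal": spectrogram}, {"outputs": encoded})
decoder = ToyModule("decoder", {"encoder_output": encoded}, {"logprobs": log_probs})
```

Here `check_connection(preprocessor, "features", encoder, "audio_signal")` succeeds, while wiring the preprocessor directly into the decoder raises a `TypeError`, because the element semantics differ even though the axes layout is the same.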

Although NeMo is built on PyTorch, it also works well with projects such as PyTorch Lightning and Hydra. Integration with Lightning makes it easier to train models with mixed precision on Tensor Cores and to scale training to multiple GPUs and compute nodes. Lightning also provides features such as logging, checkpointing, and overfit checking. Hydra allows scripts to be parametrized, which keeps them well organized and streamlines everyday tasks for users.
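To make the idea of script parametrization concrete, here is a minimal plain-Python sketch of dotted `key=value` overrides applied to a nested default configuration. It does not use Hydra and is not Hydra's implementation: `apply_overrides`, `parse_value`, and every configuration key below are hypothetical, chosen only to show the general pattern of keeping defaults in one place and changing individual values per run.

```python
import copy


def parse_value(raw: str):
    """Convert a raw string to int, float, or bool where possible; else keep the string."""
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    if raw.lower() in ("true", "false"):
        return raw.lower() == "true"
    return raw


def apply_overrides(config: dict, overrides: list) -> dict:
    """Return a copy of config with dotted key=value overrides applied."""
    result = copy.deepcopy(config)  # leave the defaults untouched
    for item in overrides:
        dotted_key, raw_value = item.split("=", 1)
        *parents, leaf = dotted_key.split(".")
        node = result
        for key in parents:
            node = node.setdefault(key, {})  # create missing sections on the way down
        node[leaf] = parse_value(raw_value)
    return result


# Hypothetical defaults; the key names are illustrative assumptions.
defaults = {
    "trainer": {"devices": 1, "max_epochs": 10, "precision": 32},
    "model": {"optim": {"lr": 0.001}},
}

cfg = apply_overrides(
    defaults,
    ["trainer.devices=4", "trainer.precision=16", "model.optim.lr=0.01"],
)
```

After this runs, `cfg` carries the overridden values while `defaults` is unchanged, which is the property that makes a single script reusable across experiments.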