Source – devops.com

OverOps has launched a namesake platform that employs machine learning algorithms to capture data from an IT environment and identify potential issues before a DevOps team decides to promote an application into production.

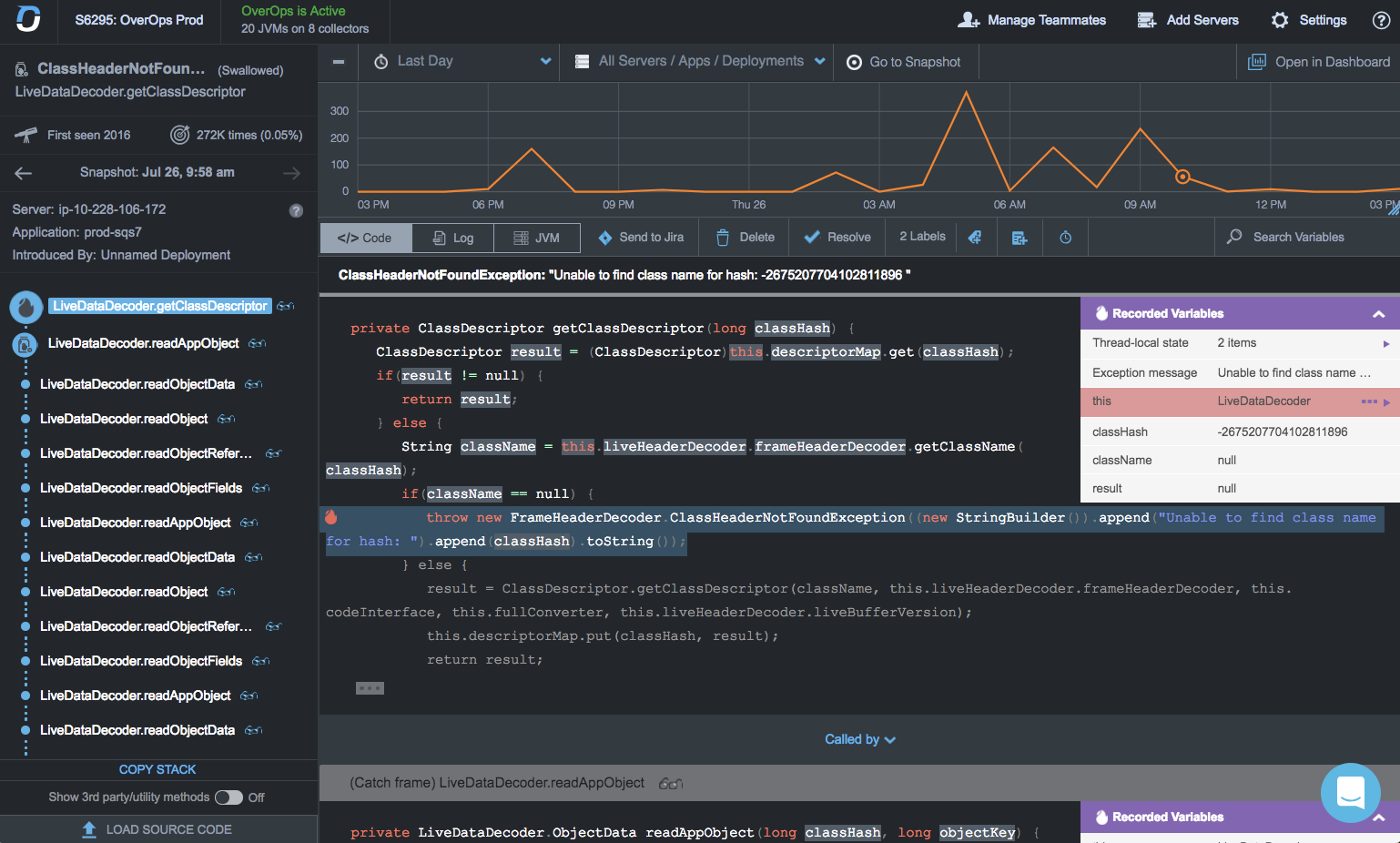

Company CTO Tal Weiss said the OverOps Platform is unique in that, rather than relying on log data, it combines static and dynamic analysis of code as it executes to detect issues. That data can then be accessed either via dashboards or shared with other tools via an open application programming interface (API). The dashboards included with the OverOps Platform are based on the open source Grafana project.

That approach makes it possible to advance usage of artificial intelligence (AI) within IT operations without necessarily requiring that every tool in a DevOps pipeline be upgraded to include support for machine learning algorithms, Weiss said.

The platform also provides access to either an AWS Lambda-based framework or a separate on-premises serverless computing framework, which DevOps teams can use to create their own custom functions and workflows.

Weiss said OverOps is designed to capture machine data about every error and exception at the moment it occurs, including the values of all variables across the execution stack, the frequency and failure rate of each error, the classification of new and reintroduced errors and the associated release numbers for each event. Log data, by comparison, is relatively shallow, which makes it challenging to pinpoint the precise root cause when troubleshooting an issue, he said. He noted that the OverOps Platform also offers visibility into uncaught and swallowed exceptions that would otherwise never appear in log files.

DevOps teams spend an inordinate amount of time analyzing log files in the hope of discovering an anomaly. But as IT environments continue to scale out, analyzing millions, possibly even billions, of log files becomes impractical. OverOps is making the case for employing machine learning algorithms to analyze events before a log file is even created, which eliminates the need to find some way to store log files before they can be analyzed.

There’s naturally a lot of trepidation when it comes to using machine learning algorithms and other forms of AI to manage IT. But as the complexity of IT environments continues to increase, it’s clear DevOps teams will need to rely more on AI to manage IT at levels of scale that were once considered unimaginable. For example, while microservices based on containers may accelerate the rate at which applications can be developed and updated, they can also introduce a phenomenal amount of operational complexity. Most DevOps professionals would rather automate as much of the manual labor associated with operations as possible, especially if that leads to more certainty about the quality of the software being promoted into a production environment.

Of course, while making use of machine learning algorithms to analyze code represents a step forward in terms of automation, it’s still a very long way from eliminating the need for DevOps teams altogether.