Source: straitstimes.com

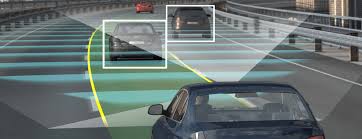

Who should be held legally responsible when a self-driving car hits a pedestrian? Should the finger be pointed at the car owner, manufacturer or the developers of the artificial intelligence (AI) software that drives the car?

Struggles to assign liability for accidents involving bleeding-edge technologies like AI have dominated global conversations, even as trials of such technologies are under way in many places, including Singapore, South Korea and Europe.

During the third annual edition of the TechLaw.Fest forum last Wednesday, panellists said that Singapore’s laws are currently unable to effectively assign liability in the case of losses or harm suffered in accidents involving AI or robotics technology.

“The unique ability of autonomous robots and AI systems to operate independently without any human involvement muddies the waters of liability,” said lawyer and Singapore Academy of Law Robotics and Artificial Intelligence Sub-committee co-chair Charles Lim.

He was speaking in a webinar titled “Why Robot? Liability for AI system failures”.

Last month, the 11-member Robotics and Artificial Intelligence Sub-committee published a report on what can be done to establish civil liability in such cases.

“There are multiple factors (in play) such as the AI system’s underlying software code, the data it was trained on, and the external environment the system is deployed in,” he said.

For example, an accident caused by a self-driving car's AI system could be due to a bug in the system's software, or even to an unusual situation that the system has not been trained to recognise, such as a monitor lizard crossing the road.

Human decisions could also influence the events leading up to an accident, as existing self-driving cars have a manual mode that allows a human driver to take control.

A software update applied by the AI system’s developers could also introduce new, unforeseen bugs.

This makes retracing every step of an AI system’s decision-making process to prove liability a complex and costly task, Mr Lim said.

Fellow panellist and robotics and AI sub-committee member Josh Lee said a first step for lawmakers here could be to figure out what to call the person behind the wheel.

“We propose that the person be called a ‘user-in-charge’, because he or she may not be carrying out the task of driving, but retains the ability to take over when necessary,” he said. “This can have application beyond driverless vehicles… in many scenarios today, such as medical diagnosis.”

The term "user-in-charge" was first mooted by the United Kingdom Law Commission in 2018, when it tabled proposals to place such users of fully automated vehicles under a separate regulatory regime.

Robotics and AI sub-committee member and lawyer Beverly Lim said during the webinar that the one person who should not be held liable is the driver behind the wheel, because he or she would have bought the vehicle believing that the AI would be a more reliable driver.

But the biggest liability issue involving robotics and AI is ensuring that victims can obtain compensation.

“For example, how are they supposed to prove (liability) when they don’t have access to the data, or might not understand it?” said Ms Lim.