Source: infoq.com

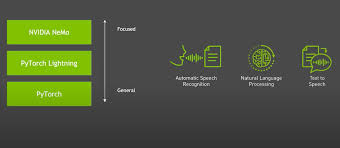

PyTorch, Facebook’s open-source deep-learning framework, announced the release of version 1.4. This release, which will be the last version to support Python 2, includes improvements to distributed training and mobile inference and introduces support for Java.

This release follows the recent announcements and presentations at the 2019 Conference on Neural Information Processing Systems (NeurIPS) in December. For training large models, the release includes a distributed framework to support model-parallel training across multiple GPUs. Improvements to PyTorch Mobile allow developers to customize their build scripts, which can greatly reduce the storage required by models. Building on the Android interface for PyTorch Mobile, the release includes experimental Java bindings for using TorchScript models to perform inference. PyTorch also supports Python and C++; this release will be the last that supports Python 2 and C++11. According to the release notes:

> The release contains over 1,500 commits and a significant amount of effort in areas spanning existing areas like JIT, ONNX, Distributed, Performance and Eager Frontend Improvements and improvements to experimental areas like mobile and quantization.

Recent trends in deep-learning research, particularly in natural-language processing (NLP), have produced larger and more complex models such as RoBERTa, with hundreds of millions of parameters. These models are too large to fit within the memory of a single GPU, but a technique called model-parallel training allows different subsets of the model's parameters to be handled by different GPUs. Previous versions of PyTorch supported single-machine model-parallel training, which requires that all the GPUs used for training be hosted in the same machine. By contrast, PyTorch 1.4 introduces a distributed remote procedure call (RPC) system that supports model-parallel training across many machines.
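The idea behind model-parallel training can be illustrated with a toy sketch in plain Python. This is not PyTorch code and does not use the new RPC API: the `Stage` class, the `partition` helper, and the device labels `gpu:0` and `gpu:1` are hypothetical names chosen for illustration. Each stage holds only its own subset of the layers, and activations are handed from one stage to the next.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix by an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


@dataclass
class Stage:
    """One model partition: the layers held by a single (hypothetical) device."""
    device: str
    layers: List[Matrix]

    def forward(self, x: Vector) -> Vector:
        # Apply each linear layer followed by a ReLU.
        for weights in self.layers:
            x = [max(0.0, v) for v in matvec(weights, x)]
        return x

    def num_parameters(self) -> int:
        return sum(len(row) for weights in self.layers for row in weights)


def partition(layers: List[Matrix], devices: List[str]) -> List[Stage]:
    """Assign contiguous blocks of layers to devices, as evenly as possible."""
    per_device = -(-len(layers) // len(devices))  # ceiling division
    return [
        Stage(device, layers[i * per_device:(i + 1) * per_device])
        for i, device in enumerate(devices)
    ]


def forward(stages: List[Stage], x: Vector) -> Vector:
    """Pass the input through every stage in order.

    In a real system each hand-off would be a device-to-device copy
    or a remote call to another machine.
    """
    for stage in stages:
        x = stage.forward(x)
    return x


# Four 2x2 identity layers split across two hypothetical devices.
layers = [[[1.0, 0.0], [0.0, 1.0]] for _ in range(4)]
stages = partition(layers, ["gpu:0", "gpu:1"])
output = forward(stages, [3.0, -2.0])
```

Here each stage stores 8 of the model's 16 parameters, so no single device needs to hold the whole model. The cost is that stages must exchange activations, which is the communication that a distributed RPC layer has to carry when the stages live on different machines.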

After a model is trained, it must be deployed and used for inference or prediction. Because many applications are deployed on mobile devices with limited compute, memory, and storage resources, large models often cannot be deployed as-is. PyTorch 1.3 introduced PyTorch Mobile, which builds on TorchScript and aims to shorten end-to-end development cycle time by supporting the same APIs across different platforms, eliminating the need to export models to a mobile framework such as Caffe2. The 1.4 release allows developers to customize their build packages to include only the PyTorch operators needed by their models. The PyTorch team reports that customized packages can be “40% to 50% smaller than the prebuilt PyTorch mobile library.” With the new Java bindings, developers can invoke TorchScript models directly from Java code; previous versions supported only Python and C++. The Java bindings are available only on Linux.
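The operator-selection step behind a customized build can be sketched in plain Python. This is an illustration of the idea only, not the PyTorch Mobile build tooling: `model_graph`, `prebuilt_ops`, and `plan_custom_build` are hypothetical, and the operator names are examples rather than a list taken from any real model or library build.

```python
from typing import Dict, List, Set

# Hypothetical stand-in for a scripted model: each node names the operator it calls.
model_graph: List[Dict[str, str]] = [
    {"name": "conv1", "op": "aten::conv2d"},
    {"name": "relu1", "op": "aten::relu"},
    {"name": "conv2", "op": "aten::conv2d"},
    {"name": "relu2", "op": "aten::relu"},
    {"name": "fc", "op": "aten::linear"},
]

# Hypothetical set of operators shipped in a full prebuilt library.
prebuilt_ops: Set[str] = {
    "aten::conv2d",
    "aten::linear",
    "aten::lstm",
    "aten::max_pool2d",
    "aten::relu",
    "aten::softmax",
}


def required_operators(graph: List[Dict[str, str]]) -> List[str]:
    """Collect the distinct operators a model uses, in sorted order."""
    return sorted({node["op"] for node in graph})


def plan_custom_build(graph: List[Dict[str, str]], available: Set[str]) -> Dict[str, List[str]]:
    """Decide which operators a model-specific build keeps and which it drops."""
    needed = set(required_operators(graph))
    missing = needed - available
    if missing:
        raise ValueError(f"model uses unsupported operators: {sorted(missing)}")
    return {"keep": sorted(needed), "drop": sorted(available - needed)}


plan = plan_custom_build(model_graph, prebuilt_ops)
```

In this toy case the model needs three of the six available operators, so the other three can be left out of the package. The size savings of a real build depend on which operators a given model actually uses.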

Although rival deep-learning framework TensorFlow ranks as the leading choice for commercial applications, PyTorch has the lead in the research community. At the 2019 NeurIPS conference in December, PyTorch was used in 70% of the presented papers that cited a framework. Recently, both Preferred Networks, Inc. (PFN) and research consortium OpenAI announced moves to PyTorch. OpenAI claimed that “switching to PyTorch decreased our iteration time on research ideas in generative modeling from weeks to days.” In a discussion thread about the announcement, a user on Hacker News noted:

> At work, we switched over from TensorFlow to PyTorch when 1.0 was released, both for R&D and production… and our productivity and happiness with PyTorch noticeably, significantly improved.

The PyTorch source code and release notes for version 1.4 are available on GitHub.