Source – https://analyticsindiamag.com/

Raspberry Pi is set to ramp up its machine learning capabilities, co-founder Eben Upton said at the tinyML Summit 2021. According to Upton, the company's in-house chip-development team has been working on improving the hardware's machine learning performance.

The Raspberry Pi 400 is a computer built into a single compact keyboard. It is based on the Raspberry Pi 4, which was released in 2019. The Raspberry Pi 4 brought significant upgrades to its hardware, architecture, and operating system.

The Pi 4 can run basic machine learning algorithms for image recognition using input from a camera connected to its built-in camera port. It can handle basic tasks such as recognising objects, detecting movement, or running simple inference workloads. Thanks to its faster CPU and RAM, code compiles more quickly, and algorithms load and run faster. Machine learning tasks also perform about twice as well on the Pi 4, which is considerably more powerful than earlier models.

Going by Upton's tinyML Summit presentation, most of this work appears to focus on developing lightweight accelerators for ultra-low-power machine learning applications.

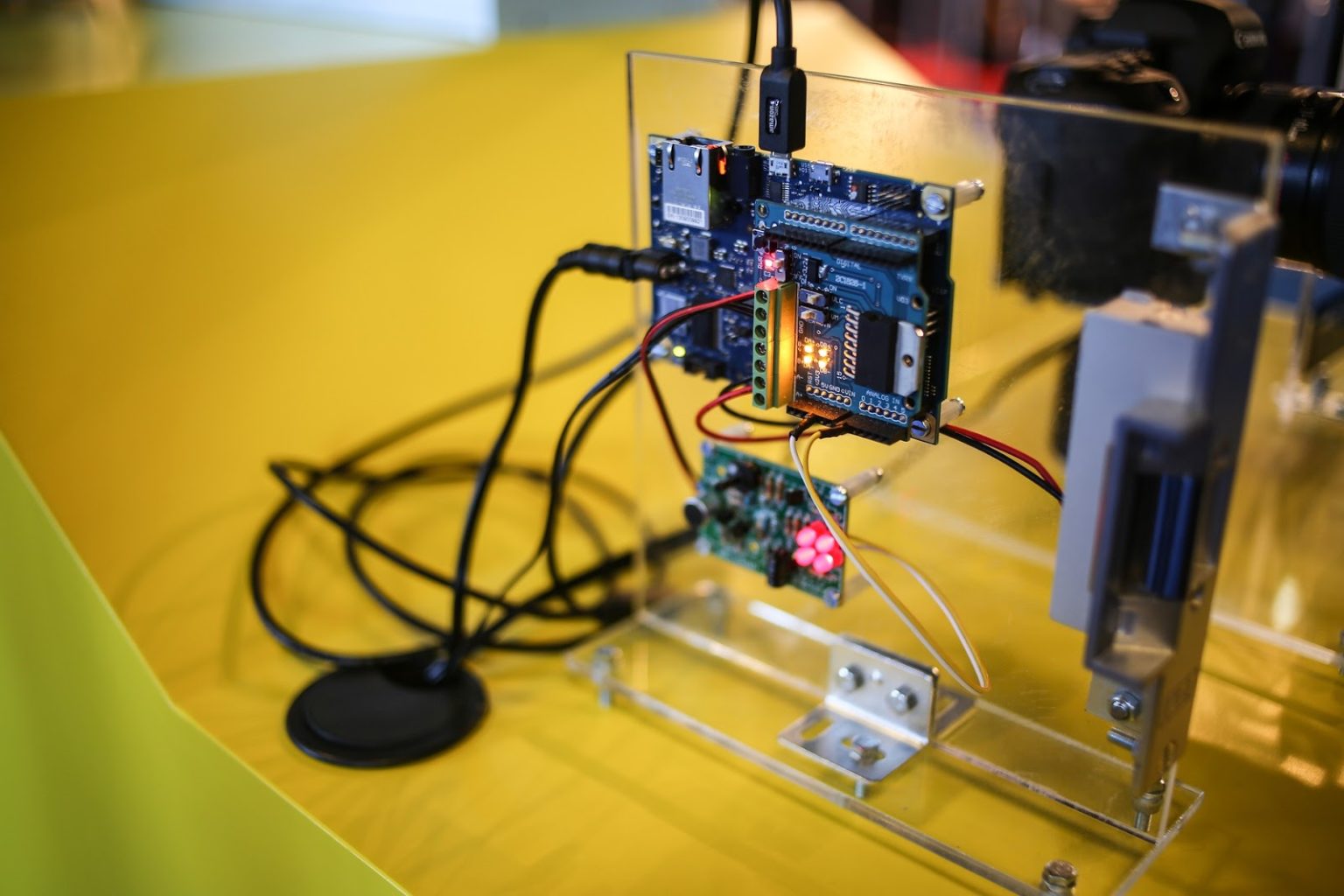

For this, Raspberry Pi will use any of three current-generation 'Pi Silicon' boards, which are low-cost, high-performance boards. Two of them, SparkFun's MicroMod RP2040 and Arduino's Nano RP2040 Connect, come from board partners.

The third is the Pico4ML from ArduCam, which combines a camera, microphone, and screen with machine learning in a Pico-sized package. It is a standalone microcontroller board that does not need a separate host computer.

Upton also said future chips might include lightweight accelerators capable of perhaps 4 to 8 multiply-accumulate operations (MACs) per clock cycle, compared with fewer than one MAC per clock cycle on the RP2040.
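To put these MAC figures in perspective, a multiply-accumulate computes acc + a × b. A dot product of length N costs N MACs, so a dense neural-network layer with n inputs and m outputs needs n × m of them. The Python sketch below converts a MACs-per-cycle rate into peak throughput and an idealised lower-bound latency. It is an illustration under stated assumptions, not a model of any real chip. It assumes the RP2040's rated 133 MHz clock in every case and uses 0.5 MACs per cycle as an illustrative stand-in for "fewer than one". The 256 × 128 layer and all function names are hypothetical, and it ignores memory stalls and data-type effects.

```python
# Illustrative MAC-throughput arithmetic (hypothetical helper names).
# Assumptions: a 133 MHz clock (the RP2040's rated frequency) for all cases;
# 0.5 MACs/cycle stands in for "fewer than one"; 4 and 8 are Upton's
# speculative range for future chips. Memory stalls are ignored.

CLOCK_HZ = 133_000_000


def mac(acc: int, a: int, b: int) -> int:
    """One multiply-accumulate step: acc + a * b."""
    return acc + a * b


def dot(xs: list[int], ws: list[int]) -> int:
    """A dot product is a chain of MACs, one per element pair."""
    acc = 0
    for x, w in zip(xs, ws):
        acc = mac(acc, x, w)
    return acc


def peak_macs_per_second(macs_per_cycle: float, clock_hz: int = CLOCK_HZ) -> float:
    """Ideal throughput: MACs issued per cycle times cycles per second."""
    return macs_per_cycle * clock_hz


def layer_latency_ms(inputs: int, outputs: int, macs_per_cycle: float,
                     clock_hz: int = CLOCK_HZ) -> float:
    """Lower-bound time for a dense layer that needs inputs * outputs MACs."""
    total_macs = inputs * outputs
    return total_macs / peak_macs_per_second(macs_per_cycle, clock_hz) * 1000


if __name__ == "__main__":
    print(dot([1, 2, 3], [4, 5, 6]))  # 1*4 + 2*5 + 3*6 = 32
    for rate in (0.5, 4, 8):
        print(f"{rate} MACs/cycle: "
              f"{peak_macs_per_second(rate):.3g} MAC/s, "
              f"{layer_latency_ms(256, 128, rate):.4f} ms per 256x128 layer")
```

Under these assumptions, moving from 0.5 to 8 MACs per cycle cuts the ideal layer latency by a factor of 16. Real gains would depend on how well the accelerator is kept fed with data.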