Source – https://www.statnews.com/

Assistive technologies such as handheld tablets and eye-tracking devices are increasingly helping give voice to individuals with paralysis and speech impediments who otherwise would not be able to communicate. Now, researchers are directly harnessing electrical brain activity to help these individuals.

In a study published Wednesday in the New England Journal of Medicine, researchers at the University of California, San Francisco, describe an approach that combines a brain-computer interface with machine learning models, allowing them to generate text from the electrical brain activity of a patient paralyzed by a stroke.

Other brain-computer interfaces, which transform brain signals into commands, have used neural activity while individuals attempted handwriting movements to produce letters. In a departure from previous work, the new study taps into the speech production areas of the brain to generate entire words and sentences that show up on a screen.

This may be a more direct and effective way of producing speech and helping patients communicate than using a computer to spell out letters one by one, said David Moses, a UCSF postdoctoral researcher and first author of the paper.

The study was conducted in a single 36-year-old patient with anarthria, a condition in which people cannot articulate words because they have lost control of the muscles involved in speech, including those in the larynx, lips, and tongue. His anarthria resulted from a stroke more than 15 years earlier that left him paralyzed.

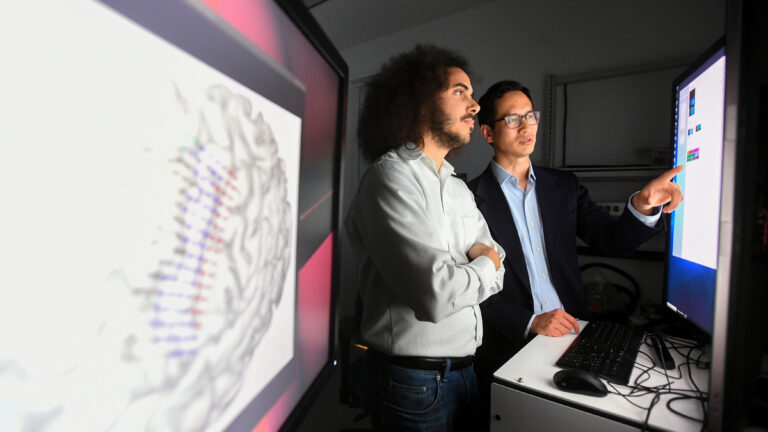

The researchers implanted an array of electrodes over the area of the patient’s brain that controls the vocal tract, known as the sensorimotor cortex. They recorded the electrical activity in his brain while he tried to say individual words and used a machine learning algorithm to match those brain signals to specific words. Using this model, the scientists then prompted the patient with sentences and asked him to read them as though he were trying to say them out loud. The algorithm correctly identified what the patient was trying to say with 75% accuracy.

Although the experiment involved only one patient and a vocabulary of just 50 words, the study shows that “the critical neural signals [for speech production] exist and that they can be leveraged for this application,” said Vikash Gilja, an associate professor at the University of California, San Diego, who was not involved in the study.

To Moses and his team, this study represents a proof of concept. “We started with a small vocabulary to prove in principle that this is possible,” he said, and it was. “Moving forward, if someone was trying to get brain surgery to get a device that could help them communicate, they would want to be able to express sentences made up of more than just those 50 words.”

STAT spoke with Moses to learn more about the development of the technology and how it could be applied in the future. This interview has been edited for length and clarity.

What problems were you seeking to address?

It’s kind of easy for us to take speech for granted. We have met people who are unable to speak because of paralysis, and it can be an extremely devastating condition for them to be in. It hadn’t been understood before whether the brain signals that normally control the vocal tract could be recorded by an implanted neural device and translated into attempted speech.

Can you describe how the technology works? What information goes into it and how is that analyzed to produce words?

This is in no way mind reading; our system is able to generate words based on the person’s attempts to speak. While he’s trying to say the words that he’s presented [with], we record his brain activity, use machine learning models to detect subtle patterns, and understand how those patterns are associated with words. Then we use those models with a natural language model to decode actual sentences when he is trying to speak.

What’s the importance of including the natural language model?

You could imagine when you’re typing on your phone and it figures, “Oh, this might not be what you want to say,” that can be very helpful. Even with the results that we report, it’s imperfect. It helps to be able to use the language model and the structure of English to improve your predictions.

What was surprising to you about what you learned during this study?

One of the very pleasant surprises was that you were able to see these functional patterns of brain activity that have remained intact for someone who hasn’t spoken in over a decade. As long as someone [can imagine] producing the sounds of what their vocal tract would normally do, it’s possible for us to be able to record that activity and identify these patterns.

How did you feel when you realized that the system was in fact producing the words that the patient was trying to say?

My first thought was, “OK, that’s just one sentence. It could have been a fluke.” But then we saw that it was working sentence after sentence, and it was extremely thrilling and rewarding. I know that the participant also felt this way, because you could tell from looking at him that he was getting very excited.

What are the next steps to improving the system?

We need to validate this in more than one person. And we want to know how far this technology can go. Can it, for example, be used to help someone who’s completely locked in, who can only move their eyes and cannot move any other muscles? If we show that it can work reliably in people with that level of paralysis, then I think that’s a strong indicator that this is really a viable approach.

How do you envision this technology being applied in the future?

Our ultimate goal is to completely restore speech to someone who’s lost it. That would mean that for any sound someone wants to make, the system would be able to produce that sound for them by synthesizing their voice. You could even restore some personal aspects of their speech, such as intonation, pitch, and accent. It’s going to take a lot of effort and we have a lot of work to do, but I think this is a really strong start.