Source – electronics360.globalspec.com

“The development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence, it will take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.” This sounds like an excerpt from the screenplay of the 1984 film “The Terminator,” but it’s actually physicist Stephen Hawking in late 2014, talking to the BBC.

As part of the scientific advisory board for the Future of Life Institute based in Boston, Hawking and engineer/entrepreneur Elon Musk helped to create an open letter on artificial intelligence that highlighted both the positive and negative aspects of AI. While the letter is intended to be informative rather than alarmist, it’s hard to ignore a singular phrase rumbling just beneath the surface: “Our AI systems must do what we want them to do.”

The good news is that AI researchers are thinking seriously about the ethical issues involved, sometimes referred to as “roboethics.” That term was coined by Italian robotics pioneer Gianmarco Veruggio, who organized the EURON (European Robotics Research Network) Roboethics Atelier and coordinated the EURON Roboethics Roadmap in 2006. As an overview of the ethical issues in robot development, the Roadmap raises philosophical questions that still resonate over a decade later.

One of these concerns the very definition of a robot: Is a robot merely a machine, incapable of autonomy beyond that of its designer? Or do robots represent the evolution of a new species that will exceed both the limited intellectual capacity and the flawed moral dimensions of humankind?

Heady stuff, and perhaps a bit premature given the current level of technological development. Yet there’s certainly no question that AI has already had a significant impact on numerous sectors of society and, as a consequence, has raised ethical concerns and questions without easy answers.

Consider, for instance, an emergency in which a self-driving car must weigh a large probability of a minor accident against a small risk of a major one. MIT’s Moral Machine uses self-driving car scenarios like this one to illustrate the kinds of moral decisions that machine intelligence may have to make. Although autonomous vehicles are not yet ubiquitous, various reports indicate a very real possibility that automakers will roll them out en masse before federal laws regulating their use are in place.

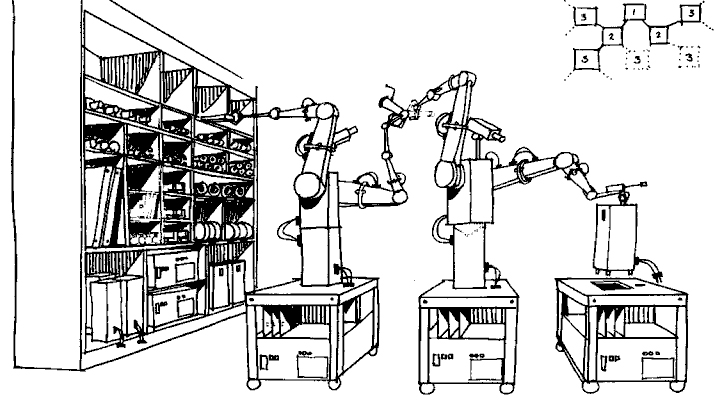

Perhaps job displacement hits even closer to home. Is it wrong to allow robots to take jobs once held by people? If the answer that immediately leaps to mind is “yes,” consider this: Would it not be equally wrong to halt society’s technological progress? (Imagine, for instance, what the world would look like today if the Industrial Revolution had been stopped in its tracks.) Looking further down the road, as robots take on more and more of the work of production, could humanity be propelled into a “leisure society” in which work is no longer necessary? Wonderful as that might sound at first blush, it could also mean less need for self-sufficiency and a softening of humanity’s edge, factors that would make Hawking’s scenario all the more credible.

A recent study led by the Future of Humanity Institute at the University of Oxford included a broad survey of AI experts and arrived at some very date-specific predictions of when machines will be able to take on different types of job tasks. These begin within the next decade; examples include language translator by 2024, truck driver by 2027, retail worker by 2031, and surgeon (yes, surgeon) by 2053.

Another expert, Erik Brynjolfsson, director of the MIT Initiative on the Digital Economy, recently told the web magazine CityLab that the decline in American factory jobs is the result not of work being shipped overseas but of increased factory automation. He also stated his belief that “in 50 years, there may be very few jobs which can’t be done by machines.”

Brynjolfsson is, however, cautiously optimistic about the possibilities. With less need to work and greater resources at its disposal, he says, humanity can turn to addressing longstanding problems such as disease, environmental degradation and so on; he also believes that “smart machines can help us have a lighter footprint on the planet.” His open letter on the digital economy proposes ways in which humanity can positively shape the workforce-related effects of technological change: public policy changes, new organizational models for business, ongoing research and openness to new ways of thinking. The Future of Life Institute open letter also links to an outline of recommended research priorities for maximizing AI’s societal benefit.

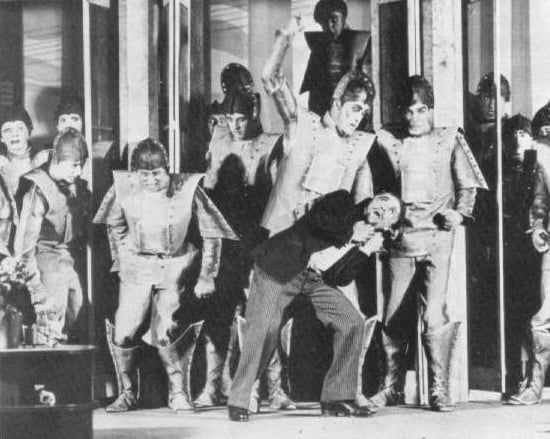

Production still from “R.U.R.” Source: Public domain/Wikimedia Commons.

Recommendations such as these strike a decidedly more positive chord than the fears of an AI takeover stirred up by a century’s worth of dystopian science fiction, dating back at least as far as the 1920 play R.U.R., a fantasy about a rebellion of robot workers leading to the extinction of the human race. Incidentally, that work, by Czech writer Karel Čapek, introduced the word “robot” into the English language.

In closing, a more light-hearted take on the possibility of being replaced by a machine may be in order. Based on a 2013 Oxford Martin School study, the website Will Robots Take My Job? prompts a user to enter a job title, then spits back various statistics, including an “automation risk level.” Examples range from professions like forensic science technician (0.97 percent probability of automation, or “Totally Safe”) to jobs like vegetation pesticide handler (97 percent probability, phrased as “You Are Doomed”). On the bright side, each listing includes a link to current career opportunities in that field.