Today, scikit-learn is used by organizations across the globe, including the likes of Spotify, J.P. Morgan, Booking.com, Evernote, and many more. Without a doubt, scikit-learn provides handy tools with an easy-to-understand syntax. Among the pantheon of popular Python libraries, scikit-learn ranks in the top echelon alongside Pandas and NumPy. Together, these three libraries provide a complete solution for the various steps of the machine learning pipeline. Not to mention, we all love the clean, uniform code and functions that scikit-learn provides.

The most recent release of scikit-learn, v0.22, has more than 20 active contributors. It adds some excellent features to the library's arsenal: fixes for several major pain points, plus new features that were previously available only in other libraries and often caused package conflicts. Alongside bug fixes and performance improvements, here are some of the new features included in scikit-learn's latest version.

Stacking Classifier and Regressor

Stacking is one of the more advanced ensemble techniques, made popular by machine learning competition winners at DataHack and Kaggle. It is an ensemble learning technique that uses the predictions of several models (for instance, a decision tree, KNN, or SVM) to build a new model. The mlxtend library provides an API for stacking in Python. Now scikit-learn can do the same through its familiar API, and it is quite intuitive.
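As a rough illustration of the idea, here is a minimal sketch using `StackingClassifier` from `sklearn.ensemble`. The iris dataset, the three base models, and the logistic regression used as the final model are all assumptions chosen for the example, not something the release prescribes.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Toy dataset, chosen only for illustration.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

# Base models whose predictions feed the final model.
base_models = [
    ("tree", DecisionTreeClassifier(random_state=0)),
    ("knn", KNeighborsClassifier()),
    ("svm", SVC(random_state=0)),
]

# The final estimator is trained on cross-validated predictions
# of the base models (5 folds here).
stack = StackingClassifier(
    estimators=base_models,
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,
)
stack.fit(X_train, y_train)

stacking_accuracy = stack.score(X_test, y_test)
print(f"Stacking accuracy: {stacking_accuracy:.3f}")
```

`StackingRegressor` follows the same pattern for regression problems, with regressors as the base and final models.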

Permutation-Based Feature Importance

As the name suggests, this technique assigns an importance to each feature by permuting (shuffling) that feature's values and measuring the resulting drop in model performance. Scikit-learn now has a built-in facility for permutation-based feature importance.

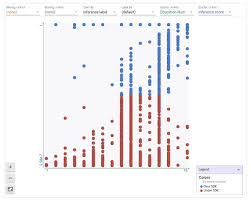
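The procedure can be sketched with `permutation_importance` from `sklearn.inspection`. The wine dataset, the random forest model, and the choice of 10 repeats are assumptions made for the example.

```python
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Toy dataset and model, chosen only for illustration.
data = load_wine()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, stratify=data.target, random_state=0
)
model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

# Shuffle each feature 10 times on held-out data and record
# how much the score drops each time.
result = permutation_importance(
    model, X_test, y_test, n_repeats=10, random_state=0
)

# Print the five features with the largest mean drop in score.
ranking = result.importances_mean.argsort()[::-1]
for idx in ranking[:5]:
    name = data.feature_names[idx]
    mean = result.importances_mean[idx]
    std = result.importances_std[idx]
    print(f"{name:<30} {mean:.3f} +/- {std:.3f}")
```

Computing the importances on a held-out set, as done here, is a design choice: it measures how much the model relies on each feature for generalization rather than for fitting the training data.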

Multiclass Support for ROC AUC

The ROC AUC score for binary classification is very useful, particularly for imbalanced datasets. However, there was no support for multiclass classification until now, and we had to code it by hand.

There is also a new plotting API that makes it convenient to plot and compare ROC curves from different machine learning models.
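For the multiclass score, a minimal sketch could look like the following. It uses the `multi_class` parameter of `roc_auc_score`. The iris dataset and the logistic regression model are assumptions for the example, and the plotting API is left out to keep the sketch free of plotting dependencies.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Three-class toy dataset, chosen only for illustration.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Multiclass ROC AUC needs one probability column per class.
proba = clf.predict_proba(X_test)

# "ovr" compares each class against the rest;
# "ovo" averages over all pairs of classes.
auc_ovr = roc_auc_score(y_test, proba, multi_class="ovr")
auc_ovo = roc_auc_score(y_test, proba, multi_class="ovo")

print(f"One-vs-rest AUC: {auc_ovr:.3f}")
print(f"One-vs-one AUC:  {auc_ovo:.3f}")
```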

KNN-Based Imputation

In KNN-based imputation, a missing value of an attribute is imputed using that attribute's values in the samples most similar to the one with the missing value. The assumption behind using KNN for missing values is that a point's value can be approximated by the values of the points closest to it, based on the other variables.
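A small sketch with `KNNImputer` from `sklearn.impute` shows the idea. The toy matrix and `n_neighbors=2` are assumptions chosen so the result is easy to check by hand.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Toy data with two missing entries (np.nan).
X = np.array([
    [1.0, 2.0, np.nan],
    [3.0, 4.0, 3.0],
    [np.nan, 6.0, 5.0],
    [8.0, 8.0, 7.0],
])

# Each missing entry is replaced by the mean of that column
# over the 2 nearest rows that have a value there. Distances
# are computed only on the coordinates both rows have.
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)

print(X_filled)
# The gap in row 0 becomes 4.0 (mean of 3.0 and 5.0);
# the gap in row 2 becomes 5.5 (mean of 3.0 and 8.0).
```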

Tree Pruning

Pruning provides another option for controlling the size of a tree. XGBoost and LightGBM have pruning integrated into their implementations. In its latest version, scikit-learn provides pruning as well, making it possible to control overfitting in most tree-based estimators after the trees are built.
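In scikit-learn this takes the form of cost-complexity pruning, controlled by the `ccp_alpha` parameter. The sketch below compares an unpruned and a pruned decision tree. The breast cancer dataset and the value `ccp_alpha=0.01` are assumptions for the example; in practice the value would be tuned, for instance by cross-validation.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Toy dataset, chosen only for illustration.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Unpruned tree: grown until the leaves are pure.
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Candidate alpha values at which subtrees get pruned away.
path = full_tree.cost_complexity_pruning_path(X_train, y_train)
print("Number of candidate alphas:", len(path.ccp_alphas))

# Larger ccp_alpha prunes more aggressively (0.01 is an assumed value).
pruned_tree = DecisionTreeClassifier(random_state=0, ccp_alpha=0.01)
pruned_tree.fit(X_train, y_train)

print("Nodes before pruning:", full_tree.tree_.node_count)
print("Nodes after pruning: ", pruned_tree.tree_.node_count)
print(f"Test accuracy (full):   {full_tree.score(X_test, y_test):.3f}")
print(f"Test accuracy (pruned): {pruned_tree.score(X_test, y_test):.3f}")
```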

As we have just seen, the recent release brings some significant updates. The latest functionality in scikit-learn is well worth exploring and using.