Source: zdnet.com

Who doesn’t love shiny things? Well… robots for one. The same goes for transparent objects.

At least, that’s long been the case. Machine vision has stumbled when it comes to shiny or reflective surfaces, which has limited the use cases for automation even as advances in the field push robots into more and more new spaces.

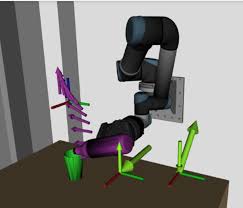

Now, researchers at robotics powerhouse Carnegie Mellon report success with a new technique to identify and grasp objects with troublesome surfaces. Rather than relying on expensive new sensor technology or intensive modeling and training via AI, the system instead goes back to basics, relying on a simple color camera.

To understand why, it helps to know how robots currently sense objects prior to grasping. Cutting-edge computer vision systems for pick-and-place applications often rely on infrared cameras, which are great for sensing and precisely measuring the depth of an object (useful data for a robot devising a grasping strategy) but fall short when it comes to visual quirks like transparency. Infrared light passes right through clear objects and is reflected and scattered by reflective surfaces.

Color cameras, however, can detect both kinds of objects. Just look at any color photo and you’ll clearly discern a glass on a table or a shiny metal railing, each with lots of rich detail. That was the vital clue. The CMU researchers built on this observation and developed a color camera system capable of recognizing shapes using color and, crucially, sensing transparent or reflective surfaces.

“We do sometimes miss,” David Held, an assistant professor in CMU’s Robotics Institute, acknowledged, “but for the most part it did a pretty good job, much better than any previous system for grasping transparent or reflective objects.”

That the solution is low-cost and the sensors are battle-tested gives it a tremendous leg up when it comes to the potential for adoption. The researchers point out that other attempts at robotic grasping of transparent objects have relied on training systems based on trial and error or on expensive human labeling of objects.

In the end, it’s not new sensors, but new strategies to use them, that may give robots the powers they need to function in everyday life.