Source – theweek.co.uk

“Success in creating effective AI could be the biggest event in the history of our civilisation. Or the worst. We just don’t know,” said Professor Stephen Hawking at this week’s Web Summit in Lisbon.

Along with many benefits, said Hawking, artificial intelligence (AI) brings many “dangers, like powerful autonomous weapons, or new ways for the few to oppress the many”.

The physicist called for new regulation to ensure humanity could prevent AI from threatening its existence.

“Perhaps we should all stop for a moment and focus not only on making our AI better and more successful, but also on the benefit of humanity,” he added.

Hawking is not the only luminary from the science and technology world to have warned about the future of AI.

We should all “be very careful about artificial intelligence”, Elon Musk said in 2014. “If I had to guess at what our biggest existential threat is, it’s probably that. With artificial intelligence, we’re summoning the demon.”

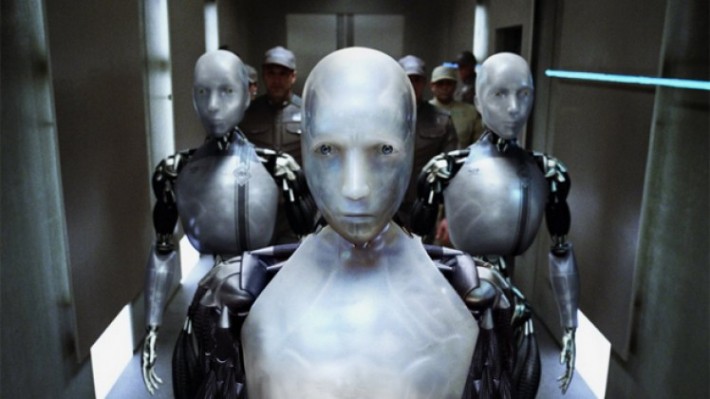

In recent months, there have been headlines about killer robots and about automation replacing human workers, but also about algorithms that help diagnose cancer, and the successful development of self-driving cars.

“Some scholars argue it is the most pressing existential risk humanity might ever face, while others mostly dismiss the hypothesised danger as unfounded doom-mongering,” says Motherboard’s Phil Torres.

So should we be worried about the spread of AI? And what can we do about it?

The reaction to AI

A YouGov survey last year of more than 2,000 people found that public attitudes towards AI vary greatly depending on its application.

Some 70% of respondents were happy for intelligent machines to carry out seemingly menial jobs such as crop monitoring – but this fell to 49% when it came to household tasks, while only 23% would be happy for robots to perform medical operations. And a mere 17% were comfortable with the idea of so-called sex robots.

One of the primary worries about AI is its impersonal nature.

In August, Tesla and SpaceX CEO Elon Musk, along with 115 other AI and robotics specialists, signed an open letter urging the UN to recognise the dangers of lethal autonomous weapons and to ban their use internationally.

According to Human Rights Watch, the US, China, Israel, South Korea, Russia and the UK “have been investing in developing weapons systems with decreasing levels of human control in the critical functions of selecting and engaging targets”.

Steven Finlay, author of Artificial Intelligence and Machine Learning for Business, believes that autonomous armed robots that can track and target people using facial recognition software are just around the corner.

AI and robotics are advancing so quickly that within years, wars could be fought with autonomous weapons and vehicles, reports The Guardian.

Regulate or exterminate?

AI regulation is one answer to the problem.

“A healthy modern democracy requires ordinary citizens to participate in public discussions about rapidly advancing technologies. We desperately need new policies, regulations, and safety nets for those displaced by machines,” says The Nation’s Katharine Dempsey.

Elon Musk agrees. Back in 2014, he said: “I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish.”

Arguably, the greatest worry is that machines may become better at making decisions than humans, enslaving humanity to automated decision-makers and whoever controls them.

AI-based systems are already replacing many jobs. For example, some AI machines can spot skin cancer as accurately as a human doctor, Wired reports.

Many of our choices are already influenced by AI, via websites such as Amazon and Facebook. Algorithms determine the content we see online, and make recommendations about everything from what we watch on TV and where we eat to who we date.

“What makes this scenario so dangerous is that it isn’t being planned by some overarching master intelligence or machine overlord,” says Steven Finlay. “We are creating the very technology that could lead to our demise.”

A change for the better?

It’s not all doom and gloom.

“I’m optimistic that we can create an inspiring future with AI if we win the race between the growing power of AI and the growing wisdom with which we manage it, but that’s going to require planning and work,” physicist Max Tegmark, author of Life 3.0: Being Human in the Age of Artificial Intelligence, told Motherboard.

While Tegmark advocates regulation, he argues that there also needs to be a focus on the upside to using AI. “If people just focus on the downsides, they get paralysed by fear and society gets polarised and fractured,” he concludes.