Source: devops.com

Even experienced DevOps teams can struggle with the nuances of managing microservices in production, where the heavier reliance on network communication introduces operational challenges beyond those common to traditional architectures. But by choosing and configuring your software-based routing components carefully, it’s possible to avoid or reduce the impact of roadblocks in the following areas.

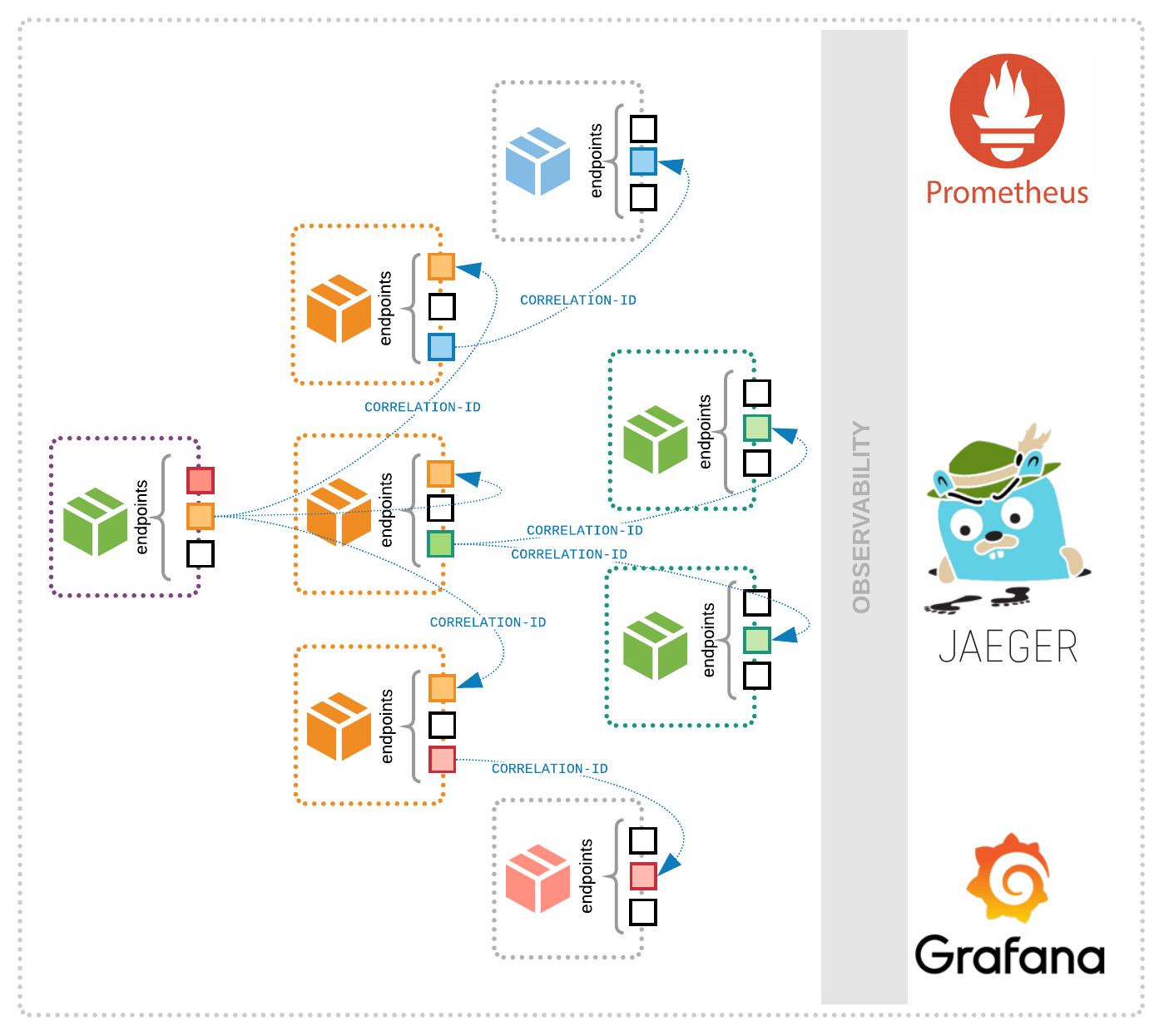

Implementing Tracing and Monitoring

Even applications that are rigorously tested during development will exhibit anomalies in production. To address these, DevOps teams require effective tracing and monitoring tools to gain visibility into what’s happening within clusters at runtime.

As a strategy, developers should select routing solutions that integrate well with their existing monitoring and tracing backends. Remember that while a microservice may be developed by one internal team, once deployed it becomes available to others. If the application is instrumented to be traceable, compatible tracing tools can dynamically identify the source of service invocations and their call flows, which helps other teams use the microservice. When the application is also designed to expose metrics, monitoring tools can track the microservice’s key resource consumption figures, assess how its subcomponents scale under load, and flag any performance limitations that require attention.

Because all inter-service communications travel through software routing elements, the routing layer is a natural place to collect tracing and monitoring data for your microservices. Teams can then put this data to work to more easily overcome challenges when building out new capabilities.
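As a rough illustration of collecting this data at the routing layer, the sketch below routes every call through a single in-process object that records a trace span and simple per-service metrics. The `TracingRouter` class, the service names and the span fields are all hypothetical and chosen only for this example; a real routing solution would export the data to a tracing or monitoring backend instead of keeping it in memory.

```python
import time
import uuid
from collections import defaultdict


class TracingRouter:
    """Toy in-process router (hypothetical) that records spans and metrics."""

    def __init__(self):
        self.services = {}                       # service name -> handler callable
        self.spans = []                          # collected trace spans
        self.request_count = defaultdict(int)    # service name -> calls seen
        self.total_latency = defaultdict(float)  # service name -> seconds spent

    def register(self, name, handler):
        self.services[name] = handler

    def call(self, caller, target, payload, trace_id=None):
        # Reuse the caller's trace ID so one request can be followed end to end.
        trace_id = trace_id or uuid.uuid4().hex
        start = time.perf_counter()
        try:
            return self.services[target](payload, trace_id)
        finally:
            elapsed = time.perf_counter() - start
            self.spans.append(
                {"trace_id": trace_id, "caller": caller,
                 "target": target, "seconds": elapsed}
            )
            self.request_count[target] += 1
            self.total_latency[target] += elapsed


router = TracingRouter()
router.register("inventory", lambda payload, trace_id: {"sku": payload, "stock": 3})
# "orders" calls "inventory" through the router and passes the trace ID along.
router.register(
    "orders",
    lambda payload, trace_id: router.call("orders", "inventory", payload, trace_id),
)

result = router.call("web", "orders", "sku-1")
```

After the call, `router.spans` holds one span per hop sharing the same trace ID, which is enough to reconstruct who invoked whom, while `request_count` and `total_latency` give a crude view of load per service.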

Mitigating Communication Failures

Considering the myriad communications taking place within production microservices-based environments, it is no surprise that this architecture is prone to communication failures in which microservices simply cannot be reached. Whether these failures result from a container or host error, a network partition, or a short-term interruption in the availability of the service itself, resilient safeguards must be in place to mitigate any impact on an application’s user base.

For example, when an instance fails, the load balancer can automatically reroute requests to healthy instances, and then return traffic to the failed instance once it detects that the instance is available again. In scenarios where an entire microservice becomes unavailable, client services should leverage circuit breaking to stop sending requests, return an error and initiate a fallback response. Doing so avoids a dangerous pile-up of retry requests, which only eat up resources and can lead to cascading failures.
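The circuit-breaking behavior described above can be sketched as follows. This is a minimal illustration rather than any particular product's implementation: the `CircuitBreaker` class, its `failure_threshold` and `reset_timeout` parameters, and the injectable `clock` are all assumptions made for the example.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker sketch (hypothetical names and defaults)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay open
        self.clock = clock                          # injectable for testing
        self.failures = 0
        self.opened_at = None                       # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast without touching the unavailable service.
                return fallback()
            # Timeout elapsed: half-open, let one trial request through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial request, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers get the fallback immediately and the failing service receives no traffic, which is what prevents retries from piling up.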

Each of these mitigation strategies could be accomplished by placing the necessary logic in each client. However, this technique would be both cumbersome to implement and prone to errors. The better approach is to leverage routing solutions to perform instance health checks and circuit breaking, allowing DevOps teams to control how their applications respond to faults at the network layer, rather than relying on logic duplicated in every client.
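To make the health-check side concrete, here is a small sketch of a round-robin balancer that skips instances failing a health probe and resumes sending them traffic once they pass again. The `HealthAwareBalancer` class and the `health_check` callback are hypothetical; a real routing solution would run the probes on a timer against an actual endpoint.

```python
class HealthAwareBalancer:
    """Round-robin balancer sketch that routes only to healthy instances."""

    def __init__(self, instances, health_check):
        self.instances = list(instances)
        self.health_check = health_check    # callable: instance -> bool
        self.healthy = set(self.instances)  # assume healthy until probed
        self._next = 0

    def run_health_checks(self):
        # Called explicitly here; a real router would schedule this periodically.
        self.healthy = {i for i in self.instances if self.health_check(i)}

    def pick(self):
        # Try each instance at most once per pick, skipping unhealthy ones.
        for _ in range(len(self.instances)):
            candidate = self.instances[self._next % len(self.instances)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances available")
```

Because the health state lives in the balancer, no client needs to know which instances are down or when they come back.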

Handling Unanticipated Load Spikes

In production environments, loads can spike unexpectedly and for any number of reasons, ranging from a sudden surge in popularity with users to malicious distributed denial-of-service (DDoS) attacks. Whatever the root cause, applications must be well-prepared to withstand these events without failing.

To ready applications to handle load spikes, rate limiting is a simple but powerful technique that allows operators to cap the rate of requests that front-end services will accept. Implementing this safeguard through a routing solution helps avoid downtime caused by surprise changes in load intensity.
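One common way to implement rate limiting is a token bucket, sketched below. The article does not say which algorithm a given routing solution uses, so treat this as one illustrative option; the `TokenBucket` class and its `rate`, `burst` and `clock` parameters are assumptions made for the example.

```python
import time


class TokenBucket:
    """Token bucket rate limiter sketch (hypothetical names)."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = rate            # tokens added per second (sustained rate)
        self.burst = burst          # bucket capacity (largest allowed burst)
        self.clock = clock          # injectable for testing
        self.tokens = float(burst)  # start with a full bucket
        self.updated = clock()

    def allow(self):
        # Refill according to elapsed time, never exceeding the capacity.
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: the router would reject or queue the request
```

A router would call `allow()` once per incoming request and reject the request (for HTTP, typically with a 429 status) when it returns `False`.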

Securing Microservices Communications and Permissions

Security is a constant concern when operating production deployments. However, microservices environments often have little or no enforcement of permissions for internal network communications. They also face the challenge of implementing proper encryption to secure sensitive data within both internal and external traffic.

While allowing any service to access any other offers flexibility, the risks are substantial. In order to safeguard microservices that access and expose sensitive data, it’s wise to limit access to only those client services with a vetted business justification. Routing technologies make it possible to enforce these secure policies using network segmentation.
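The access rule described here amounts to a default-deny allowlist. The sketch below shows only that policy check, not how a routing layer or network segmentation would enforce it; the service names and the `ALLOWED_CALLERS` table are invented for illustration.

```python
# Hypothetical policy: target service -> set of client services allowed to call it.
ALLOWED_CALLERS = {
    "payments": {"checkout"},
    "customer-records": {"checkout", "support-portal"},
}


def is_allowed(caller, target, policy=ALLOWED_CALLERS):
    """Default deny: a call passes only if the caller is listed for the target."""
    return caller in policy.get(target, set())
```

Under this table, `is_allowed("checkout", "payments")` passes, while a call from an unlisted service, or to a target with no entry at all, is refused.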

At the same time, routing solutions can help businesses encrypt critical data in transit, which is not only a best practice but, in many industries, a regulatory compliance requirement. Service meshes can provide TLS encryption for all internal east-west traffic, while edge routers can encrypt external north-south traffic using a provided certificate. Also of note for north-south traffic: combining routing technology with services such as Let’s Encrypt can automate lifecycle management of trusted certificates, largely removing the need for manual intervention.
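As a small sketch of what encrypting east-west traffic can involve, the function below uses Python's standard `ssl` module to build a server-side TLS context that also requires client certificates (mutual TLS). Requiring client certificates and the TLS 1.2 floor are assumptions made for this example, not something the article prescribes, and the function name and file parameters are hypothetical.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that demands a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed minimum version
    context.verify_mode = ssl.CERT_REQUIRED           # reject clients without a cert
    if cert_file:
        # The service's own certificate and private key.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA used to verify certificates presented by client services.
        context.load_verify_locations(cafile=ca_file)
    return context
```

A service mesh typically handles this on behalf of each service, including issuing and rotating the certificates, so application code never has to build such a context itself.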

Achieving High Availability

Leveraging software routing technology to achieve high availability can be more cost-effective and efficient than traditional hardware failover solutions, and it offers the opportunity to build resilient production microservices environments. For example, a routing implementation should use a highly available architecture that includes a horizontally scalable data plane and a separate, fault-tolerant control plane. The data plane allows instances to be added as necessary to meet capacity and resilience needs, while the control plane is built to tolerate failures so that users experience uninterrupted service.

Deploying Across Multi-Cloud and Heterogeneous Environments

Larger enterprises often utilize deployments across multiple cloud and/or on-prem environments, each of which may rely on disparate container orchestration technologies. Highly portable routing technologies capable of supporting a range of orchestration tools can vastly reduce the challenges developers face in supporting multiple environments. This allows them to deploy the same familiar solution and a common routing layer model across deployments.

Routing technology is a key factor in how easily DevOps teams can overcome challenges to the success of their production microservices deployments. By choosing a routing solution carefully, teams can better prepare for these challenges, or even avoid them altogether.