Source: zdnet.com

Models require algorithms and data, and machine learning models have especially ravenous appetites for data. Yet when transforming data into features consumed by the model, data scientists often have to reinvent the wheel, because feature generation lacks the configuration management and automation processes associated with model workflows.

Tecton, a startup that recently emerged from stealth, is introducing a cloud-based service intended to address this bottleneck. The company aims to bring to feature generation the same kind of maintainability and reusability that Informatica brought to the data warehousing world when it introduced automated data transformations at its founding.

The cofounders of the company came from Uber, where they were responsible for Project Michelangelo, the company’s machine learning platform.

At Uber, Michelangelo took care of the full lifecycle of ML, from managing data to training, evaluating, and deploying models, followed by making and monitoring the predictions. Among the lessons learned from developing the Michelangelo platform was that feature generation lacked the degree of rigorous lifecycle automation that model development had. All too often, data scientists had to keep reinventing the wheel when generating features, and the beginnings of that automation were built into the platform.

Those are the lessons that the company’s founders took into creating Tecton. It addresses part of the problem affecting data scientists: they often spend too much of their time on data engineering. A couple of years ago, in a survey jointly sponsored by Ovum (now Omdia) and Dataiku, we found that even in the best of circumstances, data scientists will still likely spend up to half their time wrestling with data.

In a company blog post, Tecton lays out the problem in exhaustive detail. Many cloud AutoML services can automate aspects of algorithm development and feature selection, along with the workflow of moving models from training to deployment and production, but they lack comparable automation for the feature engineering that feeds the model with data.

Tecton applies to feature development the same DevOps automation that is the norm for coding the logic of models. All of the artifacts relevant to building a feature (e.g., data sources, transformations, and a recipe for processing) are stored and versioned in a Git repository, just as modeling logic is.

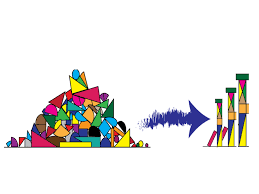

The result is that the legwork involved in creating and deploying the data pipelines used for generating features is automated. This doesn’t mean that data scientists no longer need data engineers, but they can become less reliant on them. Data engineers are still needed at the outset of building a feature to connect to data sources. From there, data scientists can work in their Python notebooks to design the feature transformation logic. The next step is specifying configuration information (e.g., data sources, frequency of transformations, and whether to serve the feature online or offline in batch). All these artifacts are stored in a Git repository where they can be versioned, shared, and reused.
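To make the split between transformation logic and configuration concrete, here is a minimal Python sketch of what a declarative feature definition might look like. It is not Tecton's API: the `FeatureDefinition` class, its fields, and the `avg_trip_fare_7d` example are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Callable, Dict, List

Row = Dict[str, float]


@dataclass(frozen=True)
class FeatureDefinition:
    """Hypothetical declarative description of one feature (not Tecton's API)."""
    name: str
    version: int
    sources: List[str]                        # data sources wired up by data engineers
    transform: Callable[[List[Row]], float]   # logic written by the data scientist
    schedule: timedelta                       # how often the transformation runs
    serve_online: bool                        # True: online serving; False: offline batch only


def average_trip_fare(rows: List[Row]) -> float:
    """Example transformation: mean fare over the supplied rows (0.0 if empty)."""
    if not rows:
        return 0.0
    return sum(r["fare"] for r in rows) / len(rows)


# The definition bundles logic and configuration into one versionable artifact.
avg_fare_7d = FeatureDefinition(
    name="avg_trip_fare_7d",
    version=1,
    sources=["trips_table"],
    transform=average_trip_fare,
    schedule=timedelta(days=1),
    serve_online=True,
)

sample_rows = [{"fare": 10.0}, {"fare": 20.0}, {"fare": 30.0}]
feature_value = avg_fare_7d.transform(sample_rows)
```

Because the definition is plain code, a file like this could be committed to Git alongside the model code, which is the property the article attributes to Tecton's approach.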

The operative notion is that data scientists should not get mired in the business of designing, deploying, and running data pipelines, and they shouldn’t need to bother data engineers each time they have to design new features or run another job. Once the feature is developed, Tecton automates the operation of the pipeline, triggering the appropriate execution engine, such as Spark for batch, or Flink or Kafka Streams for streaming. This addresses one part of the model deployment bottleneck, as data scientists don’t have to worry about wiring up the right execution engines.
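The engine selection described above can be pictured as a simple dispatch on processing mode. The sketch below is an assumption about how such routing could work, not a description of Tecton's internals; the engine names come from the article, while `Mode`, `ENGINES`, and `choose_engine` are hypothetical.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    BATCH = "batch"
    STREAMING = "streaming"


# Engine names are taken from the article; the mapping itself is an assumption.
ENGINES = {
    Mode.BATCH: ["spark"],
    Mode.STREAMING: ["flink", "kafka-streams"],
}


def choose_engine(mode: Mode, preferred: Optional[str] = None) -> str:
    """Pick an execution engine for a pipeline, so the caller never wires one up by hand."""
    candidates = ENGINES[mode]
    if preferred is None:
        return candidates[0]  # default to the first engine listed for this mode
    if preferred not in candidates:
        raise ValueError(f"{preferred!r} cannot run {mode.value} pipelines")
    return preferred


batch_engine = choose_engine(Mode.BATCH)
stream_engine = choose_engine(Mode.STREAMING, preferred="kafka-streams")
```

The point of the design is that the data scientist declares only whether a feature is batch or streaming; which engine runs it is decided by the platform.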

To track lifecycle and support reusability, Tecton retains data lineage and makes the metadata searchable in a “feature store” catalog. The same pipelines can be used for both training and live production data, and because these pipelines are versioned, data scientists can readily iterate on them on a self-service basis when modifying or generating new features.
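A searchable, versioned catalog of this kind can be approximated in a few lines of Python. This is an illustrative in-memory sketch only: `FeatureRecord`, `FeatureCatalog`, and the sample features are hypothetical, and a real feature store would persist this metadata rather than hold it in a dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FeatureRecord:
    """Hypothetical catalog entry: one version of one feature plus its lineage."""
    name: str
    version: int
    description: str
    upstream_sources: Tuple[str, ...]  # lineage: where the feature's data comes from


class FeatureCatalog:
    """In-memory stand-in for a searchable feature catalog."""

    def __init__(self) -> None:
        self._records: Dict[Tuple[str, int], FeatureRecord] = {}

    def register(self, record: FeatureRecord) -> None:
        key = (record.name, record.version)
        if key in self._records:
            raise ValueError(f"{record.name} v{record.version} is already registered")
        self._records[key] = record

    def latest(self, name: str) -> FeatureRecord:
        versions = [r for r in self._records.values() if r.name == name]
        if not versions:
            raise KeyError(name)
        return max(versions, key=lambda r: r.version)

    def search(self, term: str) -> List[FeatureRecord]:
        """Case-insensitive match on name or description, sorted by name then version."""
        term = term.lower()
        hits = [
            r for r in self._records.values()
            if term in r.name.lower() or term in r.description.lower()
        ]
        return sorted(hits, key=lambda r: (r.name, r.version))


catalog = FeatureCatalog()
catalog.register(FeatureRecord("avg_trip_fare_7d", 1, "Mean fare, 7-day window", ("trips_table",)))
catalog.register(FeatureRecord("avg_trip_fare_7d", 2, "Mean fare, 7-day window, tips excluded", ("trips_table",)))
catalog.register(FeatureRecord("driver_rating_30d", 1, "Mean driver rating, 30-day window", ("ratings_stream",)))

fare_features = catalog.search("fare")
newest_fare = catalog.latest("avg_trip_fare_7d")
```

Keeping every version addressable by name and number is what would let the same feature definition be resolved identically at training time and at serving time.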

Having emerged from stealth within the past couple of months, Tecton’s offering is currently running in private preview on AWS. As noted above, it manages and automates feature pipelines. It also includes a feature store for storing historical feature and label data, an SDK for retrieving training data, and a web-based UI for tracking features and monitoring data quality and data drift issues. Once the AWS offering reaches general release, Tecton will follow with other clouds. To us, this would make a useful complement to cloud AutoML services, helping them automate the missing mile of data.