Source – techradar.com

“Artificial Intelligence” is currently the hottest buzzword in tech. And with good reason: after decades of research and development, the last few years have seen a number of techniques that were once the preserve of science fiction slowly transform into science fact.

Already AI techniques are a deep part of our lives: AI determines our search results, translates our voices into meaningful instructions for computers and can even help sort our cucumbers (more on that later). In the next few years we’ll be using AI to drive our cars, answer our customer service enquiries and, well, countless other things.

But how did we get here? Where did this powerful new technology come from? Here are ten of the big milestones that led us to these exciting times.

Getting the ‘Big Idea’

The concept of AI didn’t suddenly appear. It is the subject of a deep, philosophical debate which still rages today: Can a machine truly think like a human? Can a machine be human? One of the first people to think about this was René Descartes, way back in 1637, in a book called Discourse on the Method. Amazingly, given that at the time even an Amstrad Em@iler would have seemed impossibly futuristic, Descartes actually summed up some of the crucial questions and challenges technologists would have to overcome:

“If there were machines which bore a resemblance to our bodies and imitated our actions as closely as possible for all practical purposes, we should still have two very certain means of recognizing that they were not real men.”

He goes on to explain that in his view, machines could never use words or “put together signs” to “declare our thoughts to others”, and that even if we could conceive of such a machine, “it is not conceivable that such a machine should produce different arrangements of words so as to give an appropriately meaningful answer to whatever is said in its presence, as the dullest of men can do.”

He then describes the big challenge of today, which is creating a generalised AI rather than something narrowly focused, and explains how the limitations of such a machine would expose it as definitely not human:

“Even though some machines might do some things as well as we do them, or perhaps even better, they would inevitably fail in others, which would reveal that they are acting not from understanding, but only from the disposition of their organs.”

So, thanks to Descartes, the challenge for AI was set.

The Imitation Game

The second major philosophical benchmark came courtesy of computer science pioneer Alan Turing. In 1950 he first described what became known as The Turing Test, and what he referred to as “The Imitation Game” – a test for measuring when we can finally declare that machines can be intelligent.

His test was simple: a judge holds a conversation with both a human and a machine (say, through a text-only interaction). If the judge cannot reliably tell which is which, the machine passes. In other words, can the machine trick the judge into thinking that it is the human?

At the time, Turing made a bold prediction about the future of computing: he reckoned that by the end of the 20th century, his test would have been passed. He said:

“I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about 10⁹ [binary digits, roughly 125MB], to make them play the imitation game so well that an average interrogator will not have more than 70 percent chance of making the right identification after five minutes of questioning. … I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

Sadly, his prediction proved a little premature: while we’re starting to see some truly impressive AI now, back in 2000 the technology was much more primitive. But hey, at least he would have been impressed by hard disc capacity, which averaged around 10GB at the turn of the century.

The first Neural Network

A “neural network” is a web of simple, interconnected artificial neurons that learns by what amounts to trial and error, the key concept underpinning modern AI. Essentially, when it comes to training an AI, the best way to do it is to have the system guess, receive feedback, and guess again, constantly shifting the probabilities that it will get to the right answer.
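To make that guess, feedback, adjust loop concrete, here is a minimal Python sketch of a single artificial neuron, a perceptron (a design from later in the 1950s than the machine described below), learning the logical AND function. The function names and toy data are illustrative assumptions, and modern networks stack many such units and use gradient-based training rather than this simple update rule.

```python
# Toy training data for logical AND: ((input1, input2), correct answer).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, x1, x2):
    """The neuron 'fires' (outputs 1) if the weighted sum of its inputs is positive."""
    w1, w2, bias = weights
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0

def train_perceptron(samples, epochs=20):
    weights = (0, 0, 0)  # start knowing nothing
    for _ in range(epochs):
        for (x1, x2), target in samples:
            guess = predict(weights, x1, x2)  # 1. make a guess
            error = target - guess            # 2. feedback: -1, 0 or +1
            w1, w2, bias = weights
            # 3. nudge each weight in the direction that would reduce the error
            weights = (w1 + error * x1, w2 + error * x2, bias + error)
    return weights

weights = train_perceptron(AND_SAMPLES)
print([predict(weights, x1, x2) for (x1, x2), _ in AND_SAMPLES])  # prints [0, 0, 0, 1]
```

Integer weights and a step size of 1 keep the arithmetic exact. A single neuron like this cannot learn a function such as XOR, which is one reason later networks added more layers.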

What’s quite amazing is that the first neural network was actually created way back in 1951. Called “SNARC” (the Stochastic Neural Analog Reinforcement Calculator), it was created by Marvin Minsky and Dean Edmonds and was made not of microchips and transistors, but of vacuum tubes, motors and clutches.

The challenge for this machine? Helping a virtual rat solve a maze puzzle. The system would send instructions to navigate the maze and each time the effects of its actions would be fed back into the system – the vacuum tubes being used to store the outcomes. This meant that the machine was able to learn and shift the probabilities – leading to a greater chance of making it through the maze.

It’s essentially a very, very simple version of the same process Google uses to identify objects in photos today.
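SNARC’s circuitry is well beyond a snippet, but its reinforcement idea can be sketched in a few lines of Python. In this toy version (an illustrative assumption, not a model of Minsky and Edmonds’ hardware), the rat picks left or right at each junction with a probability proportional to a weight, and every turn taken on a run that reaches the exit has its weight increased, so successful choices become steadily more likely.

```python
import random

# Hypothetical toy maze: the correct turn ("L" or "R") at each of three junctions.
CORRECT_TURNS = ["L", "R", "L"]

def run_trials(trials=500, seed=0):
    rng = random.Random(seed)  # seeded so the run is repeatable
    # One pair of weights per junction; turn probability is proportional to weight.
    weights = [{"L": 1, "R": 1} for _ in CORRECT_TURNS]
    successes = 0
    for _ in range(trials):
        chosen = []
        for junction, correct in zip(weights, CORRECT_TURNS):
            p_left = junction["L"] / (junction["L"] + junction["R"])
            turn = "L" if rng.random() < p_left else "R"
            chosen.append(turn)
            if turn != correct:
                break  # dead end: this run fails and nothing is reinforced
        else:
            # Reached the exit: reinforce every turn taken on this run.
            successes += 1
            for junction, turn in zip(weights, chosen):
                junction[turn] += 1
    return weights, successes

weights, successes = run_trials()
for i, (junction, correct) in enumerate(zip(weights, CORRECT_TURNS)):
    p = junction[correct] / (junction["L"] + junction["R"])
    print(f"junction {i}: P(correct turn) = {p:.2f}")
```

Because a wrong turn ends a run before any reward is handed out, wrong turns are never reinforced, and the probability of each correct turn climbs towards 1 as successful runs accumulate.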

The first self-driving car

When we think of self-driving cars, we think of something like Google’s Waymo project – but amazingly way back in 1995, Mercedes-Benz managed to drive a modified S-Class mostly autonomously all the way from Munich to Copenhagen.

According to AutoEvolution, the 1,043-mile journey was made possible by stuffing what was effectively a supercomputer into the boot. The car contained 60 transputer chips, then state of the art for parallel computing, which meant it could process a lot of driving data quickly: a crucial part of making a self-driving car responsive enough.

Apparently the car reached speeds of up to 115mph, and was actually fairly similar to autonomous cars of today, as it was able to overtake and read road signs. But if we were offered a trip? Umm… We insist you go first.

Switching to statistics

Though neural networks had existed as a concept for some time (see above!), it wasn’t until the late 1980s that there was a big shift amongst AI researchers from a “rules based” approach to one based instead on statistics, or machine learning. Rather than trying to build systems that imitate intelligence by attempting to divine the rules by which humans operate, researchers found that taking a trial-and-error approach and adjusting the probabilities based on feedback is a much better way to teach machines to think. This is a big deal, as it is this concept that underpins the amazing things that AI can do today.

Gil Press at Forbes argues that this switch was heralded in 1988, when IBM’s TJ Watson Research Center published a paper called “A statistical approach to language translation”, which describes using machine learning to do exactly what Google Translate does today.

IBM apparently fed 2.2 million pairs of French and English sentences into its system to train it. The sentences were all taken from transcripts of the Canadian Parliament, which publishes its records in both languages. That sounds like a lot, but it is nothing compared to Google having the entire internet at its disposal, which explains why Google Translate is so creepily good today.
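The statistical idea can be illustrated with a simplified version of the word-translation model the same IBM group published a few years later, usually called IBM Model 1. This Python sketch (the toy corpus and function names are assumptions for illustration) uses expectation-maximisation: each French word’s “credit” is shared among the English words in its sentence according to the current probabilities, and the probabilities are then re-estimated from that credit. Nobody tells it which words correspond; the pattern emerges purely from which words keep turning up together.

```python
from collections import defaultdict

# Hypothetical toy corpus of (French, English) sentence pairs.
PAIRS = [
    ("la maison", "the house"),
    ("la fleur", "the flower"),
    ("une maison", "a house"),
]

def train_word_translation(pairs, iterations=20):
    """Estimate t[(f, e)] = P(French word f | English word e) with EM."""
    pairs = [(f.split(), e.split()) for f, e in pairs]
    french_vocab = {w for f_sent, _ in pairs for w in f_sent}
    # Start with every French word equally likely for every English word.
    t = defaultdict(lambda: 1.0 / len(french_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected co-occurrence counts
        total = defaultdict(float)  # expected counts per English word
        for f_sent, e_sent in pairs:
            for f in f_sent:
                # E-step: share this French word among the English words
                # in proportion to the current translation probabilities.
                norm = sum(t[(f, e)] for e in e_sent)
                for e in e_sent:
                    share = t[(f, e)] / norm
                    count[(f, e)] += share
                    total[e] += share
        # M-step: re-estimate probabilities from the expected counts.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t

def best_french_word(t, english_word):
    candidates = {f: p for (f, e), p in t.items() if e == english_word}
    return max(candidates, key=candidates.get)

t = train_word_translation(PAIRS)
for english in ["the", "house", "flower", "a"]:
    print(english, "->", best_french_word(t, english))
```

On this three-sentence corpus it should end up pairing “the” with “la”, “house” with “maison”, “flower” with “fleur” and “a” with “une”, simply because each pairing explains the data best.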

Deep Blue beats Garry Kasparov

Despite the shift in focus to statistical models, rules-based models were still in use, and in 1997 IBM were responsible for perhaps the most famous chess match of all time, as its Deep Blue computer bested world chess champion Garry Kasparov, demonstrating how powerful machines can be.

The bout was actually a rematch: in 1996 Kasparov bested Deep Blue 4-2. It was only in 1997 that the machine got the upper hand, winning two of the six games outright and fighting Kasparov to a draw in three more.

Deep Blue’s intelligence was, to a certain extent, illusory: IBM itself reckons that its machine is not using Artificial Intelligence. Instead, Deep Blue uses a combination of brute-force processing, evaluating millions of possible positions every second, and stored knowledge. IBM fed the system with data on thousands of earlier games, so each time the board changed with a move, Deep Blue wasn’t learning anything new; instead it was looking up how previous grandmasters reacted in the same situations. “He’s playing the ghosts of grandmasters past,” as IBM puts it.
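Deep Blue’s search ran on custom chess hardware with alpha-beta pruning and a sophisticated evaluation function, but the core brute-force idea (try every move, then every reply, and assume both sides play their best) can be shown with plain minimax on a much smaller game. The game here, in which players alternately take one to three stones and whoever takes the last stone wins, and the function names are illustrative assumptions.

```python
from functools import lru_cache

# Hypothetical toy game: players alternately take 1-3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)

@lru_cache(maxsize=None)  # cache positions already analysed to keep the search fast
def best_score(stones):
    """Score from the point of view of the player to move: +1 = win, -1 = loss."""
    if stones == 0:
        return -1  # the opponent just took the last stone, so the player to move has lost
    # Try every legal move; the opponent's best score is our worst (negamax form of minimax).
    return max(-best_score(stones - m) for m in MOVES if m <= stones)

def best_move(stones):
    """Pick the move that leaves the opponent in the worst position."""
    return max((m for m in MOVES if m <= stones), key=lambda m: -best_score(stones - m))

print(best_move(10))  # prints 2
```

Searching exhaustively, it discovers without being told that leaving a multiple of four stones wins. Chess is far too large to search to the end like this, which is why Deep Blue had to stop at a limited depth and score the positions it reached instead.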

Whether or not this really counts as AI, it was clearly a significant milestone, and one that drew a lot of attention not just to the computational abilities of computers, but also to the field as a whole. Since the face-off with Kasparov, besting human players at games has become a major, popular way of benchmarking machine intelligence, as we saw again in 2011 when IBM’s Watson system handily trounced two of the game show Jeopardy’s best players.

Siri nails language

Natural language processing has long been a holy grail of artificial intelligence – and crucial if we’re ever going to have a world where humanoid robots exist, or where we can bark orders at our devices like in Star Trek.

And this is why Siri, which was built using the aforementioned statistical methods, was so impressive. Created by SRI International and initially launched as a separate app on the iOS App Store, it was quickly acquired by Apple and deeply integrated into iOS. Today it is one of the most high-profile fruits of machine learning: along with equivalent products from Google (the Assistant), Microsoft (Cortana) and, of course, Amazon’s Alexa, it has changed the way we interact with our devices in a way that would have seemed impossible just a few years earlier.

Today we take it for granted, but you only have to ask anyone who tried to use a voice-to-text application before 2010 to appreciate just how far we’ve come.

The ImageNet Challenge

Like voice recognition, image recognition is another major challenge that AI is helping to beat. In 2015, researchers concluded for the first time that machines (in this case, two competing systems from Google and Microsoft) were better than humans at identifying objects in images, across the challenge’s 1,000 categories.

These “deep learning” systems were successful in beating the ImageNet Challenge (think something like the Turing Test, but for image recognition), and systems like them will be fundamental if image recognition is ever to scale beyond human abilities.

Applications for image recognition are, of course, numerous, but one fun example that Google likes to boast about when promoting its TensorFlow machine learning platform is sorting cucumbers: with computer vision, a farmer doesn’t need to employ humans to decide whether vegetables are ready for the dinner table, because the machines can decide automatically, having been trained on earlier data.

GPUs make AI economical

One of the big reasons AI is now such a big deal is that only over the last few years has the cost of crunching so much data become affordable.

According to Fortune, it was only in the late 2000s that researchers realised that graphics processing units (GPUs), which had been developed for 3D graphics and games, were 20-50 times better at deep learning computation than traditional CPUs. Once people realised this, the amount of available computing power vastly increased, enabling the cloud AI platforms that power countless AI applications today.

So thanks, gamers. Your parents and spouses might not appreciate you spending so much time playing videogames – but AI researchers sure do.

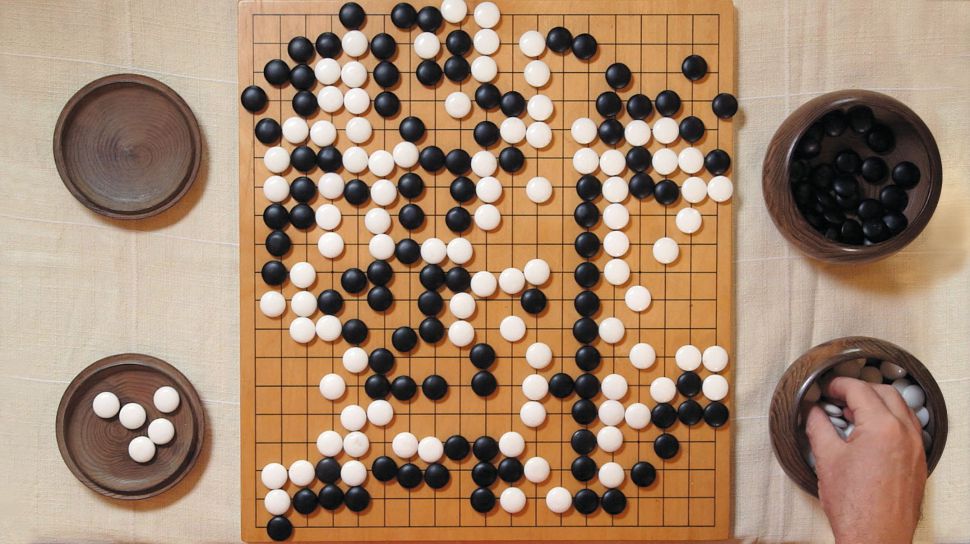

AlphaGo and AlphaGo Zero conquer all

In March 2016, another AI milestone was reached as Google’s AlphaGo software was able to best Lee Sedol, a top-ranked player of the board game Go, in an echo of Garry Kasparov’s historic match.

What made it significant was not just that Go is an even more mathematically complex game than chess, but that AlphaGo was trained using a combination of records of human games and games played against itself. AlphaGo won four of the five games, reportedly using 1,920 CPUs and 280 GPUs.

Perhaps even more significant is news from last year, when a later version of the software, AlphaGo Zero, was unveiled. Instead of learning from previous data, as AlphaGo and Deep Blue had, it simply played millions of matches against itself, and after three days of training was able to beat the version of AlphaGo that beat Lee Sedol by 100 games to nil. Who needs to teach a machine to be smart, when a machine can teach itself?