Source – bloomberg.com

Imagine that tomorrow, some smart kid invented a technology that let people or physical goods pass through walls, and posted instructions for how to build it cheaply from common household materials. How would the world change?

Lots of industries would probably become more productive. Being able to walk through walls instead of being forced to use doors would make it easier to navigate offices, move goods in and out of warehouses and accomplish any number of mundane tasks. That would give the economy a boost. But the negatives might well outweigh the positives. Keeping valuables under lock and key would no longer work. Anyone could break into any warehouse, bank vault or house with relative ease. Most of the methods we use to keep private property secure rely on walls in some way, and those methods would instantly become ineffective. Thieves and home invaders would run rampant until society could implement alternative ways of keeping out intruders. The result might be an economic crash and social chaos.

This demonstrates a general principle: technological innovations are not always good for humanity, at least in the short term. Technology can create negative externalities, an economics term for harm caused to third parties. When those externalities outweigh the usefulness of the technology itself, invention actually makes the world worse instead of better, at least for a while.

Machine learning, especially a variety known as deep learning, is arguably the hottest new technology on the planet. It gives computers the ability to do many tasks that only humans were once able to perform: recognizing images, driving cars, picking stocks and lots more. That has made some people worry that machine learning will make humans obsolete in the workplace. That's possible, but there's a potentially bigger danger from machine learning that so far isn't getting the attention it deserves. When machines can learn, they can be taught to lie.

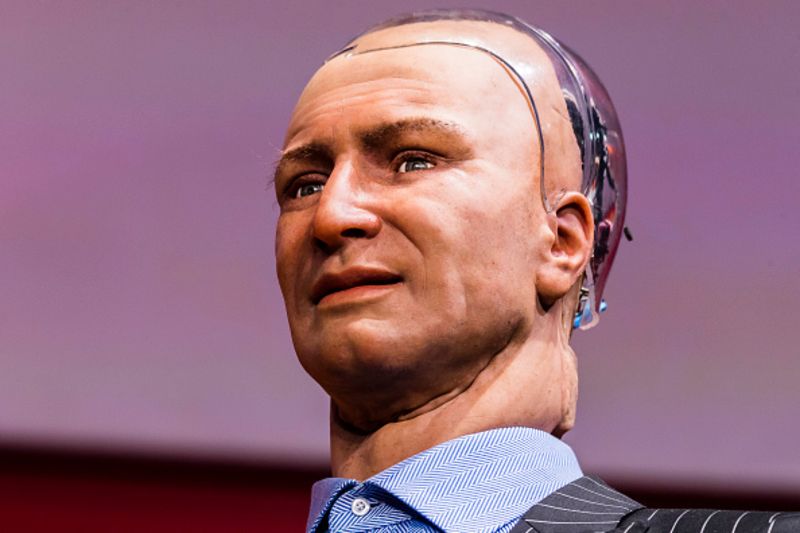

Human beings can doctor images such as photographs, but it's laborious and difficult. And convincingly faking voices and video is largely beyond our capability. But soon, thanks to machine learning, it will probably be possible to quickly and easily create realistic forgeries of someone's face and make it seem as if they are speaking in their own voice. Already, lip-synching technology can literally put words in a person's mouth. This is just the tip of the iceberg. Soon, 12-year-olds in their bedrooms will be able to create photorealistic, perfect-sounding fakes of politicians, business leaders, relatives and friends saying anything imaginable.

This lends itself to some pretty obvious abuses. Political hoaxes (so-called "fake news") will spread like wildfire. The hoaxes will be discovered in short order (no digital technology is so good that other digital technology can't detect the phony), but not before they put poisonous ideas into the minds of people primed to believe them. Imagine perfect-looking fake video of presidential candidates spouting racial slurs, or admitting to criminal acts.

But that’s just the beginning. Imagine the potential for stock manipulation. Suppose someone releases a sham video of Tesla Inc. Chief Executive Officer Elon Musk admitting in private that Tesla’s cars are unsafe. The video would be passed around the internet, and Tesla stock would crash. The stock would recover a short while later, once the forgery was revealed — but not before the manipulators had made their profits by short-selling Tesla shares.

This is far from the most extreme scenario. Imagine a prankster creating a realistic fake video of President Donald Trump declaring that an attack on North Korean nuclear facilities was imminent, then putting the video where the North Koreans can see it. What are the chances that North Korea’s leadership would realize that it was a fraud before they were forced to decide whether to start a war?

Those who view these extreme scenarios as alarmist will rightly point out that no fake will ever be undetectable. The same machine learning technology that creates forgeries will be used to detect them. But that doesn't mean we're safe from the brave new world of ubiquitous fakes. Once forgeries get so good that humans can't detect them, our trust in the veracity of our eyes and ears will forever vanish. Instead of trusting our own perceptions, we will be forced to place our trust in the algorithms used for fraud detection and verification. We evolved to trust our senses; shifting that trust to machine intelligence will be a big jump for most people.

That could be bad news for the economy. Webs of trade and commerce rely on trust and communication. If machine learning releases an infinite blizzard of illusions into the public sphere — if the walls that evolution built to separate reality from fantasy break down — aggregate social trust could decrease, hurting global prosperity in the process.

For this reason, the government should probably take steps to penalize digital forgery pretty harshly. Unfortunately, the current administration seems unlikely to do so, thanks to its love of partisan news. And governments like Russia's seem even less likely to curb the practice. Ultimately, the combination of bad government with powerful new technology represents a much bigger danger to human society than technology alone.