Source: allaboutcircuits.com

Machine learning’s ability to perform intellectually demanding tasks across various fields, materials science included, has drawn considerable attention. Many believe it could unlock major time and cost savings in the development of new materials.

Growing demand for machine learning as a way to derive fast-to-evaluate surrogate models of material properties has prompted scientists at the National Institute for Materials Science in Tsukuba, Japan, to demonstrate, in recently published research, that it could be the key driver of the “next frontier” of materials science.

Insufficient Materials Data

To learn, machines rely on processing data using both supervised and unsupervised learning.

With no data, however, there is nothing to learn from.

Unfortunately, advances in machine learning and its potential applications in materials science are not being fully exploited because materials data is lacking in both volume and diversity. This, the Japanese researchers believe, is greatly stifling progress.

However, a machine learning framework known as “transfer learning” is said to have great potential to overcome the problem of a relatively small data supply. The framework relies on the idea that various property types (for example, physical, electrical, chemical, and mechanical) are physically interrelated.

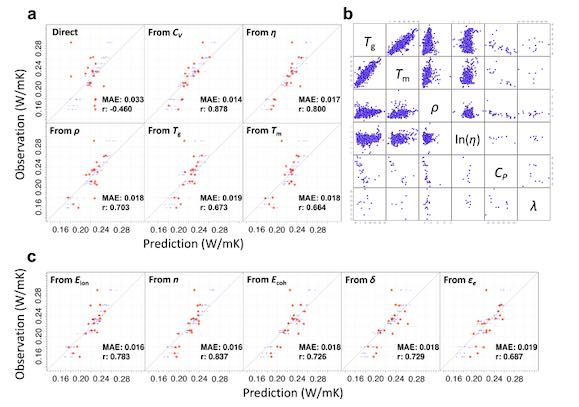

To successfully predict a target property from a limited supply of training data, the researchers used models of related proxy properties that had been pre-trained on sufficient data. These models capture common features that are relevant to the target task.

This repurposing of machine-acquired features on the target task has demonstrated high prediction performance even when the data sets are very small.
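The idea of reusing features from a proxy-property model can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the data is synthetic, the network is a tiny one-hidden-layer model written in NumPy, and names such as `make_data`, `hidden`, and `fit_ridge` are hypothetical. It does not use XenonPy.MDL and is not the researchers' actual method; it only shows the general "frozen feature extractor" pattern, in which a model is pre-trained on plentiful proxy data and its hidden layer is then reused as input to a small regression fitted on scarce target data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (hypothetical, not real materials data).
# Both properties depend on the same hidden nonlinear features of the
# descriptors, mimicking "physically interrelated" properties.
n_desc, n_latent = 8, 4
W_true = rng.normal(size=(n_desc, n_latent))
a_proxy = rng.normal(size=n_latent)
b_target = rng.normal(size=n_latent)

def make_data(n, coef):
    X = rng.normal(size=(n, n_desc))
    return X, np.tanh(X @ W_true) @ coef

X_proxy, y_proxy = make_data(2000, a_proxy)  # plentiful proxy-property data
X_small, y_small = make_data(20, b_target)   # scarce target-property data
X_test, y_test = make_data(200, b_target)    # held-out target data

# Step 1: pre-train a one-hidden-layer network on the proxy property
# with plain full-batch gradient descent.
n_hidden, lr, n_steps = 16, 0.02, 1000
W1 = 0.1 * rng.normal(size=(n_desc, n_hidden))
b1 = np.zeros(n_hidden)
w2 = 0.1 * rng.normal(size=n_hidden)
b2 = 0.0

def hidden(X):
    """Hidden-layer activations: the features that get transferred."""
    return np.tanh(X @ W1 + b1)

def proxy_loss():
    return float(np.mean((hidden(X_proxy) @ w2 + b2 - y_proxy) ** 2))

loss_before = proxy_loss()
for _ in range(n_steps):
    H = hidden(X_proxy)
    err = H @ w2 + b2 - y_proxy              # residuals, shape (n,)
    dH = np.outer(err, w2) * (1.0 - H ** 2)  # backprop through tanh
    w2 -= lr * (H.T @ err) / len(err)
    b2 -= lr * err.mean()
    W1 -= lr * (X_proxy.T @ dH) / len(err)
    b1 -= lr * dH.mean(axis=0)
loss_after = proxy_loss()

# Step 2: freeze the hidden layer and fit only a small ridge regression
# on top of its features, using the 20 target samples.
def fit_ridge(F, y, alpha=1e-2):
    F1 = np.hstack([F, np.ones((len(F), 1))])  # append intercept column
    A = F1.T @ F1 + alpha * np.eye(F1.shape[1])
    return np.linalg.solve(A, F1.T @ y)

def predict_ridge(F, coef):
    return np.hstack([F, np.ones((len(F), 1))]) @ coef

coef = fit_ridge(hidden(X_small), y_small)
pred = predict_ridge(hidden(X_test), coef)
rmse = float(np.sqrt(np.mean((pred - y_test) ** 2)))

print(f"proxy MSE: {loss_before:.3f} -> {loss_after:.3f}")
print(f"target RMSE with transferred features: {rmse:.3f}")
```

Only the final regression weights are fitted on the small target set; the hidden layer learned from the proxy data is left untouched. How well this works in practice depends on how closely the proxy and target properties are related, so the printed numbers from this toy setup should not be read as evidence about real materials data.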

Facilitating the Widespread Use of Transfer Learning

To facilitate the widespread use and boost the power of transfer learning, the Japanese researchers created their own pre-trained model library, XenonPy.MDL.

In its first release, the library comprises more than 140,000 pre-trained models for various properties of small molecules, polymers, and inorganic crystalline materials. Along with these models, the researchers provide literature that describes some of their most outstanding successes in applying transfer learning in varying scenarios. Examples such as models built with the framework from only dozens of pieces of materials data underline how effective transfer learning can be.

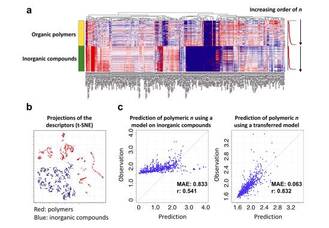

The researchers also highlight their finding that transfer learning carries across different disciplines of materials science, revealing underlying bridges between, for example, organic and inorganic chemistry.

Indispensable to Machine Learning-Centric Workflows

Although transfer learning is widely used across various fields of machine learning, it remains underused in materials science.

Furthermore, the researchers say that the limited availability of openly accessible big data will likely continue in the near future. They attribute this to a distinct lack of incentives for data sharing, a problem partly caused by the conflicting goals of stakeholders across academia, industry, and public and government organizations. This means that the relevance of transfer learning will only grow and contribute further to the success of machine learning-centric workflows in materials science.