Source – https://www.dailyuw.com/

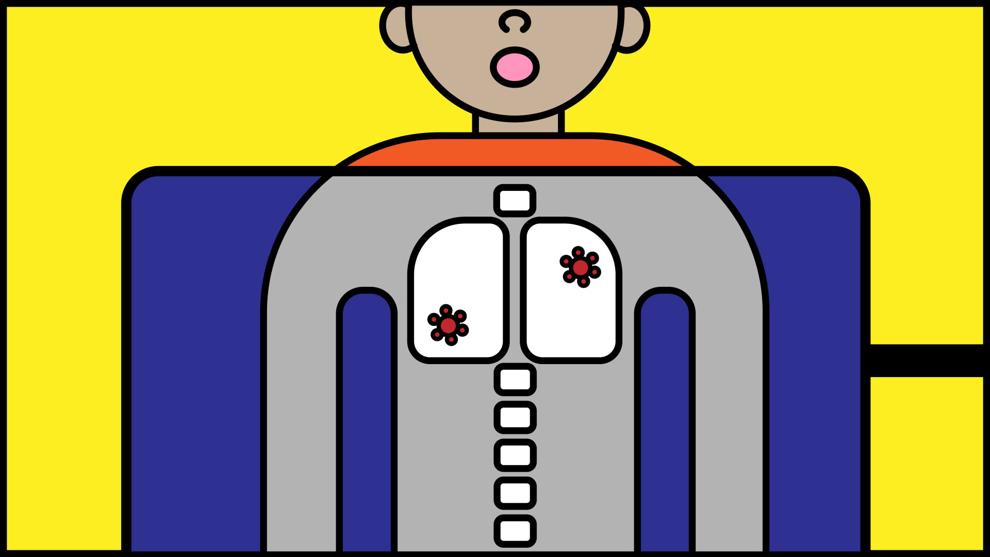

A UW Allen School team recently published an article in Nature Machine Intelligence finding that models that predict COVID-19 diagnoses from chest X-rays rely on shortcuts.

Several research groups have developed artificial intelligence (AI) models to diagnose COVID-19 based on chest radiography, with the intention of increasing COVID-19 testing accessibility.

When UW M.D. and Ph.D. students Alex DeGrave and Joseph Janizek heard about this, they immediately thought something in the models might be amiss.

Janizek said they had been studying AI models that predict pneumonia from chest X-rays and found that many of the models were using shortcuts, or aspects of images unrelated to the actual disease, to make their predictions. DeGrave and Janizek thought the COVID-19 diagnosis models might be doing the same thing.

“We figured that the combination of the high profile of these new studies coming out, and the likelihood of the data being sort of problematic, made it a really good place to kind of apply what we’ve been looking at,” Janizek said.

Shortcut learning occurs when AI learns to associate things from the training data that are not meaningfully associated in real life. Janizek and DeGrave found the COVID-19 prediction AI models associated having labels on the bottom of the X-ray image with a COVID-19 diagnosis.

DeGrave, Janizek, and their Ph.D. advisor and associate professor Su-In Lee created and trained deep convolutional neural network AI models to replicate what had been done in published studies. The team found the AI performed well on data from the same hospital system as the training data, but when given data from a different hospital system, its accuracy was reduced by half.

This trend was something they had noticed in pneumonia models as well, which suggested AI models might be using shortcuts to make diagnosis predictions, Janizek said.
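The gap between same-hospital and different-hospital performance can be illustrated with a toy sketch. This is not the team's code or data: the two "hospitals," the `marker` feature (standing in for a text label on the image), the `opacity` feature (standing in for a genuine but noisy disease signal), and the one-feature classifier are all hypothetical, chosen only to show how a shortcut can look perfect internally and fail externally.

```python
import random

def make_scans(n, marker_follows_label, rng):
    """Return ((marker, opacity), label) pairs for one hypothetical hospital.

    marker  : 0/1 flag standing in for a text label burned into the image
    opacity : noisy value standing in for a genuine disease signal
    """
    scans = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # In the training hospital the marker always matches the diagnosis;
        # elsewhere it is unrelated to the diagnosis.
        marker = label if marker_follows_label else rng.randint(0, 1)
        opacity = label + rng.gauss(0.0, 1.0)
        scans.append(((marker, opacity), label))
    return scans

def accuracy(scans, feature, threshold=0.5):
    """Fraction of scans where (feature > threshold) matches the label."""
    hits = sum((x[feature] > threshold) == bool(y) for x, y in scans)
    return hits / len(scans)

def fit_stump(scans):
    """Pick whichever single feature gives the best training accuracy."""
    return max((0, 1), key=lambda f: accuracy(scans, f))

rng = random.Random(0)  # fixed seed so the sketch is repeatable
train = make_scans(2000, True, rng)
internal = make_scans(1000, True, rng)    # same hospital system as training
external = make_scans(1000, False, rng)   # different hospital system

chosen = fit_stump(train)                 # 0 = marker, 1 = opacity
internal_acc = accuracy(internal, chosen)
external_acc = accuracy(external, chosen)
honest_external_acc = accuracy(external, 1)

print("feature chosen:", ("marker", "opacity")[chosen])
print("internal accuracy:", internal_acc)
print("external accuracy:", external_acc)
print("external accuracy using opacity only:", honest_external_acc)
```

In this sketch the classifier latches onto the marker because it is the easiest way to fit the training data, so its accuracy falls to roughly chance once the marker stops tracking the diagnosis, while the weaker but genuine signal carries over to the second hospital.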

Traditionally, AI functions like a “black box.” The AI model receives large amounts of data to learn from, then users ask the model to make a prediction about a new piece of data. The AI will give an answer, but users typically have no idea why this answer should be reliable.

The team employed a variety of techniques to open up the black box of COVID-19 diagnosis AI models. DeGrave, Janizek, and Lee used saliency maps, which highlight the regions the AI used to determine a COVID-19 diagnosis. They also used generative adversarial networks, which illustrate what the AI “thinks” is important about the image, and manually modified images to see how the AI’s COVID-19 diagnosis would change.

“The reason why we used this large set of techniques, three complementary techniques, is because I think they all overlap each other’s pitfalls nicely,” DeGrave said. “They really complement each other and make the set of experiments much stronger.”
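The idea behind a saliency map, and behind editing an image to see how the prediction changes, can be sketched with a simple occlusion test: blank out one pixel at a time and record how much the model's score moves. The article does not say which saliency method the team used, so this is only one generic approach. The 4x4 "image," the linear `score` function, and its weights are hypothetical stand-ins for a real X-ray and a real neural network.

```python
def score(image, weights):
    """Toy linear 'model': weighted sum of pixel intensities."""
    return sum(
        w * p
        for w_row, p_row in zip(weights, image)
        for w, p in zip(w_row, p_row)
    )

def occlusion_map(image, weights, fill=0.0):
    """Absolute score change when each pixel is replaced by `fill`, one at a time."""
    base = score(image, weights)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            edited = [list(x) for x in image]  # copy so the original is untouched
            edited[r][c] = fill
            heat_row.append(abs(base - score(edited, weights)))
        heat.append(heat_row)
    return heat

# Hypothetical image: the four centre pixels stand in for lung tissue,
# the bottom-left pixel for a text label burned into the corner.
image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.6, 0.5, 0.1],
    [0.1, 0.5, 0.6, 0.1],
    [1.0, 0.1, 0.1, 0.1],
]
# Hypothetical weights of a model that has learned to lean on the corner label.
weights = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.2, 0.2, 0.0],
    [0.0, 0.2, 0.2, 0.0],
    [3.0, 0.0, 0.0, 0.0],
]

heat = occlusion_map(image, weights)
top = max(
    ((r, c) for r in range(4) for c in range(4)),
    key=lambda rc: heat[rc[0]][rc[1]],
)
print("most influential pixel (row, col):", top)
```

Here the most influential pixel is the corner label rather than anything in the lung region, which is the kind of pattern that would flag a shortcut.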

The team found the AI models were using parts of the image, such as annotations, labels, and body positioning, that had nothing to do with COVID-19 to make a COVID-19 diagnosis prediction. These AI models were particularly reliant on shortcut learning because in the limited data available, X-ray images from COVID-19 positive and COVID-19 negative individuals were from different sources.

“Having a problem this severe is fairly unique to COVID,” DeGrave said. “However, there’s a less severe version of the problem that we just see all over the place as well.”

Applying AI to make diagnosis predictions is a popular area of study, but DeGrave said to “be wary also of any other models for any other conditions that were trained in the problematic nature exposed in this paper.”

Janizek said he was surprised when he discovered people were planning to use these problematic models in a clinical setting.

“There needs to be more of these kinds of watchdog type papers, where people are really looking at the reproducibility of existing models and problems that exist out there,” DeGrave said.