Introduction to Data Engineering Tools

Data engineering is the part of the data lifecycle concerned with collecting, transforming, storing, and managing large datasets. As data volumes and complexity grow, traditional data management tools often struggle to keep up with modern demands. This is where data engineering tools come into play. In this article, we’ll explore what data engineering tools are, why they are important, and which types are popular.

What are Data Engineering Tools?

Data engineering tools are software applications that facilitate the process of managing and processing data. These tools offer features for data transformation, data integration, data storage, and data processing. They are designed to handle large datasets and enable organizations to extract insights and value from their data.

Why are Data Engineering Tools Important?

Data engineering tools play a critical role in modern data-driven organizations. They enable organizations to store, process, and analyze large volumes of data with ease. Data engineering tools offer speed, accuracy, and scalability, allowing organizations to extract insights faster and make informed decisions. They also help to reduce the complexity involved in managing data, making it more accessible to analysts and business stakeholders.

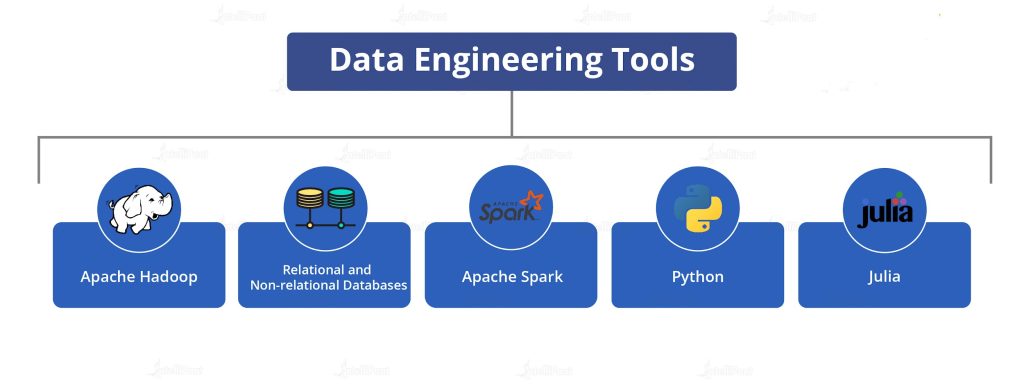

Popular Data Engineering Tools in the Market

There are numerous data engineering tools in the market, and each tool has its unique features and functionalities. Here are some popular data engineering tools:

Hadoop

Hadoop is an open-source framework that provides distributed storage (HDFS) and distributed processing (MapReduce) of large datasets across clusters of computers using simple programming models.
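To make the idea of a "simple programming model" concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce steps behind a word count. It is plain Python rather than the Hadoop API, and the function names (map_phase, shuffle, reduce_phase, word_count) are illustrative assumptions.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group emitted values by key, as a framework does between the two phases."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    # On a real cluster, each document (split) could be mapped on a different node.
    mapped = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
```

The developer writes only the map and reduce functions. In a real deployment the framework takes care of distributing the splits, moving data between phases, and retrying failed tasks.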

Apache Spark

Apache Spark is an open-source distributed computing engine that processes large datasets in memory where possible. It is widely used for batch analytics, near-real-time stream processing, machine learning, and graph processing.

Apache Kafka

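Apache Kafka is an open-source distributed event streaming platform, often used as a message broker, that lets applications publish and subscribe to data streams in real time. Kafka is designed to handle large volumes of data and achieves fault tolerance and scalability by partitioning and replicating data across multiple brokers.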
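The publish and subscribe model can be illustrated with a small in-memory sketch. This is not the Kafka client API. The TopicLog class and its methods are hypothetical, and the sketch ignores partitions, replication, and networking. It shows only the core idea of an append-only log in which each consumer group tracks its own read position.

```python
from collections import defaultdict


class TopicLog:
    """In-memory stand-in for one topic: an append-only list of messages."""

    def __init__(self) -> None:
        self._messages: list[str] = []
        # Each consumer group keeps its own read position (offset) in the log.
        self._offsets: dict[str, int] = defaultdict(int)

    def publish(self, message: str) -> int:
        """Append a message and return its offset in the log."""
        self._messages.append(message)
        return len(self._messages) - 1

    def poll(self, group: str, max_messages: int = 10) -> list[str]:
        """Return unread messages for a group and advance that group's offset."""
        start = self._offsets[group]
        batch = self._messages[start:start + max_messages]
        self._offsets[group] = start + len(batch)
        return batch
```

Because reading does not remove messages from the log, two groups (say, "analytics" and "billing") can consume the same stream independently and at their own pace.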

Amazon Web Services (AWS) Glue

AWS Glue is a fully managed ETL service that makes it easy to move data between data stores. It automates the process of discovering, converting, and moving data with minimal coding.

Google Cloud Dataflow

Google Cloud Dataflow is a fully managed, serverless data processing service for running pipelines written with the Apache Beam SDK. It supports both batch and streaming data processing and is highly scalable.

Key Features and Functions of Data Engineering Tools

Data engineering tools offer several essential functions required for managing and processing data. Here are some key features of data engineering tools:

Data Extraction and Transformation

Data engineering tools enable the extraction of data from various sources and facilitate the process of transforming data into the desired format.
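As a rough illustration of extraction and transformation, the sketch below reads raw CSV text and converts each row into typed fields. The sample data, the column names, and the choice to store amounts as integer cents are assumptions made for the example.

```python
import csv
import io
from datetime import date

# Hypothetical raw export: every value arrives as text, with stray whitespace.
RAW_CSV = """order_id,amount,ordered_on
1001, 19.99 ,2024-01-15
1002,5,2024-02-03
"""


def extract(csv_text: str) -> list[dict[str, str]]:
    """Read CSV text into a list of rows; every value is still a string."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(row: dict[str, str]) -> dict[str, object]:
    """Convert one raw row into typed fields in the desired format."""
    return {
        "order_id": int(row["order_id"]),
        # Store money as integer cents to avoid floating-point drift downstream.
        "amount_cents": round(float(row["amount"].strip()) * 100),
        "ordered_on": date.fromisoformat(row["ordered_on"]),
    }


orders = [transform(row) for row in extract(RAW_CSV)]
```

Keeping extract and transform as separate functions means a new source (for example a JSON API) only needs a new extract step, while the transform logic stays the same.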

Batch and Stream Processing

Data engineering tools offer batch and stream processing capabilities that enable the processing of both historical and real-time data.
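The difference between the two modes can be shown with a small sketch that computes the same total both ways. The function names are illustrative, and a real stream would come from a socket or message queue rather than a Python iterable.

```python
from typing import Iterable, Iterator


def batch_total(readings: list[float]) -> float:
    """Batch: the whole dataset is available, so compute once over all of it."""
    return sum(readings)


def streaming_totals(readings: Iterable[float]) -> Iterator[float]:
    """Stream: update the running result as each reading arrives."""
    total = 0.0
    for value in readings:
        total += value
        yield total  # an up-to-date answer is available after every event
```

The batch function returns one answer after seeing all the historical data, while the streaming function produces an updated answer after every new value without holding the full dataset in memory.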

Data Storage and Management

Data engineering tools provide features for storing and managing data in various formats, including structured, semi-structured, and unstructured data.

Data Integration and ETL

Data engineering tools facilitate the integration of data from multiple sources and automate the process of Extract, Transform, and Load (ETL).
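A minimal sketch of such an ETL job, using only the Python standard library, might look like the following. It integrates two hypothetical sources (a customer CSV export and an orders JSON feed) into an in-memory SQLite database. The table names, column names, and sample data are assumptions made for the example.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources in different formats.
CUSTOMERS_CSV = "customer_id,name\n1,Ada\n2,Grace\n"
ORDERS_JSON = """[
    {"customer_id": 1, "total": 30.0},
    {"customer_id": 1, "total": 12.5},
    {"customer_id": 2, "total": 8.0}
]"""


def run_etl(conn: sqlite3.Connection) -> None:
    """Extract both sources, transform rows to typed tuples, load into tables."""
    conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, total REAL)")

    customers = [
        (int(row["customer_id"]), row["name"])
        for row in csv.DictReader(io.StringIO(CUSTOMERS_CSV))
    ]
    orders = [(o["customer_id"], float(o["total"])) for o in json.loads(ORDERS_JSON)]

    conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
    conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
    conn.commit()


def spend_per_customer(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Query the integrated data: total order value per customer name."""
    query = """
        SELECT c.name, SUM(o.total)
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.customer_id
        GROUP BY c.customer_id, c.name
        ORDER BY c.name
    """
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
run_etl(conn)
```

Once both sources are loaded into one store, a question that spans them (such as spend per customer) becomes a single join instead of custom code for each format.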

Advantages of Using Data Engineering Tools

Using data engineering tools offers numerous benefits for data-driven organizations. Here are some advantages of using data engineering tools:

Improved Data Quality

Data engineering tools enable the cleaning and normalization of data, resulting in improved data quality.
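What cleaning and normalization mean in practice depends on the dataset. As one hedged example, the sketch below assumes contact records with hypothetical "email" and "country" fields, and applies three common rules: normalize formatting, drop rows missing a required field, and remove duplicates.

```python
def clean_records(records: list[dict]) -> list[dict]:
    """Normalize emails and country codes, drop incomplete rows and duplicates."""
    seen: set[str] = set()
    cleaned = []
    for record in records:
        # Normalization: trim whitespace and use a consistent letter case.
        email = (record.get("email") or "").strip().lower()
        country = (record.get("country") or "").strip().upper()
        if not email:
            continue  # required field is missing
        if email in seen:
            continue  # duplicate once formatting differences are removed
        seen.add(email)
        cleaned.append({"email": email, "country": country or "UNKNOWN"})
    return cleaned
```

Note that duplicates are detected after normalization, so " Ada@Example.com " and "ada@example.com" are treated as the same contact.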

Efficient Data Processing

Data engineering tools provide features for processing large datasets efficiently, resulting in faster insights and decision-making.

Cost Savings

Managed and cloud-based data engineering tools let organizations process data without buying and maintaining expensive hardware up front, which can result in cost savings.

Challenges in Implementing Data Engineering Tools

Complexity of Data Engineering Tools

Data engineering tools are complex software systems that take significant time and effort to implement and maintain. To implement them effectively, organizations need skilled personnel who understand the system’s architecture and can modify it as needed. This complexity can also cause interoperability and integration problems, making it difficult to create a cohesive data processing environment.

Data Security and Compliance

Data security and compliance are critical concerns for organizations dealing with large amounts of data. Implementing data engineering tools without these considerations can create security risks and compliance issues that can lead to data breaches and legal consequences. Data engineers must ensure that the data is secure and compliant with relevant regulations, standards, and policies.

Inadequate IT Infrastructure

Data engineering tools require robust IT infrastructure, including hardware, software, and network architectures. Inadequate infrastructure can lead to performance issues and slow data processing, making the data engineering tool ineffective. The cost of implementing these tools can increase significantly if organizations need to invest in new infrastructure, leading to budgetary concerns.

Best Practices for Data Engineering Tool Implementation

Define Clear Objectives

To implement data engineering tools effectively, organizations must have a clear understanding of their objectives. Data engineering work must align with organizational goals, and the chosen tool must support them. Clear objectives help organizations choose the right tool, pipeline design, and infrastructure.

Choose the Right Tool for the Job

Choosing the right tool for the job is critical for effective implementation. Not all data engineering tools are the same. Different tools have different capabilities and limitations, and selecting a tool that meets the specific needs of the organization can make all the difference. Organizations must consider factors such as the data type, volume, and processing requirements when selecting the tool.

Design an Effective Data Pipeline

An effective data pipeline is essential for successful data engineering tool implementation. An optimized pipeline ensures that the data moves smoothly through the system and is processed efficiently. Organizations need to design the pipeline carefully, considering factors such as data transformation, data validation, and data storage.
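One way to picture these design factors is a pipeline built from small stages, where records that fail transformation or validation are set aside instead of stopping the run. The sketch below is a simplified assumption of such a design. The stage names, the PipelineResult class, and the "amount" field are hypothetical, and the storage step is just a Python list.

```python
from dataclasses import dataclass, field
from typing import Callable


def to_number(record: dict) -> dict:
    """Transformation stage: convert the amount field from text to float."""
    return {**record, "amount": float(record["amount"])}


def require_positive(record: dict) -> dict:
    """Validation stage: reject records that break a business rule."""
    if record["amount"] <= 0:
        raise ValueError("amount must be positive")
    return record


@dataclass
class PipelineResult:
    stored: list = field(default_factory=list)
    rejected: list = field(default_factory=list)


def run_pipeline(records: list[dict], stages: list[Callable[[dict], dict]]) -> PipelineResult:
    """Pass each record through every stage; keep failures for later inspection."""
    result = PipelineResult()
    for original in records:
        record = original
        try:
            for stage in stages:
                record = stage(record)
        except (KeyError, ValueError) as error:
            result.rejected.append((original, str(error)))
        else:
            result.stored.append(record)  # storage step: here just a list
    return result
```

Keeping rejected records together with the reason for rejection makes data quality problems visible without blocking the valid records from reaching storage.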

Future of Data Engineering Tools

Increased Automation

Automation is the future of data engineering tools. Automated data processing will reduce the need for manual intervention, resulting in faster and more efficient data processing. Organizations can expect to see increased automation in data transformation, integration, and data flow management.

Advanced Machine Learning Capabilities

Machine learning already plays a role in some data engineering tools, and future tools are expected to offer more advanced capabilities, including predictive analytics, natural language processing, and deep learning.

Conclusion and Recommendations for Data Engineering Tool Selection

Implementing data engineering tools is essential for organizations that require large-scale data processing. However, the complexity of these tools, data security, and infrastructure requirements can present significant challenges. Organizations must select the right tool, define clear objectives, and design an effective data pipeline, taking these challenges into account. Looking to the future, automation and advanced machine learning capabilities offer exciting possibilities for data engineering tools. Organizations that embrace these advanced capabilities will be better positioned for success in the future.

In conclusion, data engineering tools have changed the way organizations process, store, and analyze data, helping them to make more informed decisions. With the emergence of new technologies and evolving business requirements, the future of data engineering tools looks promising. It is important for organizations to carefully evaluate their data engineering requirements and select tools that align with their objectives and long-term strategies. By following best practices and staying updated with the latest trends, organizations can take full advantage of these tools and stay ahead of the competition.

FAQ

What are Data Engineering Tools?

Data Engineering Tools are software applications that enable organizations to process, store, and analyze large volumes of data. These tools provide the necessary infrastructure and capabilities to extract, transform, and load data, perform batch and stream processing, and integrate disparate data sources.

What are the popular Data Engineering Tools in the market?

There are several popular Data Engineering Tools in the market, including Hadoop, Apache Spark, Apache Kafka, Amazon Web Services Glue, and Google Cloud Dataflow. These tools offer a range of features and functionalities, and organizations should evaluate their requirements to select the most suitable tool.

What are the advantages of using Data Engineering Tools?

Data Engineering Tools offer several advantages, including improved data quality, efficient data processing, and cost savings. These tools enable organizations to process large volumes of data quickly and accurately, leading to better insights and informed decision-making.

What are the challenges in implementing Data Engineering Tools?

Implementing Data Engineering Tools can be challenging due to their complexity, data security and compliance concerns, and the need for adequate IT infrastructure. Organizations need to carefully plan and execute their implementation strategies and follow best practices to avoid potential pitfalls.