Introduction to Data Pipelining Tools

Data pipelining tools are an essential part of modern data management. As companies collect ever-larger volumes of data, they need to move that data quickly and reliably between the systems that produce it and the systems that use it. Data pipelining tools automate this movement, letting businesses streamline their data workflows and get more value from their data.

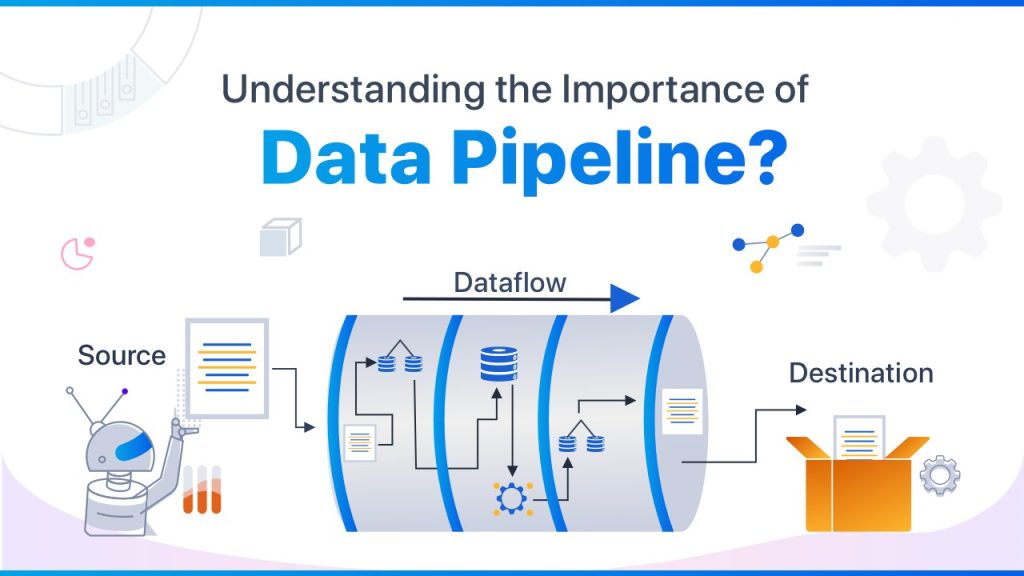

Overview of Data Pipelining

Data pipelining is the process of moving data from one system or platform to another, often transforming it along the way. A pipeline consists of a series of steps, each of which performs a specific action on the data, such as filtering, aggregating, transforming, or loading it into a new system.
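The step-by-step structure can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool works: the order fields (`region`, `status`, `quantity`, `unit_price`) and all function names are hypothetical, and an in-memory list stands in for the destination system.

```python
from collections import defaultdict
from typing import Callable

# A step takes a list of records (dicts) and returns a new list of records.
Step = Callable[[list], list]

def run_pipeline(records: list, steps: list) -> list:
    """Pass the records through each step in order; each step returns a new list."""
    for step in steps:
        records = step(records)
    return records

def filter_completed(records):
    # Filtering: keep only orders that completed.
    return [r for r in records if r["status"] == "completed"]

def add_total(records):
    # Transforming: derive a new field from existing ones.
    return [{**r, "total": r["quantity"] * r["unit_price"]} for r in records]

def total_by_region(records):
    # Aggregating: sum order totals per region.
    sums = defaultdict(float)
    for r in records:
        sums[r["region"]] += r["total"]
    return [{"region": region, "total": total} for region, total in sorted(sums.items())]

def make_loader(destination: list) -> Step:
    # Loading: append results to a destination; a plain list stands in for a warehouse table.
    def load(records):
        destination.extend(records)
        return records
    return load

orders = [
    {"region": "EU", "status": "completed", "quantity": 2, "unit_price": 5.0},
    {"region": "US", "status": "cancelled", "quantity": 1, "unit_price": 9.0},
    {"region": "US", "status": "completed", "quantity": 3, "unit_price": 4.0},
    {"region": "EU", "status": "completed", "quantity": 1, "unit_price": 2.5},
]
warehouse: list = []
run_pipeline(orders, [filter_completed, add_total, total_by_region, make_loader(warehouse)])
print(warehouse)  # [{'region': 'EU', 'total': 12.5}, {'region': 'US', 'total': 12.0}]
```

Because every step has the same shape (records in, records out), steps can be reordered, tested in isolation, or swapped without touching the others.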

Why Data Pipelining is Important

In today’s data-driven business world, companies need to move data between systems quickly and efficiently to make the most of it. Data pipelining automates this work, so teams spend less time moving data around and more time analyzing and acting on it. Pipelining tools can also improve data quality by automating how data is cleaned and transformed.

Understanding the Importance of Data Pipelining in Business

The Role of Data Pipelining in Business Intelligence

Data pipelining plays a critical role in business intelligence by moving data from disparate sources into a single analytics platform. Combining those sources can reveal insights that are hard or impossible to see when each source is analyzed in isolation.
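A minimal sketch of combining two sources, assuming hypothetical extracts from a CRM system and a billing system that share a `customer_id` key. Joining them answers a question neither source can answer alone: how much revenue each customer segment generates.

```python
from collections import defaultdict

# Hypothetical extracts from two separate systems.
crm_customers = [
    {"customer_id": 1, "name": "Acme", "segment": "enterprise"},
    {"customer_id": 2, "name": "Globex", "segment": "smb"},
]
billing_invoices = [
    {"customer_id": 1, "amount": 1200.0},
    {"customer_id": 1, "amount": 800.0},
    {"customer_id": 2, "amount": 150.0},
]

def revenue_by_segment(customers, invoices):
    # Index CRM records by the shared key so each invoice can be matched with one lookup.
    segment_of = {c["customer_id"]: c["segment"] for c in customers}
    totals = defaultdict(float)
    for inv in invoices:
        # Invoices with no matching customer are grouped under "unknown" instead of being dropped silently.
        segment = segment_of.get(inv["customer_id"], "unknown")
        totals[segment] += inv["amount"]
    return dict(totals)

print(revenue_by_segment(crm_customers, billing_invoices))
# {'enterprise': 2000.0, 'smb': 150.0}
```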

How Data Pipelining Improves Data Quality

Data pipelining can help improve data quality by automating the process of cleaning and transforming data as it moves through the pipeline. This can include removing duplicates, standardizing data formats, and aggregating data from multiple sources, all of which can help improve the accuracy and reliability of the data.
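The sketch below illustrates two of these cleaning steps: standardizing formats and removing duplicates. The field names and the list of accepted date layouts are assumptions for illustration. In particular, it assumes slash-separated dates are day-first; a value such as `01/03/2024` is ambiguous in general, so each source's convention has to be known rather than guessed.

```python
from datetime import datetime

# Hypothetical input layouts; slash-separated dates are assumed to be day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")

def standardize_date(value: str) -> str:
    """Convert a date in any of the known layouts to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # Not this layout; try the next one.
    raise ValueError(f"unrecognized date format: {value!r}")

def clean(records):
    seen = set()
    cleaned = []
    for r in records:
        # Standardize: trim whitespace and lowercase emails, convert dates to ISO 8601.
        email = r["email"].strip().lower()
        row = {"email": email, "signup_date": standardize_date(r["signup_date"])}
        # Deduplicate on the normalized email, keeping the first occurrence.
        if email not in seen:
            seen.add(email)
            cleaned.append(row)
    return cleaned

raw = [
    {"email": " Ana@Example.com ", "signup_date": "2024-03-01"},
    {"email": "ana@example.com", "signup_date": "01/03/2024"},
    {"email": "bo@example.com", "signup_date": "03-15-2024"},
]
print(clean(raw))
# [{'email': 'ana@example.com', 'signup_date': '2024-03-01'}, {'email': 'bo@example.com', 'signup_date': '2024-03-15'}]
```

Normalizing before deduplicating matters: the first two raw rows only become recognizable as duplicates once the email casing and whitespace are standardized.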

Different Types of Data Pipelining Tools

Cloud-Based Data Pipelining Tools

Cloud-based data pipelining tools are becoming increasingly popular because of their flexibility and scalability. They make it easy to move data between services on cloud platforms such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure, and they often include built-in integrations with popular analytics and visualization tools.

Open-Source Data Pipelining Tools

Open-source data pipelining tools allow users to modify the source code to suit their specific needs. This can be a huge advantage for businesses with unique data management requirements or those looking to save money on software licenses.

Proprietary Data Pipelining Tools

Proprietary data pipelining tools are commercial software products that are developed and sold by software vendors. These tools often have advanced features and are backed by professional support, making them a good choice for companies looking for a reliable data management solution.

Key Features of Data Pipelining Tools

Data Integration Capabilities

Data integration capabilities are perhaps the most important feature of data pipelining tools. These tools should be able to handle a wide variety of data sources, including structured and unstructured data, and provide robust APIs and connectors for integrating with third-party systems.

Scalability and Flexibility

Data pipelining tools should be able to scale up or down as needed to accommodate changing data volumes. They should also be flexible enough to handle complex data flows and transformations.

Data Security and Compliance

Data security and compliance are critical considerations for any data management process. Data pipelining tools should provide strong security features, including encryption and access controls, and should help organizations comply with relevant regulations such as GDPR and HIPAA.

Choosing the Right Data Pipelining Tool for Your Business

Data pipelining is a critical process in any modern business that requires efficient and timely data management. Choosing the right data pipelining tool for your business can be a daunting task, given the numerous options available in the market. Some of the factors that you need to consider include the data source and destination, the volume of data, the frequency of data transmission, and your budget.

Factors to Consider When Choosing a Data Pipelining Tool

One of the most important factors to consider is the data source and destination: the tool you choose must be able to connect to the systems you read from and write to. Data volume matters as well; if you process large volumes, you need a tool that can handle the load without sacrificing speed or accuracy. Transmission frequency is another consideration, since some tools are designed for scheduled batch jobs and others for continuous streaming. Finally, weigh your budget, as some tools can be expensive.

Popular Data Pipelining Tools in the Market

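There are many data pipelining tools available, each with its own strengths and weaknesses. Popular options include Apache NiFi, Apache Kafka, Google Cloud Dataflow, AWS Glue, and Microsoft Azure Data Factory. They differ in focus: Apache Kafka is a distributed event-streaming platform, Apache NiFi is a flow-based tool for routing and transforming data between systems, Google Cloud Dataflow is a managed service for running Apache Beam batch and streaming pipelines, and AWS Glue and Azure Data Factory are managed services for building and orchestrating data integration (ETL) workflows. Their support for real-time processing varies, so match the tool to how quickly your data needs to arrive.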

Implementing and Maintaining Data Pipelines

Implementing and maintaining data pipelines can be a challenging task, but with the right tools and best practices, you can ensure an efficient and effective pipeline.

Best Practices for Implementing Data Pipelines

To implement a robust data pipeline, make sure it is scalable, reliable, and fault-tolerant, and that it handles each stage effectively: data ingestion, processing, storage, and retrieval. Agile methodologies and continuous integration and continuous delivery (CI/CD) practices can also help you ship pipeline changes quickly and safely.
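One common way to make a pipeline step fault-tolerant is to retry transient failures with exponential backoff. The `with_retries` helper below is a hypothetical sketch, not part of any library. It assumes the wrapped step is idempotent (safe to run more than once), and it accepts a `sleep` function so the example can record the delays instead of actually waiting.

```python
import time

def with_retries(func, max_attempts=4, base_delay=0.5,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a transient error, wait base_delay * 2**attempt seconds and try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the error so the failure is visible.
            sleep(base_delay * 2 ** attempt)

# Usage: a hypothetical extract step that fails twice before succeeding.
calls = {"n": 0}

def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row-1", "row-2"]

delays = []
rows = with_retries(flaky_extract, sleep=delays.append)  # Record delays instead of sleeping.
print(rows, delays)  # ['row-1', 'row-2'] [0.5, 1.0]
```

Only errors listed in `retry_on` are retried; anything else (for example, a schema error) fails immediately, because retrying would not fix it.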

Common Challenges in Maintaining Data Pipelines

Maintaining data pipelines brings its own challenges, including data quality issues, data silos, and inconsistent data across systems. Monitoring the pipeline regularly, validating data as it flows through, and applying updates and patches promptly help you catch many of these problems early.
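Automated validation is one way to catch data quality issues and inconsistencies before they spread downstream. This minimal sketch checks each record against a few rules and sets failing records aside in a quarantine list rather than loading them; the rules, field names, and accepted currencies are all hypothetical.

```python
ACCEPTED_CURRENCIES = {"USD", "EUR", "GBP"}  # Hypothetical business rule.

def validate(record):
    """Return a list of human-readable problems; an empty list means the record passed."""
    problems = []
    if not record.get("order_id"):
        problems.append("missing order_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append(f"invalid amount: {amount!r}")
    if record.get("currency") not in ACCEPTED_CURRENCIES:
        problems.append(f"unexpected currency: {record.get('currency')!r}")
    return problems

def split_valid(records):
    # Route failing rows to quarantine instead of loading them, so bad data does not propagate.
    valid, quarantined = [], []
    for r in records:
        problems = validate(r)
        if problems:
            quarantined.append({"record": r, "problems": problems})
        else:
            valid.append(r)
    return valid, quarantined

batch = [
    {"order_id": "A1", "amount": 19.99, "currency": "USD"},
    {"order_id": "", "amount": -5, "currency": "usd"},
]
valid, quarantined = split_valid(batch)
print(len(valid), quarantined[0]["problems"])
# 1 ['missing order_id', 'invalid amount: -5', "unexpected currency: 'usd'"]
```

Keeping the quarantined records, together with the reasons they failed, makes it possible to fix the source and replay them later instead of losing them.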

Best Practices for Data Pipelining

Designing and implementing an effective data pipeline means following a few key practices.

Designing an Effective Data Pipeline

To design an effective data pipeline, make it scalable, modular, and easy to maintain. A modular pipeline splits the work into independent stages that can be tested, replaced, or scaled on their own. The pipeline should also handle the different data formats and data types your sources produce.
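As a sketch of modular, format-agnostic ingestion: each input format gets its own small parser, and every parser's output is mapped to one common schema, so downstream stages never need to know where a record came from. The `sku`/`qty` schema and the `PARSERS` registry are assumptions for illustration; only Python's standard `csv` and `json` modules are used.

```python
import csv
import io
import json

def parse_csv(text):
    # csv.DictReader yields one dict per row, keyed by the header line.
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]

def parse_jsonl(text):
    # JSON Lines: one JSON object per non-empty line.
    return [json.loads(line) for line in text.splitlines() if line.strip()]

# Hypothetical registry mapping a format name to its parser.
PARSERS = {"csv": parse_csv, "jsonl": parse_jsonl}

def to_common_schema(record):
    # Normalize field types so downstream stages see one shape regardless of source format.
    return {"sku": str(record["sku"]), "qty": int(record["qty"])}

def ingest(text, fmt):
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"no parser registered for format {fmt!r}") from None
    return [to_common_schema(r) for r in parser(text)]

csv_text = "sku,qty\nA-1,3\nB-2,5\n"
jsonl_text = '{"sku": "A-1", "qty": 2}\n{"sku": "C-3", "qty": 1}\n'
print(ingest(csv_text, "csv") + ingest(jsonl_text, "jsonl"))
# [{'sku': 'A-1', 'qty': 3}, {'sku': 'B-2', 'qty': 5}, {'sku': 'A-1', 'qty': 2}, {'sku': 'C-3', 'qty': 1}]
```

With this layout, supporting a new format means registering one more parser; none of the downstream stages change.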

Monitoring and Troubleshooting Data Pipelines

To monitor and troubleshoot your data pipeline effectively, put monitoring and alerting systems in place. Tracking signals such as stage failures, processing time, and record counts helps you spot and resolve issues promptly, before bad or missing data reaches downstream systems and reports.
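A lightweight version of stage-level monitoring can be sketched by wrapping each stage so that it logs row counts and duration and raises an alert when a stage fails or produces fewer rows than expected. The `monitored` wrapper, its `min_output_rows` threshold, and the `alert` callback are hypothetical; a real deployment would route alerts to a paging or chat system rather than to a log or a list.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")

def monitored(stage_name, func, records, min_output_rows=1, alert=log.warning):
    """Run one stage, log row counts and duration, and alert on failures or suspiciously small output."""
    start = time.perf_counter()
    try:
        output = func(records)
    except Exception:
        alert(f"{stage_name}: stage failed")
        raise  # Re-raise so the orchestrator also sees the failure.
    elapsed = time.perf_counter() - start
    log.info("%s: %d rows in, %d rows out, %.3fs", stage_name, len(records), len(output), elapsed)
    if len(output) < min_output_rows:
        alert(f"{stage_name}: produced {len(output)} rows, expected at least {min_output_rows}")
    return output

# Usage: a filter that unexpectedly removes every row triggers an alert.
alerts = []
rows = [{"status": "cancelled"}, {"status": "cancelled"}]
kept = monitored("filter_completed",
                 lambda rs: [r for r in rs if r["status"] == "completed"],
                 rows, alert=alerts.append)
print(alerts)  # ['filter_completed: produced 0 rows, expected at least 1']
```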

Future Trends in Data Pipelining Tools

Data pipelining tools are continually evolving, and new trends are emerging.

The Emergence of AI-Driven Data Pipelining

AI-driven data pipelining is an emerging trend that is gaining popularity. It uses AI and machine learning algorithms to automate parts of the pipeline and optimize data processing in real time.

Advancements in Cloud-Based Data Pipelining

Cloud-based data pipelining is also gaining popularity, thanks to the scalability and cost-effectiveness of cloud technologies. Cloud-based tools offer easy deployment, straightforward integration with other tools, and advanced security features.

In conclusion, data pipelining tools play a crucial role in today’s business landscape. They help companies manage their data efficiently and effectively, enabling informed business decisions. By understanding why data pipelining matters, the types of tools available, their key features, and best practices, you can choose the right tool for your business and design an effective data pipeline. With technology advancing constantly, the future of data pipelining tools looks promising, so keep an eye on new trends and developments in this field to stay ahead.

FAQ

What is Data Pipelining?

Data pipelining is the process of moving data from one system to another, transforming it along the way, and ensuring its accuracy and quality.

What are the Benefits of Using Data Pipelining Tools?

Data pipelining tools help companies manage their data efficiently and effectively, enabling them to make informed business decisions. They can improve data quality, reduce manual errors, and support data security and compliance.

What are the Different Types of Data Pipelining Tools?

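There are three main types of data pipelining tools: cloud-based, open-source, and proprietary. Cloud-based tools are hosted on a cloud platform; open-source tools publish their source code under a license that lets anyone use and modify it; and proprietary tools are commercial products that require a license to use. The categories can overlap: for example, some cloud services are managed versions of open-source tools.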

What Factors Should I Consider When Choosing a Data Pipelining Tool?

When choosing a data pipelining tool, consider the tool’s data integration capabilities, scalability, flexibility, security, and compliance features. Also, consider your organization’s budget, data volume, and technical expertise.