Source – searchmicroservices.techtarget.com

Containers aren’t a new concept; the first steps toward what became Linux containers were taken in 1979, when Unix gained the chroot call. Since then, there have been about a dozen evolutionary steps in container deployment of applications, and we’re not done yet. Containers also interact with supporting technologies, like DevOps, and with competing ones, like virtual machines (VMs), which puts evolutionary pressure on all the technologies involved. The container of the future will be very different from that of today, but it’s still possible for users to track the trends and make the most of each step along the way.

All container architectures differ from VM architectures in what they virtualize: a VM virtualizes only the hardware, while a container virtualizes the hardware plus at least the basic platform software. That means container applications share the OS and some middleware elements, while VM models require that all of this software be duplicated in each VM. The container approach reduces overhead, allowing more applications to run per server.
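To make the overhead argument concrete, here is a rough back-of-the-envelope sketch in Python. The function name `apps_per_server` and every number in it (server size, application size, OS overhead) are assumptions chosen for illustration, not measurements, and the model deliberately ignores hypervisor overhead and CPU limits.

```python
# Illustrative only: all figures below are hypothetical assumptions.

def apps_per_server(server_ram_gb: float, app_ram_gb: float,
                    per_instance_overhead_gb: float,
                    shared_overhead_gb: float = 0.0) -> int:
    """Return how many application instances fit in a server's memory.

    per_instance_overhead_gb: memory duplicated for every instance
        (for a VM, its own guest OS and middleware).
    shared_overhead_gb: memory paid once per server
        (for containers, the shared host OS and middleware).
    """
    usable = server_ram_gb - shared_overhead_gb
    if usable <= 0:
        return 0
    return int(usable // (app_ram_gb + per_instance_overhead_gb))


# Hypothetical 64 GB server running 1 GB applications.
# VM model: each instance carries its own 1 GB guest OS.
vm_count = apps_per_server(64.0, 1.0, per_instance_overhead_gb=1.0)
# Container model: one 2 GB OS and middleware layer shared by all instances.
container_count = apps_per_server(64.0, 1.0, per_instance_overhead_gb=0.0,
                                  shared_overhead_gb=2.0)

print(vm_count, container_count)  # prints: 32 62
```

Under these assumed figures the shared-OS model fits roughly twice as many applications on the same server. The real ratio depends entirely on how large the duplicated software is relative to the application itself.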

Starting with container deployment

Early container deployment presumed that the users and applications were well-behaved and didn’t require mutual protection or security. This permitted errors or malice to create stability and security problems. So, in the first decade of container evolution, the focus was on improving the isolation of containers. This started with several “jail” concepts that focused mostly on isolating the file systems of containers and evolved to the landmark Solaris Containers, which took advantage of an enhanced Solaris OS feature called Zones to further isolate containers.

Google introduced several container architectures starting in 2006. These could partition and allocate hardware, storage and network resources among containers, giving users greater control over how one container could affect not only the security but also the performance of others sharing the same server. These improvements were gradually introduced into Linux itself, and that jump-started modern container evolution.

The Linux LXC container project and Google’s LMCTFY work, which launched Kubernetes orchestration, jumped off at this point (early this decade), and it was these initiatives that culminated in Docker. Docker was designed to take the technical framework for containers (isolation and resource control) and operationalize it. Kubernetes and Docker, respectively the orchestration standard and the architecture standard for containers, are increasingly merging, so it’s best to think of them as a single approach. The combination is the de facto market leader in containerization today (rkt from CoreOS is an alternative container runtime), but it is not the end of the evolution. Two new pathways have opened for containers, and these will build the path to the future.

Container deployment and cloud

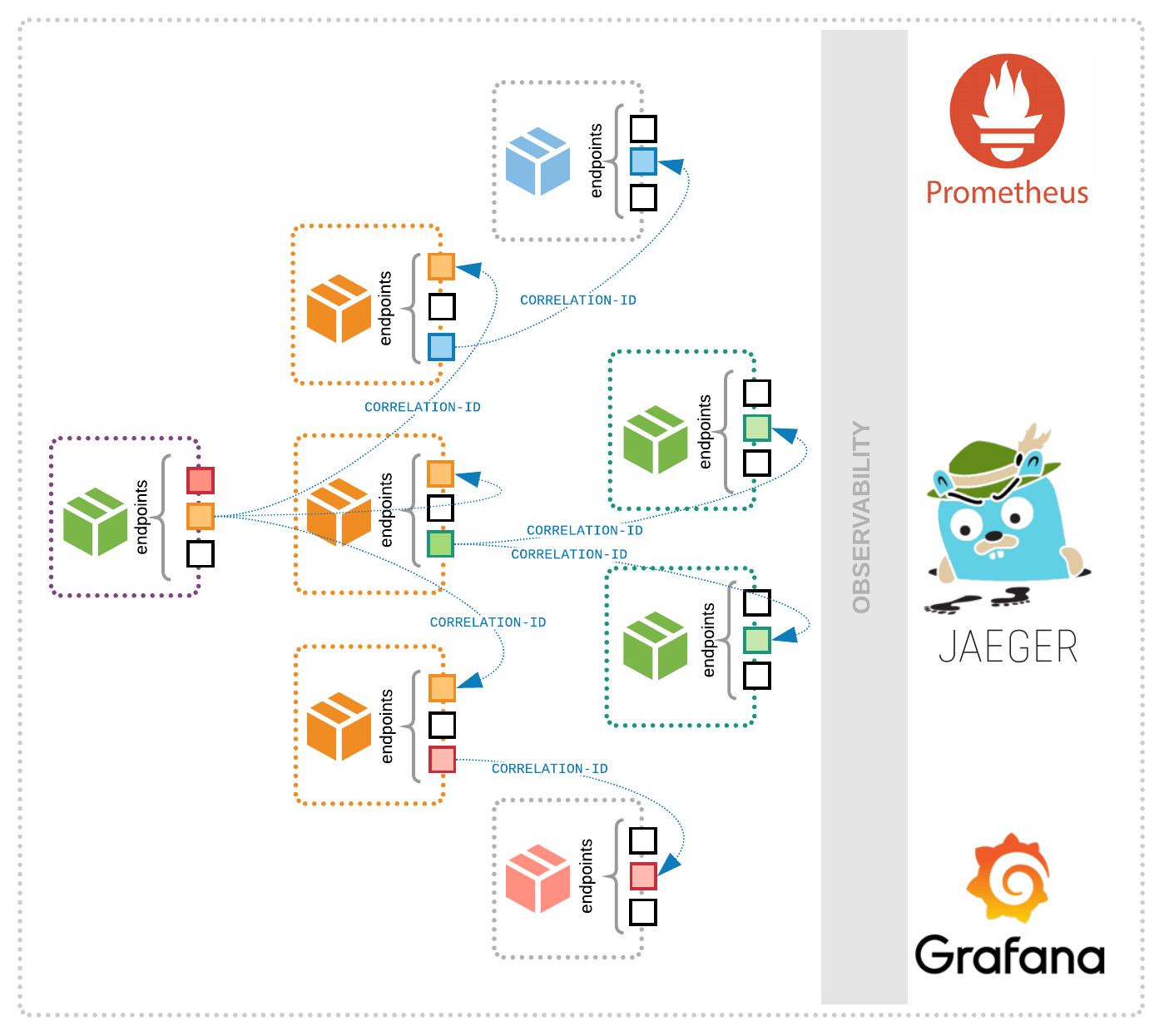

The first of these is the explosion in public cloud container services. Google, Amazon and Microsoft all offer container services that facilitate user extension of private container use into the cloud, most often for hybrid cloud applications. Kubernetes is becoming a de facto standard for orchestration for these applications, though other container orchestration models are also available. From the orchestration focus of cloud-based container services, it seems clear that the focus of container architecture evolution is increasingly application lifecycle management, the operational side of container deployment.

The second pathway is a kind of convergence with VMs. All of the traditional VM providers, both public cloud and private software stacks, have some support for containers. Most focus on deploying VMs that, in turn, become container hosts, and on supporting unified management of containers and VMs in such an environment. That has created a technology focus, again on DevOps or orchestration and, behind that, on application lifecycle management overall. Because this application lifecycle focus is shared with the public cloud container service pathway, it seems clear that container architectures will develop through greater support for operationalizing application deployment, scaling and redeployment, and for organizing resources and application components into a single pool.
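The operational tasks named above (deployment, scaling and redeployment) are commonly handled by comparing a desired state with what is actually running and acting on the difference. The Python sketch below illustrates that idea only. The `Instance` class, the `reconcile` function and the action strings are hypothetical and are not the API of Kubernetes, Docker or any other orchestrator.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Instance:
    """A running copy of an application component (hypothetical model)."""
    name: str
    healthy: bool


def reconcile(app: str, desired: int, running: List[Instance]) -> List[str]:
    """Return the actions that move `running` toward `desired` healthy copies."""
    actions: List[str] = []
    healthy = [inst for inst in running if inst.healthy]

    # Redeployment: failed instances are stopped so they can be replaced.
    for inst in running:
        if not inst.healthy:
            actions.append(f"stop {inst.name}")

    shortfall = desired - len(healthy)
    if shortfall > 0:
        # Deployment or scale-up: start enough new copies to close the gap.
        actions.extend(f"start {app}" for _ in range(shortfall))
    elif shortfall < 0:
        # Scale-down: stop the healthy copies beyond the desired count.
        actions.extend(f"stop {inst.name}" for inst in healthy[desired:])
    return actions


current = [Instance("web-1", True), Instance("web-2", False)]
print(reconcile("web", 3, current))
# prints: ['stop web-2', 'start web', 'start web']
```

The same function covers first deployment (nothing running yet), scaling in either direction and replacement of failed instances, which is one reason lifecycle management tends to converge on a single operational model.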

DevOps and container architecture

Two groups are shaping container architecture: a development-centric group and an operationalization group. The development-centric group is focusing on making containers do what a standalone system and platform software would do, that is, making containers a faithful replica of a dedicated server at the programming level. This is where work on database handling, interprocess connections at the logical (programming language) level and middleware integration takes place. A committee of container advocates from this group would seem to have a “fix it” mindset; they’ve accepted containers, and they just want them to work.

The operationalization group focuses on making deployment and operational management of the application lifecycle easy. This group is actually looking beyond what a container does to what a system of containers might need and do. Orchestration or DevOps has been the primary focus here, and work with application operationalization has now demonstrated that many of the goals of the development-centric group can also be met using operational tools. That’s good because it would mean that container evolutionary paths are converging.

In the long run, it is operationalization that will move container architectures into the future. Containers, microservices, cloud computing and other modern trends combine with each other and with business goals to create IT policy. That policy, however it prioritizes these technical elements, will stand or fall based on operational efficiency, and so, over time, container trends will become a point of focus for application operational trends. Plan for that now, and you’ll be ahead of the game.